Network capacity growth requires complex and expensive planning, upgrade and maintenance operations, leading to continuously rising costs. Deploying additional revenue-generating services usually means additional hardware and a longer time-to-market.

The only solution is to build and operate networks like a cloud. Cloud providers such as AWS, Microsoft Azure and Google Cloud have already solved the same problems. They have developed a cloud computing infrastructure that can grow efficiently to meet customer demand in terms of both resources and services.

Inspired by hyperscalers, some disruptive vendors (such as DriveNets) have taken the same path and adapted it to networks.

AT&T was the first major service provider to drive the industry toward this evolution. AT&T is transforming its network with a software-based, cloud-native solution: its IP core is powered by the DriveNets Network Cloud solution. Many other service providers around the world are following suit.

It’s time to clarify how a cloud-native, disaggregated network solution solves current network scalability issues.

The compute infrastructure: easy to scale, easy to operate, fast to deploy new services

First, let’s go back to the compute infrastructure that cloud giants have built. Such infrastructure is mainly based on three technological choices:

- Hardware standardization: removes all application-specific, proprietary hardware and instead uses only standard components (servers, switches, memory, storage…) across all applications

- Virtualization technology: creates an abstraction layer over hardware components to build a shared pool of resources for the application layer

- Cloud-native software: enables microservices and container-based architectures, automated by orchestration systems (e.g., Kubernetes)

This compute infrastructure can therefore be grown and operated in several ways:

- Scale-up: Add (or remove) resources, such as a server's RAM, to increase infrastructure capacity, within the limits the hardware devices allow.

- Scale-out: Add (or remove) hardware devices in existing clusters to increase infrastructure capacity. The cluster's devices, including the new ones, are managed as a single entity to ease and automate operations.

- Auto-scaling: Provision compute instances on the shared infrastructure on a schedule, dynamically, or predictively, based on provisioning policies, live monitoring of instances and infrastructure resources, and workload load balancing.
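To make the auto-scaling idea more concrete, here is a minimal Python sketch of a reactive (dynamic) scaling rule. It borrows the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), and clamps the result to policy bounds. The `ScalingPolicy` class, its fields, and `desired_instances` are hypothetical names chosen for illustration; scheduled and predictive provisioning are not modeled here.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical provisioning policy (illustrative field names only)."""
    target_utilization_pct: float  # desired average utilization per instance, in percent
    min_instances: int             # never scale in below this count
    max_instances: int             # never scale out above this count
    tolerance: float = 0.1         # ignore small deviations to avoid flapping


def desired_instances(current: int, observed_utilization_pct: float,
                      policy: ScalingPolicy) -> int:
    """Reactive rule modeled on the Kubernetes HPA formula:
    desired = ceil(current * observed / target), clamped to [min, max]."""
    ratio = observed_utilization_pct / policy.target_utilization_pct
    if abs(ratio - 1.0) <= policy.tolerance:
        desired = current  # close enough to target: keep the current count
    else:
        desired = math.ceil(current * ratio)
    return max(policy.min_instances, min(policy.max_instances, desired))


if __name__ == "__main__":
    policy = ScalingPolicy(target_utilization_pct=60, min_instances=2, max_instances=20)
    print(desired_instances(4, 90, policy))  # load above target: scale out
    print(desired_instances(4, 15, policy))  # load far below target: scale in to the floor
```

With a 60% target, four instances running at 90% yield six instances, while four instances at 15% shrink to the policy floor of two. The tolerance band is a common design choice that keeps the instance count stable when utilization hovers near the target.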

Once running on the compute infrastructure, container-based applications can be deployed and scaled easily through the container orchestration and auto-scaling systems that cloud providers deliver. Containers are spun up based on usage demand or application-related policies to adapt to changing traffic patterns.

With cloud computing, deploying new revenue-generating applications became fast and resource-efficient.

Let’s go back to networking and network-centric services.

Many professionals in the field looked at the evolution of cloud computing and wondered whether their services could also be "cloudified" and gain the same scalability and operational advantages.

Then came the Network Function Virtualization (NFV) trend. It failed, however, because network-centric workloads performed poorly on standard servers. In fact, in its early years, DriveNets tried this approach as well and failed like the others.

A few years ago, the real solution finally came to light. With the availability of high-performance merchant silicon, networking white boxes could be built to support intensive data plane requirements. Distributed architectures emerged as network operating system (NOS) vendors learned to stack these boxes and build routing solutions for the new architecture. This is the basis of the OCP Distributed Disaggregated Chassis (DDC) model and of DriveNets Network Cloud.

Network Cloud: Cloud scalability for network-intensive workloads

Global internet bandwidth usage rose 34% from 2019 to 2020 and a further 29% in 2021, reaching 786 terabits per second (Tbps) (The Global Internet Phenomena, January 2022, Sandvine). Growth at this pace makes reducing cost-per-bit almost impossible for service providers.
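As a quick worked check of what these figures imply, assume both percentages are year-over-year increases in the same bandwidth metric $B$. Compounding them back from the 2021 value gives the implied earlier levels and the two-year growth factor:

$$
B_{2020} = \frac{B_{2021}}{1.29} = \frac{786\ \text{Tbps}}{1.29} \approx 609.3\ \text{Tbps},
\qquad
B_{2019} = \frac{B_{2021}}{(1.34)(1.29)} = \frac{786\ \text{Tbps}}{1.7286} \approx 454.7\ \text{Tbps}
$$

A growth factor of $1.34 \times 1.29 = 1.7286$ means demand rose by roughly 73% in two years, so cost-per-bit would have to fall by about the same proportion just to hold total network cost flat.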

Meanwhile, activating simple enterprise VPN access can take weeks, and launching new services can take months.

The most effective solution is to adopt the hyperscalers' approach and technology choices for compute infrastructure and adapt them to service providers' networks.

That’s where network cloud solutions come into play.

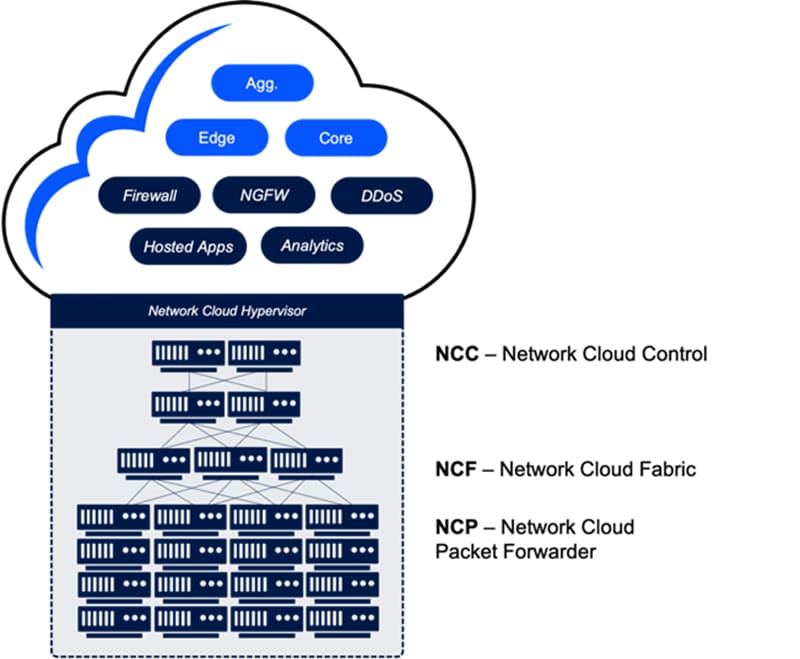

These solutions (for example, DriveNets Network Cloud) offer a networking-optimized, cloud-native, multiservice, shared infrastructure that addresses both growing bandwidth demand and the need to launch new revenue-generating services quickly, just like a cloud computing infrastructure.

DriveNets Network Cloud solution

Compared with an integrated chassis setup, the DriveNets Network Cloud architecture provides optimal, efficient scalability:

| | Network Cloud | Integrated chassis |
|---|---|---|
| Capacity scale-out | | |
| Capacity scale-up | | |
| Service scale-out | | |
| Orchestration | | |

Network scalability is all about growing network capacity with resource and operational efficiency while deploying new (and ideally, many) services quickly. Inspired by the hyperscalers, the open, disaggregated and distributed DriveNets Network Cloud architecture addresses this challenge most effectively.

It’s time to build the future of networks, now!
