NeoCloud Case Study: Highest-Performance Unified Fabric for Compute, Storage and Edge

WhiteFiber’s Challenge

Until recently, WhiteFiber relied only on InfiniBand for its AI cluster back-end networks. However, driven by a growing need for greater flexibility and vendor diversity, the company began exploring Ethernet-based alternatives. As a customer-centric provider, WhiteFiber optimizes performance across the entire stack – from data centers to compute, storage, networking, and backbone. WhiteFiber’s primary challenge was adopting Ethernet without compromising the high GPU performance previously delivered by InfiniBand.

Initial testing confirmed that traditional Ethernet solutions struggled under demanding AI workloads, leading to network congestion, packet loss, and unreliable performance. It became clear that traditional Ethernet wasn’t inherently suited to the demands of large-scale AI workloads. WhiteFiber needed the best of both worlds for its back-end network – the operational flexibility of standard Ethernet combined with the performance characteristics of InfiniBand.

DriveNets Stood Out from the Crowd

When WhiteFiber evaluated various alternatives, DriveNets Network Cloud-AI emerged as the ideal solution. Its scheduled fabric approach leverages standard Ethernet while delivering predictable, lossless connectivity, which translated to strong job completion time (JCT) performance right “out of the box,” with minimal network fine-tuning.

Beyond high performance, DriveNets stood out for two key capabilities:

- Delivers lossless, unified fabric for large-scale AI clusters, connecting storage and compute on a single fabric

- Uses fabric-level traffic isolation to ensure consistent, high performance in multi-tenant environments that dynamically adapt to any AI model

Solution

WhiteFiber deployed DriveNets Network Cloud-AI for its back-end network, unifying both compute and storage under a single fabric.

Supported by the DriveNets Infrastructure Services (DIS) team, the deployment process was fast and required minimal fine-tuning.

The DriveNets solution delivered higher collective communications performance (shown by NCCL testing of bus bandwidth), exhibiting a significant improvement compared to other Ethernet solutions tested. This translates to better job completion times for end users.
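For context, the bus bandwidth figure reported by the open-source nccl-tests benchmarks is derived from the measured algorithm bandwidth (message size divided by elapsed time), scaled by a factor that depends on the collective and the number of ranks. The sketch below illustrates that calculation. The function name and the sample numbers are hypothetical and chosen only for illustration; they are not WhiteFiber or DriveNets measurements.

```python
# Illustrative sketch: converting a measured collective time into "bus bandwidth"
# using the scaling factors documented by the nccl-tests project.
# The function name and the sample inputs below are hypothetical.

def bus_bandwidth_gb_s(message_bytes: int, elapsed_s: float, ranks: int,
                       collective: str = "all_reduce") -> float:
    """Return bus bandwidth in GB/s for one collective operation."""
    if ranks < 2 or elapsed_s <= 0:
        raise ValueError("need at least 2 ranks and a positive elapsed time")

    # Algorithm bandwidth: bytes moved per second from the caller's point of view.
    alg_bw = message_bytes / elapsed_s / 1e9

    # Correction factors so the result reflects per-link hardware load
    # independently of the number of ranks.
    factors = {
        "all_reduce": 2 * (ranks - 1) / ranks,
        "all_gather": (ranks - 1) / ranks,
        "reduce_scatter": (ranks - 1) / ranks,
        "broadcast": 1.0,
        "reduce": 1.0,
    }
    if collective not in factors:
        raise ValueError(f"unsupported collective: {collective}")
    return alg_bw * factors[collective]


# Hypothetical example: a 1 GB all-reduce across 512 ranks finishing in 10 ms.
print(f"{bus_bandwidth_gb_s(1_000_000_000, 0.01, 512):.1f} GB/s")
```

Because the factor normalizes for rank count, bus bandwidth values can be compared across cluster sizes and against the hardware's peak link rate, which is why it is commonly used as a comparison metric between fabrics.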

DriveNets’ scheduled fabric technology minimizes congestion and jitter, providing predictable connectivity with consistent low latency and nanosecond failover recovery. The platform’s end-to-end virtual output queueing (VOQ) offers inherent traffic isolation, effectively mitigating multi-tenancy issues like the “noisy neighbor” effect.

Project key requirements

- Unified fabric: leverage the performance and operational simplicity of a unified fabric solution for storage, compute, and edge connectivity

- High performance at scale: build a 512-GPU cluster with uncompromised GPU performance

- Fast and simple deployment: achieve the fastest path to optimal JCT, with rapid deployment and minimal network fine-tuning

- Standard Ethernet-based solution: enjoy the benefits of the open, standard Ethernet protocol

- Multi-tenant support: serve multiple tenants leveraging a mature isolation capability

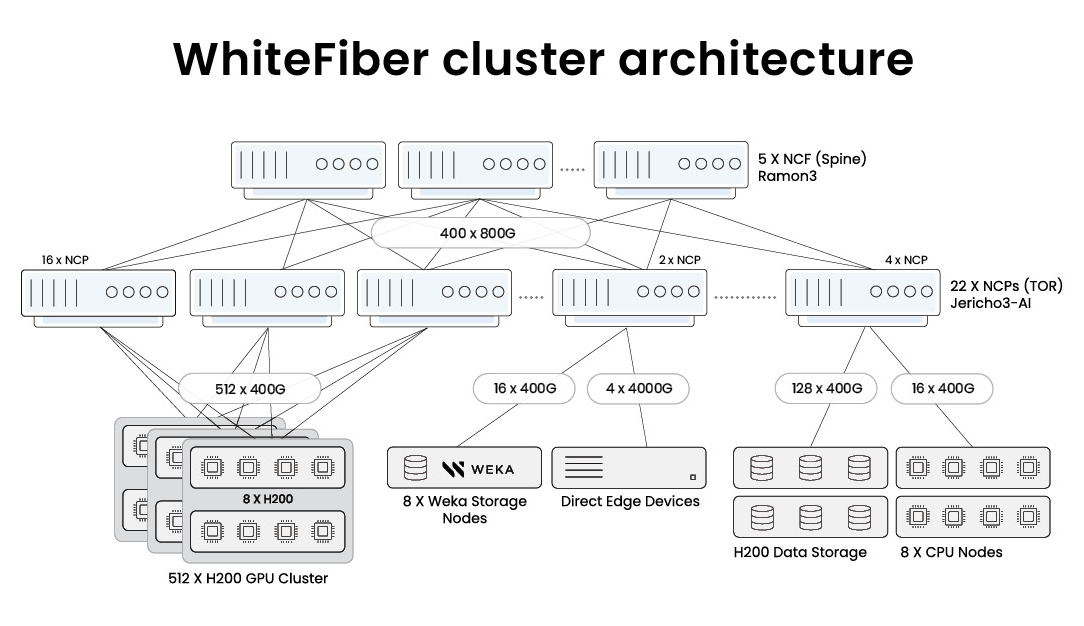

Deployment details

- Storage: 8 Weka storage nodes

- Hardware: cluster of 22 NCPs (top-of-rack (ToR) switches) and 5 NCFs (fabric switches), built with Accton NCP5 and NCF2 white boxes, powered by Broadcom Jericho3-AI and Ramon3 chipsets

- Compute: 512 Nvidia H200 GPUs and 8 CPU nodes

- Software: DriveNets Network Cloud software orchestrating and managing the entire cluster fabric

Results

WhiteFiber successfully met its performance and operational objectives with DriveNets Network Cloud-AI, achieving significant benefits:

- High performance: achieved validated JCT improvements over alternative Ethernet solutions

- Unified fabric: successfully integrated compute, storage, and edge devices under a single fabric, simplifying operations compared to traditional segmented AI infrastructures

- Rapid deployment: enjoyed high performance from day one, with minimal network fine-tuning, leading to accelerated time-to-value

- Open architecture: implemented a solution built on the widely recognized Ethernet protocol and compatible with any NIC, GPU, and optics hardware components

- Enhanced support: enjoyed DriveNets DIS team’s comprehensive involvement and end-to-end support, facilitating a smooth and rapid deployment as well as simplified integration

“We were able to deploy Network Cloud-AI with very short lead time and exceptionally fast installation time, getting our AI data center up and running quickly, which was critical to address rapidly growing market demand.”

Tom Sanfilippo, CTO, WhiteFiber

Summary

WhiteFiber realized that success as an AI infrastructure provider goes beyond simply placing GPUs in a data center. It requires delivering consistently high performance, effectively managing multiple tenants across multiple locations, and ensuring operational simplicity and efficiency. This pushed WhiteFiber to search for a more flexible and open solution without compromising performance.

DriveNets Network Cloud-AI provided a powerful Ethernet-based networking alternative to InfiniBand. It exceeded WhiteFiber’s performance goals, enabled rapid and simple deployment without vendor lock-in, and allowed storage and compute traffic to be unified onto a single network using its scheduled fabric architecture.

As a result, WhiteFiber improved performance across all available resources, simplified its operations, and gained the tools needed to support future growth, positioning itself as a leader in AI infrastructure delivery.

