White Papers

Fabric-Scheduled Ethernet guarantees lossless connectivity for a large-scale server array running high-bandwidth workloads free of flow discrimination and with minimal...

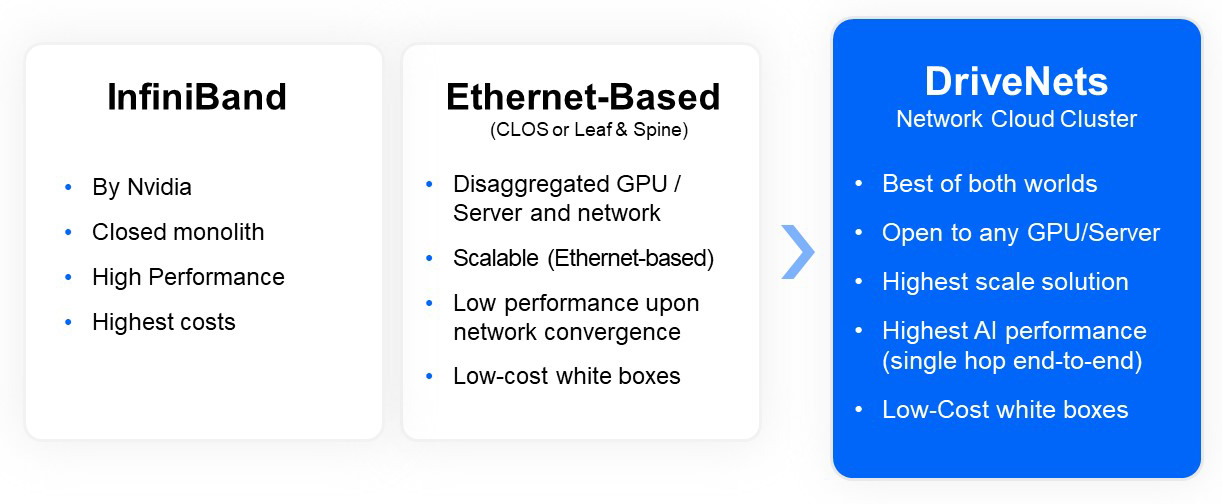

Connecting large GPU clusters for massive AI training is not simple. DriveNets’ AI fabric brings clear performance and scale advantages.

Traditionally, InfiniBand has been the technology of choice for AI fabrics, as it provides excellent performance for these kinds of applications.

InfiniBand drawbacks:

| Solutions | InfiniBand & others (Proprietary) | Clos Topology (Ethernet) | Clos with Enhanced Telemetry (Ethernet) | Single Chassis (Ethernet) | DDC, non-DriveNets (Ethernet) | DriveNets Network Cloud-AI (Ethernet) |
|---|---|---|---|---|---|---|
| Architectural Flexibility | Low: different technology for front-end and back-end | High | High | High | High | High: seamless internet connectivity; single technology for back-end and front-end; well-known protocol (600M Ethernet ports per year); supports multiple applications; supports growth; ASIC and ODM agnostic |
| Performance at Scale | High | Low: poor Clos performance | Low-Medium: medium Clos performance; poor chassis scalability | Low: poor chassis scalability | High | High: up to 32K x 800 Gbps; cell-based fabric; 10-30% improved job completion time (JCT), which may lead to 100% system ROI, as networking is 10% of the system cost |
| Trusted Ecosystem | Medium: closed solution; ASIC and HW vendor lock-in | Medium-High: typically not an open solution (vendor lock-in) | Medium: not an open solution (vendor lock-in) | Low: vendor lock-in | Low: not field proven; not open | High: based on a certified OCP concept; powering the world’s largest DDC network (>52% of AT&T); performance proven by US/CN hyperscalers |
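The ROI claim in the last column can be checked with back-of-the-envelope arithmetic. The sketch below is a minimal model and rests on an assumption that is not stated in the table. It treats an x% JCT improvement as roughly x% more useful work from the same system, so the gain is worth x% of the total system cost. Dividing that gain by the network's share of system cost gives the return on the network spend. The function name `network_roi` and this linear model are illustrative only and are not DriveNets' published method.

```python
def network_roi(jct_improvement: float, network_cost_share: float) -> float:
    """Value gained per unit of network spend under a simple linear model.

    Assumption (illustrative, not a vendor formula): a fractional JCT
    improvement is treated as roughly the same fraction of extra useful
    work from the whole system, i.e. a gain worth that fraction of the
    total system cost. Dividing by the network's share of system cost
    gives the return on the network investment.
    """
    if not 0 < network_cost_share <= 1:
        raise ValueError("network_cost_share must be in (0, 1]")
    return jct_improvement / network_cost_share


# Networking at 10% of system cost, as stated in the table.
for gain in (0.10, 0.30):
    roi = network_roi(gain, network_cost_share=0.10)
    print(f"{gain:.0%} better JCT -> {roi:.0%} return on network spend")
```

At the low end of the quoted range (10%), the gain equals the network cost, which is where the 100% figure comes from. Strictly, cutting JCT by a fraction x raises throughput by x/(1-x), which is slightly more than x. The linear model therefore understates the gain a little.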

The obvious alternative to InfiniBand is Ethernet. Yet Ethernet is lossy by nature: under congestion it drops packets and adds latency, so on its own it cannot provide adequate performance for large clusters.

Chassis or Clos AI fabric? What about both?

The Distributed Disaggregated Chassis for AI (DDC-AI) is the most proven architecture for building an open, congestion-free, Ethernet-based fabric for large-scale AI clusters.

Fabric-Scheduled Ethernet offers:

The best solution, in terms of both performance and cost, is Fabric-Scheduled Ethernet:

Ultra Ethernet, however, relies on algorithms running on the edges of the fabric, specifically on the smart network interface cards / controllers (SmartNICs) that reside in the GPU servers.

This means:

For instance, moving from the ConnectX-7 NIC (a more basic NIC, even though it is considered a SmartNIC) to the BlueField-3 SmartNIC (also called a data processing unit, or DPU) translates into a roughly 50% higher cost per end device and a threefold increase in power consumption.

The same applies to Spectrum-X, another InfiniBand alternative from Nvidia, which is based on the company’s Spectrum-4 and future Spectrum-6 ASICs.
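Because these approaches place the SmartNIC in every GPU server, the per-device premium is paid once per endpoint and grows linearly with cluster size. The sketch below expresses that premium in normalized units, with a ConnectX-7-class NIC counted as 1.0 for both cost and power. It uses only the ~1.5x cost and ~3x power ratios quoted above. The class `NicProfile`, the function `fleet_overhead`, and the cluster size of 1,000 servers with 8 NICs each are hypothetical placeholders, not vendor data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicProfile:
    """Per-NIC cost and power, normalized to a baseline NIC (= 1.0)."""
    relative_cost: float
    relative_power: float


# Normalized baseline: a ConnectX-7-class NIC.
BASIC_NIC = NicProfile(relative_cost=1.0, relative_power=1.0)
# BlueField-3-class DPU: ~50% higher cost and ~3x power, per the figures in the text.
DPU_NIC = NicProfile(relative_cost=1.5, relative_power=3.0)


def fleet_overhead(servers: int, nics_per_server: int,
                   base: NicProfile, upgraded: NicProfile) -> tuple[float, float]:
    """Extra cost and power (in baseline-NIC units) from upgrading every endpoint."""
    endpoints = servers * nics_per_server
    extra_cost = endpoints * (upgraded.relative_cost - base.relative_cost)
    extra_power = endpoints * (upgraded.relative_power - base.relative_power)
    return extra_cost, extra_power


# Hypothetical cluster: 1,000 GPU servers with 8 NICs each.
cost, power = fleet_overhead(1000, 8, BASIC_NIC, DPU_NIC)
print(f"Extra cost: {cost:,.0f} NIC-units; extra power: {power:,.0f} NIC-units")
```

The model shows that the premium scales with endpoint count: doubling the server count doubles both the extra cost and the extra power. This is the edge-side overhead that the text contrasts with scheduling performed inside the fabric.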
