Blog

AI workloads have moved beyond experimentation into deep production environments. Today, performance is no longer about peak FLOPS (floating-point operations...

Read more

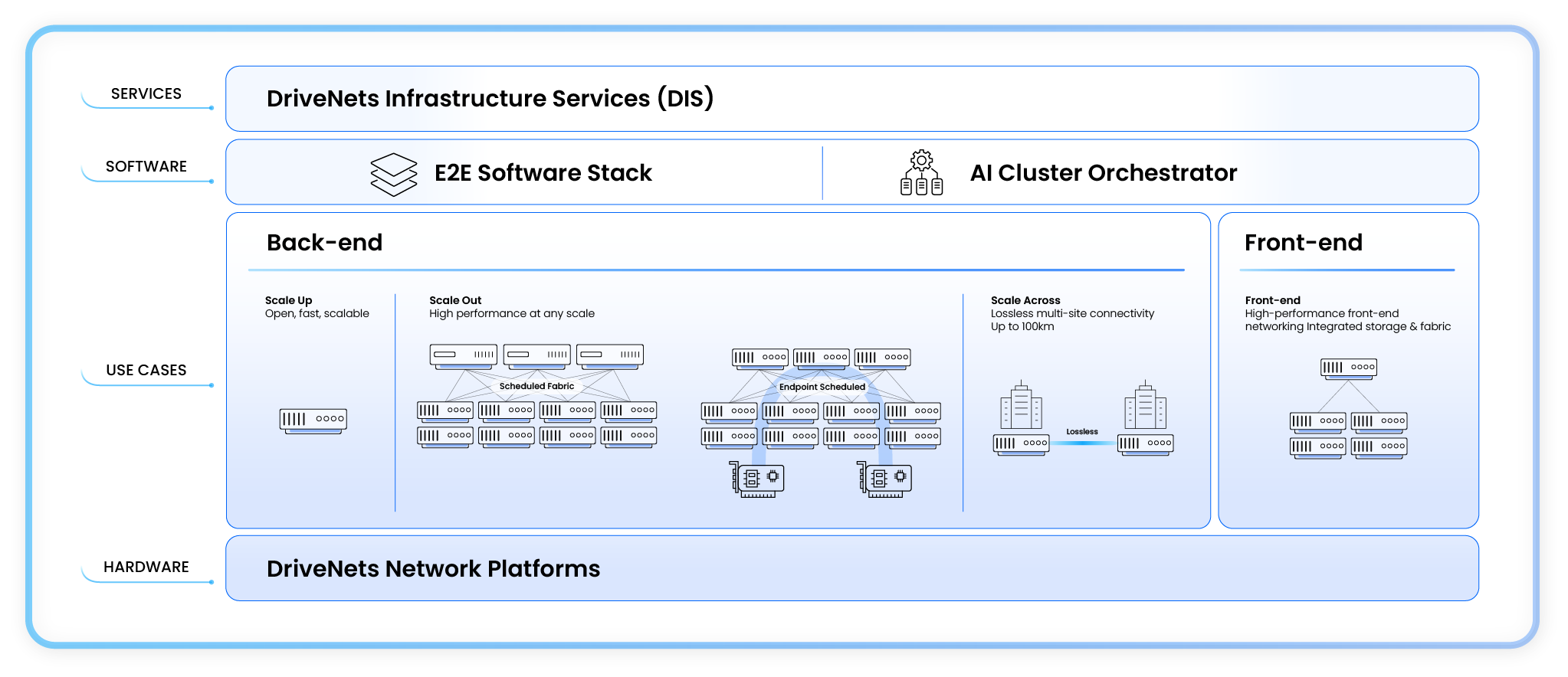

Highest-performance full-stack networking for AI Infrastructure: hardware, software, orchestration, and deployment/optimization services

[Table: Hardware Specifications]

DriveNets AI Fabric includes a solution for any part of the networking fabric, including:

DriveNets provides an end-to-end software stack, including:

- DNOS: a network operating system that runs on multiple hardware options
- AI Cluster Orchestrator: a lifecycle orchestration system with engines tailored for:

End-to-end professional services for any GPU fabric deployment, including:

- Bring-up services
- Software services

Case Studies

This case study explores WhiteFiber’s successful deployment of a large-scale AI cluster using DriveNets Network Cloud-AI as its back-end network...

Read more

White Papers

This paper outlines the networking challenges and key considerations for expanding AI clusters across geographically distributed sites....

Read more