CloudNets Video

What's the importance of latency in AI networks? AI networks introduce new challenges that need different treatments of latency....

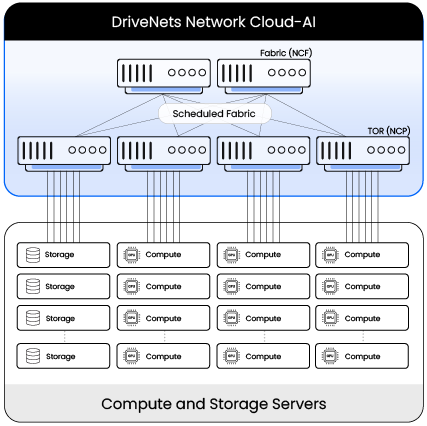

DriveNets Network Cloud-AI delivers high-performance, scalable, and open networking solutions designed to meet the demands of AI workloads, offering an Ethernet-based alternative to InfiniBand. Its Fabric Scheduled Ethernet (FSE) architecture provides a lossless, low-tail-latency, scalable fabric that efficiently interconnects any number of AI accelerators. The same Ethernet-based fabric can unify the compute and storage networks for the highest performance, efficiency, and simplicity. With bandwidth ranging from 100 Gbps up to 800 Gbps and a clear evolution path to 1.6 Tbps and beyond, the DriveNets solution ensures support for future AI fabric requirements.
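Why tail latency, rather than average latency, matters: in a synchronous collective step (for example, an all-reduce), every GPU waits for the slowest flow before the next step can begin. The sketch below assumes a simplified, hypothetical step model in which step time equals compute time plus the maximum flow completion time; the function names and latency values are illustrative, not measurements.

```python
import statistics


def step_time_us(compute_us: float, flow_latencies_us: list[float]) -> float:
    """Hypothetical model: a synchronous step ends when the slowest flow ends."""
    return compute_us + max(flow_latencies_us)


def gpu_idle_fraction(compute_us: float, flow_latencies_us: list[float]) -> float:
    """Fraction of each step a GPU spends waiting on the network (illustrative)."""
    total = step_time_us(compute_us, flow_latencies_us)
    return (total - compute_us) / total


# Two illustrative sets of 8 flows with the same mean latency (10 us);
# the second has one slow "tail" flow.
uniform = [10.0] * 8
with_tail = [8.0] * 7 + [24.0]

for name, flows in [("uniform", uniform), ("with_tail", with_tail)]:
    print(name,
          "mean:", statistics.mean(flows),
          "step:", step_time_us(100.0, flows),
          "idle:", round(gpu_idle_fraction(100.0, flows), 3))
```

Both flow sets average 10 µs, yet the single slow flow stretches the step from 110 µs to 124 µs, so reducing the tail, not just the mean, is what shortens job completion time in this model.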

Network Cloud-AI Highlights:

AI enterprise backend networks demand exceptional performance and scalability to support the growing reliance on AI-driven workloads and data-intensive applications. DriveNets Network Cloud-AI addresses these needs with a distributed, scheduled fabric architecture: scalable, cost-effective, and optimized for AI workloads, it fits all enterprise use cases, including financial research, life science and pharmaceutical research, automotive, energy and utilities, and higher education. Its lossless Fabric Scheduled Ethernet technology, based on a distributed, disaggregated architecture, enables enterprises to adapt seamlessly to evolving AI demands while ensuring high performance, no vendor lock-in, operational simplicity, and the ability to innovate without the constraints of proprietary network designs.

NeoCloud/GPUaaS infrastructure, whether purpose-built or repurposed from crypto mining, requires exceptional scalability, flexibility, and multi-site and multi-tenant support to accommodate diverse cloud-native AI workloads. DriveNets Network Cloud-AI delivers these capabilities through its innovative scheduled-fabric architecture, optimized for AI-driven environments. By hosting multiple tenants without fine-tuning, isolating resources between tenants to avoid the noisy-neighbor effect, and supporting remote, multi-site deployments, it simplifies operations while providing consistent performance. Built on lossless scheduled Ethernet technology and a distributed, disaggregated design, DriveNets empowers NeoCloud providers to scale effortlessly and innovate without the limitations of traditional network infrastructures.
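One generic way to illustrate tenant isolation is max-min fair sharing of a congested link: a tenant demanding far more than its fair share cannot push other tenants below theirs. The sketch below is a textbook water-filling allocation used as an assumed illustration; it is not a description of how DriveNets schedules traffic internally, and the tenant names and demands are hypothetical.

```python
def max_min_fair(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Water-filling max-min fair allocation of `capacity` among tenants."""
    alloc = {t: 0.0 for t in demands}
    remaining = dict(demands)  # unmet demand per tenant
    cap_left = capacity
    while remaining and cap_left > 1e-12:
        share = cap_left / len(remaining)
        # Tenants whose remaining demand fits in an equal share are fully served.
        satisfied = {t: d for t, d in remaining.items() if d <= share}
        if satisfied:
            for t, d in satisfied.items():
                alloc[t] += d
                cap_left -= d
                del remaining[t]
        else:
            # Every remaining tenant wants more than an equal share:
            # split the leftover capacity evenly and stop.
            for t in remaining:
                alloc[t] += share
            cap_left = 0.0
            remaining.clear()
    return alloc


# Hypothetical demands in Gbps on a 400 Gbps link.
demands_gbps = {"tenant_a": 50.0, "tenant_b": 100.0, "noisy_tenant": 1000.0}
print(max_min_fair(400.0, demands_gbps))
# -> {'tenant_a': 50.0, 'tenant_b': 100.0, 'noisy_tenant': 250.0}
```

The small tenants receive their full demand, and the noisy tenant is capped at the leftover 250 Gbps instead of crowding the others out.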

AI hyperscaler networks demand unparalleled scalability, performance, and simplicity to support very-large-scale GPU clusters. DriveNets Network Cloud-AI rises to this challenge with a software-driven architecture that supports extremely large GPU clusters, leveraging Ethernet technology to ensure seamless integration and operation. Unlike traditional solutions, DriveNets eliminates the need for specialized knowledge among technical staff, simplifying deployment and management. Its easy-to-scale, distributed, disaggregated design enables hyperscalers to expand capacity effortlessly while maintaining optimal performance and efficiency, empowering innovation without the limitations of proprietary or complex networking systems.

Best-performance solution for hyperscaler AI fabrics

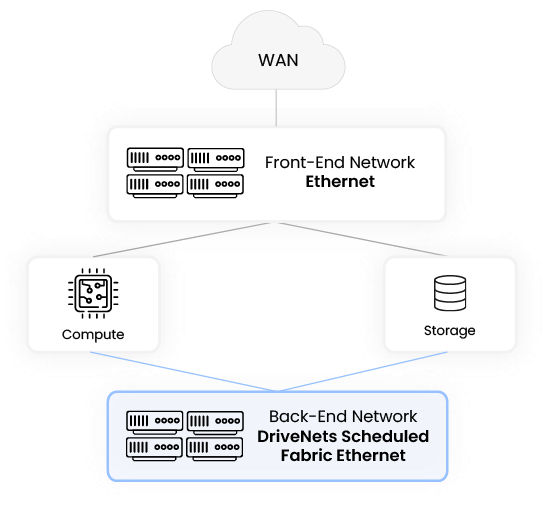

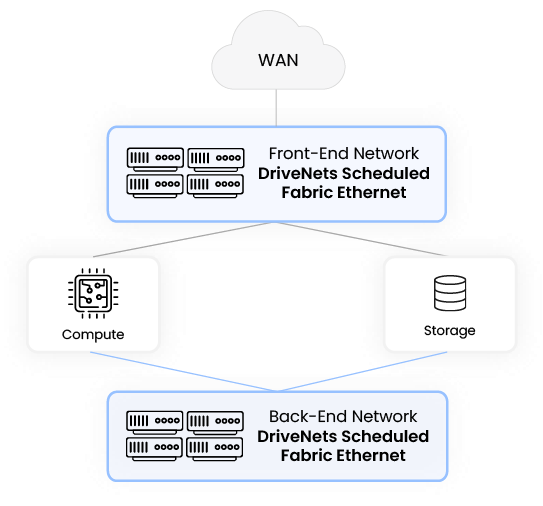

Backend networking in AI clusters refers to the interconnect infrastructure that facilitates internal communication between AI accelerators (such as GPUs) within a data center. It is a critical part of the AI cluster infrastructure because it handles the performance-sensitive traffic that enables efficient parallel processing and data sharing.

DriveNets Network Cloud-AI transforms backend networking by combining the flexibility of Ethernet with the high-performance characteristics required for AI workloads. Unlike traditional Ethernet solutions, Network Cloud-AI leverages Fabric Scheduled Ethernet, an advanced architecture that ensures lossless, predictable network performance while optimizing load balancing and latency. By eliminating packet loss and minimizing GPU idle time, DriveNets Network Cloud-AI optimizes job completion time (JCT), outperforming both standard Ethernet and proprietary InfiniBand technologies. This next-generation approach enables seamless scalability, cost efficiency, and superior AI workload acceleration, making it the ideal choice for AI-driven data centers.
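The difference between push-based Ethernet and a scheduled fabric can be illustrated with a toy incast model. In the assumed scheduled case, each ingress port holds traffic in a virtual output queue (VOQ) and transmits only when the egress port has free buffer space (a "credit"), so the egress buffer never overflows; in the unscheduled case every sender pushes at line rate and arrivals to a full buffer are dropped. The credit/VOQ mechanism, the per-tick model, and every parameter here are simplifying assumptions for illustration, not DriveNets' implementation.

```python
# Toy per-tick incast model (illustrative assumptions only):
# - `senders` ingress ports all target a single egress port.
# - Each sender transmits at most one packet per tick (its line rate).
# - The egress drains `egress_rate` packets per tick from a buffer that
#   holds `egress_buffer` packets.
# - scheduled=True: a sender transmits only if the egress buffer has room;
#   otherwise the packet waits in that sender's VOQ.
# - scheduled=False: senders push regardless; arrivals to a full buffer drop.


def simulate_incast(senders: int, packets_per_sender: int, egress_rate: int,
                    egress_buffer: int, scheduled: bool) -> tuple[int, int, int]:
    """Return (delivered, dropped, ticks) for the toy incast scenario."""
    if egress_rate < 1:
        raise ValueError("egress_rate must be at least 1 packet per tick")
    backlog = [packets_per_sender] * senders  # per-sender VOQ depth, in packets
    buffer_used = delivered = dropped = ticks = 0
    while any(backlog) or buffer_used:
        start = ticks % senders  # rotate the first sender for round-robin fairness
        ticks += 1
        for i in range(senders):
            s = (start + i) % senders
            if backlog[s] == 0:
                continue
            if scheduled and buffer_used >= egress_buffer:
                break  # no credit left: remaining packets stay queued at ingress
            backlog[s] -= 1  # the sender transmits one packet this tick
            if buffer_used < egress_buffer:
                buffer_used += 1
            else:
                dropped += 1  # only reachable in the unscheduled case
        sent = min(egress_rate, buffer_used)  # egress drains up to its rate
        buffer_used -= sent
        delivered += sent
    return delivered, dropped, ticks


for mode in (False, True):
    d, drop, t = simulate_incast(senders=8, packets_per_sender=100,
                                 egress_rate=1, egress_buffer=16, scheduled=mode)
    print(f"scheduled={mode}: delivered={d} dropped={drop} ticks={t}")
```

With these parameters the unscheduled run delivers 115 of 800 packets and drops 685, while the scheduled run delivers all 800 with no drops. The egress drains one packet per tick in both cases, so scheduling does not create capacity; it moves queuing back to ingress instead of discarding packets. The unscheduled case models best-effort Ethernet without priority flow control (PFC).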

Frontend networking in AI/HPC clusters refers to the network infrastructure that manages external data traffic between AI workloads and users, applications, or other services. It connects the AI cluster to the broader data center, cloud services, or enterprise systems. Frontend networking must provide high bandwidth, low latency, and secure connectivity to ensure seamless interaction between AI models and end-users or business applications.

Storage networking in AI clusters handles the massive data transfers between AI compute nodes and external storage systems. For AI workloads, unlike typical HPC implementations, this is critical infrastructure: the traffic is intense, and an underperforming storage fabric results in poor overall workload performance.

DriveNets Network Cloud-AI provides a unified solution for both the frontend and storage fabrics, sharing the same Ethernet-based technology, architecture, and implementation with the backend fabric.
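A back-of-the-envelope calculation shows why storage fabric throughput matters: the time to move a checkpoint or dataset shard scales inversely with usable bandwidth, and GPUs waiting on that transfer sit idle. The 1 TB checkpoint size and the 90% efficiency factor below are hypothetical assumptions; the link speeds span the 100 Gbps to 800 Gbps range cited on this page, and the calculation treats a single link.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 0.9) -> float:
    """Seconds to move `size_gb` gigabytes over a `link_gbps` gigabit/s link.

    `efficiency` is an assumed fraction of line rate actually achieved
    after protocol overhead.
    """
    size_gbits = size_gb * 8  # gigabytes -> gigabits
    return size_gbits / (link_gbps * efficiency)


checkpoint_gb = 1000.0  # hypothetical 1 TB checkpoint
for gbps in (100, 200, 400, 800):
    print(f"{gbps:>4} Gbps: {transfer_seconds(checkpoint_gb, gbps):7.1f} s")
```

Under these assumptions the same checkpoint takes roughly 89 s at 100 Gbps but about 11 s at 800 Gbps, time during which dependent compute may be stalled.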
