But this is true not only for NeoCloud providers – all enterprises and AI developers are in a frantic sprint to deploy AI capabilities before their rivals. This has turned the rapid deployment of AI GPU clusters into a critical demand.

And while getting the hardware in place is one thing, it’s the network that often causes delays. Complex configurations and endless tweaking can turn network deployment into a slow and painful process.

Achieve high-performance AI clusters

So, how can we achieve high-performance AI clusters with the fastest deployment time? The answer lies in understanding and solving three key problems in AI networking:

- Load balancing: Distributing traffic evenly across thousands of GPUs is crucial for maximizing utilization and cluster performance. Poor load balancing can lead to overloaded GPUs while others remain idle, wasting valuable resources. Achieving optimal load balancing that adapts to the dynamic nature of AI workloads is challenging, but when done right, it can proactively reduce congestion and improve efficiency and performance.

- Congestion: AI workloads produce massive, bursty data flows with complex traffic patterns that pose significant management challenges. A common example is when multiple GPUs simultaneously send data to a single destination (incast), overwhelming network switches and resulting in delays and reduced GPU efficiency. Preventing congestion ensures smooth data flow and maximized cluster performance.

- Traffic isolation: In multi-tenant environments, or when storage and compute traffic share the same fabric, isolating traffic is essential. This prevents one element from impacting the performance of others – for example, the noisy neighbor effect. Achieving effective traffic isolation requires granular control over network resources and sophisticated quality-of-service (QoS) mechanisms.

While these aren’t the only challenges facing AI workload builders and operators, they represent key time-consuming issues that can lead to endless tuning – a problem that is especially common in multi-tenant environments.
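To make the incast pattern described above more concrete, here is a back-of-the-envelope sketch in Python. The function name, link speeds, and burst duration are illustrative assumptions, not figures from any specific deployment. It only estimates how much data piles up when several senders burst toward one egress port faster than that port can drain.

```python
def incast_backlog(senders, sender_gbps, egress_gbps, burst_ms):
    """Estimate the backlog created by a synchronized burst toward one port.

    Returns (offered_gbps, backlog_megabytes). All inputs are hypothetical.
    """
    offered_gbps = senders * sender_gbps
    # Only traffic above the egress drain rate accumulates in buffers.
    excess_gbps = max(0.0, offered_gbps - egress_gbps)
    # Gbit/s multiplied by milliseconds gives megabits; divide by 8 for megabytes.
    backlog_mb = excess_gbps * burst_ms / 8
    return offered_gbps, backlog_mb


# Eight GPUs bursting at 400 Gbps toward a single 400 Gbps port for 1 ms.
print(incast_backlog(8, 400, 400, 1.0))  # (3200, 350.0)
```

Under these assumed numbers, a one-millisecond burst leaves roughly 350 MB that must be buffered, paused, or dropped somewhere in the network, which is why incast needs to be prevented rather than absorbed.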

Known AI infrastructure industry solutions

When it comes to building AI infrastructure, NeoCloud providers and other enterprises have a number of options:

Traditional Ethernet

Ethernet’s familiarity and cost-effectiveness attract many enterprises and NeoClouds. However, standard Clos networks, basic congestion and flow control mechanisms (e.g., ECN, PFC), and hash-based load balancing (e.g., ECMP) are inadequate for the dynamic demands of AI clusters. Even powerful chassis-based solutions cannot scale to meet the demands of modern AI infrastructure with its massive GPU counts.
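The weakness of per-flow hashing can be illustrated with a short sketch. It assumes a CRC32 hash over the flow 5-tuple, which is a stand-in chosen for illustration (real switches use vendor-specific hash functions), and the addresses, ports, and rates are made up. The only protocol detail taken as fact is that RoCEv2 traffic uses UDP destination port 4791.

```python
import zlib


def ecmp_link(flow, num_links):
    """Pick an uplink from a hash of the flow 5-tuple (stable for a given flow)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_links


def link_loads(flows, num_links):
    """Sum the offered Gbps that lands on each uplink."""
    loads = [0.0] * num_links
    for flow, gbps in flows:
        loads[ecmp_link(flow, num_links)] += gbps
    return loads


# Twelve long-lived 100 Gbps flows (hypothetical GPUs) hashed onto 8 uplinks.
flows = [
    ((f"10.0.0.{i}", f"10.0.1.{i}", 17, 50000 + i, 4791), 100.0)
    for i in range(12)
]
loads = link_loads(flows, num_links=8)
mean_load = sum(loads) / len(loads)
print("per-link Gbps:", loads)
print("max/mean ratio:", max(loads) / mean_load)
```

With 12 equal flows and 8 links, at least one link has to carry two or more flows no matter how good the hash is, while the average is only 1.5 flows per link. AI training traffic tends to consist of a small number of very large flows, so this kind of imbalance is the common case rather than the exception.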

Advanced proprietary solutions

The challenges of AI are not new; the HPC market has struggled with similar issues for many years. Nvidia’s InfiniBand was previously the dominant solution, offering exceptional performance. However, it lacks standard Ethernet support, has limited multi-tenant capabilities, and requires complex, ongoing tuning and configuration by specialized teams to achieve optimal performance.

Recognizing these limitations, Nvidia has been promoting Spectrum-X, which delivers high performance while supporting Ethernet, traffic isolation, load balancing, and congestion control. While a significant improvement, Spectrum-X still relies on overlay ECMP for traffic isolation and on RoCEv2, which again necessitates complex configurations.

The Ultra Ethernet Consortium (UEC) aims to address the drawbacks of the RoCEv2 network protocol and streamline deployment, but its solution is not yet available.

Scheduled fabric

NeoCloud providers and other enterprises that build large-scale AI clusters can already use a lossless and predictable Ethernet solution with a Disaggregated Distributed Chassis (DDC) scheduled fabric. This solution effectively addresses the three challenges mentioned earlier by using:

- Cell spray: providing load balancing by distributing traffic evenly across the fabric, preventing bottlenecks, and ensuring all GPUs have access to necessary data

- End-to-end virtual output queuing (E2E VOQ): preventing congestion and isolating traffic
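The idea behind cell spray can be sketched in a few lines. This is a simplified model, not the DDC implementation: the function name and the 256-byte cell size are assumptions for illustration, and the reordering and reassembly that a real fabric performs at the egress side are not modeled. Unlike per-flow hashing, which pins each flow to one path, every packet is cut into cells that are spread round-robin over all fabric links.

```python
def spray_cells(packet_sizes, num_links, cell_bytes=256):
    """Cut packets into cells and spread them round-robin over fabric links.

    Returns the number of cells sent on each link. cell_bytes is hypothetical.
    """
    cells_per_link = [0] * num_links
    next_link = 0
    for size in packet_sizes:
        num_cells = -(-size // cell_bytes)  # ceiling division
        for _ in range(num_cells):
            cells_per_link[next_link] += 1
            next_link = (next_link + 1) % num_links
    return cells_per_link


# Two 9000-byte packets and one 64-byte packet over four fabric links.
print(spray_cells([9000, 9000, 64], num_links=4))  # [19, 18, 18, 18]
```

Because links are chosen per cell rather than per flow, the cell counts on any two links differ by at most one, regardless of how large or how few the flows are.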

The combination of E2E VOQ and cell spray creates a plug-and-play network environment with predictable and lossless connections. Because cell spray and E2E VOQ are inherent to a scheduled fabric, it does not need the complex tuning and ongoing configuration that other solutions typically require.
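A minimal sketch of the virtual output queuing idea follows. It is a toy model under stated assumptions, not the actual scheduler: the class and function names, the per-slot credit counts, and the simple grant order (which always starts from the first ingress) are all hypothetical. Each ingress keeps a separate queue per destination port, and cells leave an ingress only when the destination grants a credit, so an incast toward one port stays queued at the ingress side and does not delay traffic headed elsewhere.

```python
from collections import deque


class Ingress:
    """Ingress device holding one virtual output queue (VOQ) per destination."""

    def __init__(self, name):
        self.name = name
        self.voqs = {}  # destination port -> deque of waiting cells

    def enqueue(self, dest, seq):
        self.voqs.setdefault(dest, deque()).append((self.name, dest, seq))

    def has_traffic(self, dest):
        return bool(self.voqs.get(dest))

    def dequeue(self, dest):
        return self.voqs[dest].popleft()


def schedule_slot(ingresses, egress_credits):
    """Run one slot: each egress grants at most its credit count in cells."""
    delivered = {dest: [] for dest in egress_credits}
    for dest, credits in egress_credits.items():
        while credits > 0:
            requesters = [ing for ing in ingresses if ing.has_traffic(dest)]
            if not requesters:
                break
            for ing in requesters:  # one grant per requester per pass
                if credits == 0:
                    break
                delivered[dest].append(ing.dequeue(dest))
                credits -= 1
    return delivered


# Three ingresses burst toward gpu0 (incast); in0 also has traffic for gpu1.
ingresses = [Ingress(f"in{i}") for i in range(3)]
for ing in ingresses:
    for seq in range(4):
        ing.enqueue("gpu0", seq)
for seq in range(2):
    ingresses[0].enqueue("gpu1", seq)

delivered = schedule_slot(ingresses, {"gpu0": 2, "gpu1": 2})
print(len(delivered["gpu0"]), "cells to gpu0;", len(delivered["gpu1"]), "cells to gpu1")
```

In this example gpu0 receives only the two cells it granted, the remaining ten wait in ingress VOQs, and both gpu1 cells are delivered in the same slot even though they share an ingress with the congested gpu0 traffic.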

DriveNets AI infrastructure – no tuning required

The new AI boom has pushed NeoCloud providers and other enterprises into a race to deploy AI clusters quickly. After overcoming the availability constraints of GPUs and optics, these organizations realize that interconnecting large-scale GPU clusters requires advanced networking solutions that demand complex, ongoing tuning and configuration. Although legacy solutions can deliver the required performance, they still require time-consuming tuning by skilled teams.

Scheduled fabric, on the other hand, offers the required solution in a timely manner. DriveNets Network Cloud-AI, based on DDC’s scheduled fabric, uses cell spray and E2E VOQ to offer a high-performance AI networking fabric out of the box, without complex tuning or additional hardware.

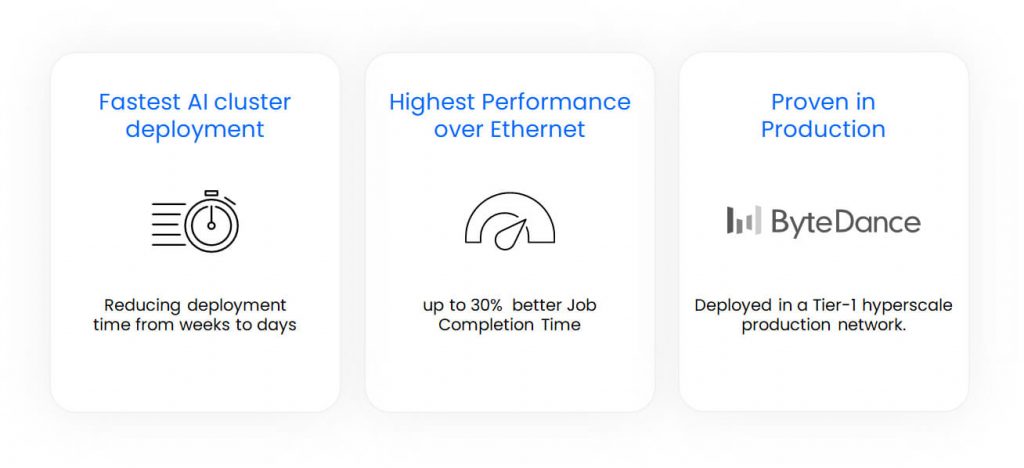

DriveNets Network Cloud-AI enables the fastest deployment of AI clusters – reducing deployment time from weeks to days. On top of that, it delivers up to a 30% improvement in job completion time (JCT) performance over Ethernet, as proven in production with a Tier-1 hyperscale network.

Additional NeoCloud GPU-as-a-Service (GPUaaS) Infrastructure Resources

- NeoCloud Case Study: Highest-Performance Unified Fabric for Compute, Storage and Edge

- Scaling AI Workloads Over Multiple Sites through Lossless Connectivity

- Resolving the AI Back-End Network Bottleneck with Network Cloud-AI

- Meeting the Challenges of the AI-Enabled Data Center: Reduce Job Completion Time in AI Clusters

- How is DriveNets revolutionizing network infrastructure for AI and service providers?

- Why InfiniBand Falls Short of Ethernet for AI Networking

- Fastest AI Cluster Deployment Now a New Industry Requirement

- CloudNets-AI – Performance at Scale
