However, building an AI cluster involves more than just stacking GPUs. It requires deep expertise in compute architecture, lossless networking, and optimized storage, all seamlessly integrated to deliver peak performance. An AI cluster is a strategic investment that must be carefully and correctly architected.

What are the challenges behind GPU cluster deployment?

A typical AI or HPC cluster includes hundreds to hundreds of thousands of GPUs, spread across compute nodes and linked by high-bandwidth, low-latency networks. While it may sound straightforward, this introduces multiple challenges:

- Complicated networking: Modern AI workloads are communication-intensive and require predictable, lossless connectivity between GPUs. This often drives organizations toward either proprietary solutions such as InfiniBand or high-bandwidth Ethernet fabrics. Any packet loss or retransmission slows the job down and leaves GPUs idle, which is a very expensive inefficiency.

- Storage and data pipelines: Feeding data fast enough to keep GPUs utilized is critical. Traditional storage and transmission solutions often can’t keep up, leading to idle GPU time and reduced performance.

- Power and space: Large GPU clusters are power-hungry and require a lot of space. These constraints can influence and limit data center design and location considerations while increasing costs.

- Vendor lock-in: To avoid complexity, many organizations turn to proprietary, single-vendor solutions. While this may seem simpler upfront, it often leads to higher costs, reduced flexibility, and a dependency on one vendor’s roadmap.

- Multi-tenancy: In shared environments, like research labs or AI-as-a-service offerings, tenancy isolation is critical. Preventing one user’s demanding job from impacting other jobs is a major architectural challenge.
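To make the storage and data pipeline point concrete, here is a rough back-of-the-envelope sketch in Python. It estimates the aggregate read throughput needed to keep every GPU fed, and the fraction of time GPUs can stay busy when storage delivers less than that. The function names and all of the numbers are hypothetical, chosen only for illustration, and the model assumes input delivery is the only bottleneck.

```python
def required_read_throughput(num_gpus: int,
                             samples_per_sec_per_gpu: float,
                             bytes_per_sample: float) -> float:
    """Aggregate storage read rate (bytes/s) needed so no GPU waits on input."""
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample


def gpu_busy_fraction(required_bps: float, delivered_bps: float) -> float:
    """Fraction of time GPUs can stay busy if input delivery is the only bottleneck."""
    if required_bps <= 0:
        return 1.0
    return min(1.0, delivered_bps / required_bps)


# Hypothetical cluster and dataset figures (assumptions, not measurements).
need = required_read_throughput(num_gpus=1024,
                                samples_per_sec_per_gpu=500,
                                bytes_per_sample=150_000)
print(f"required read throughput: {need / 1e9:.1f} GB/s")
print(f"GPU busy fraction at 40 GB/s delivered: {gpu_busy_fraction(need, 40e9):.2f}")
```

Real pipelines add caching, prefetching, and preprocessing costs, so this is only a first-order sizing check.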

Given this complexity, it’s no surprise that enterprises often seek external expertise when planning or deploying GPU clusters. A poorly designed AI cluster can lead to higher costs, project delays, and most importantly, underperforming workloads that fail to deliver on their strategic promise.

Where’s the real bottleneck? It’s the network, stupid!

There’s no one-size-fits-all blueprint for building large-scale AI infrastructure. The right architecture depends on many factors—workload types, model complexity, deployment environment, and performance goals. Each approach comes with its own trade-offs in flexibility, scalability, and costs.

Successfully navigating these choices requires deep infrastructure and workload engineering expertise. While compute and storage are critical, networking often proves to be the most important—and the most complex—piece of the puzzle. It’s the layer that interconnects everything else: compute nodes, storage systems, and data pipelines. And because AI workloads are extremely communication-intensive, even small inefficiencies in the network can ripple across the entire system, impacting training speed, GPU utilization, and overall performance. Unlike other components, networking can’t be adjusted or scaled independently after deployment—it must be right from the start.
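A simplified model shows how a network inefficiency can translate into lost GPU utilization. The sketch below uses the standard bandwidth-only cost of a ring all-reduce, 2(N-1)/N × S / B, and assumes that communication does not overlap with compute and that latency is negligible. Both are simplifications. The payload size, compute time, and link speed are hypothetical values, not measurements from any deployment.

```python
def ring_allreduce_seconds(num_gpus: int, payload_bytes: float,
                           link_bytes_per_sec: float) -> float:
    """Bandwidth-only cost of a ring all-reduce: 2 * (N - 1) / N * S / B."""
    if num_gpus < 2:
        return 0.0
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes / link_bytes_per_sec


def gpu_utilization(compute_sec: float, comm_sec: float) -> float:
    """Share of a training step spent computing, assuming no compute/communication overlap."""
    return compute_sec / (compute_sec + comm_sec)


# Hypothetical step: 10 GB of gradients, 0.5 s of compute, 400 Gb/s links (50 GB/s).
for efficiency in (1.0, 0.8, 0.5):  # fraction of line rate actually achieved
    comm = ring_allreduce_seconds(512, 10e9, 50e9 * efficiency)
    print(f"link efficiency {efficiency:.0%}: "
          f"GPU utilization {gpu_utilization(0.5, comm):.2f}")
```

In practice, frameworks overlap communication with compute and use other collective algorithms, so actual utilization will differ. The point of the sketch is only that achieved bandwidth feeds directly into step time.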

What is DriveNets Infrastructure Services (DIS)?

This is where DriveNets Infrastructure Services (DIS) comes in. The DIS team offers expert-driven services designed to handle the unique demands of building AI and HPC infrastructure. DIS experts offer end-to-end support, from initial architecture design to full operational enablement.

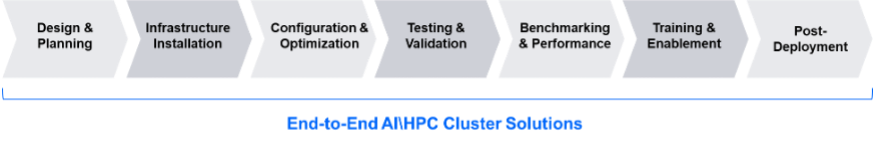

Leveraging the industry’s highest-performance Ethernet-based AI/HPC networking solution, the DIS team supports every stage of your infrastructure lifecycle:

- Design and architecture: DIS experts assist with selecting the optimal networking architecture and components based on customer-specific workloads and performance goals.

- Procurement and sourcing: The DIS team draws on DriveNets’ ecosystem of trusted partners to secure the right hardware and software for each customer’s needs.

- Deployment and tuning: The DIS team takes full ownership of installation, integration, and performance optimization—including the configuration of advanced, lossless network fabrics.

- Enablement and training: The DIS team equips the customer’s team with the knowledge needed to manage, operate, and scale the environment independently.

Backed by DriveNets’ deep networking expertise and proven experience in some of the world’s largest AI deployments, DIS experts help enterprises and NeoClouds accelerate time-to-value, reduce risk, and unlock the full potential of their strategic infrastructure.

How can you build smarter AI Infrastructure right from the start?

Building AI infrastructure is about more than just stacking GPUs. It requires smart architecture and expert execution. In a competitive landscape where performance and time-to-market are everything, going it alone is a significant risk.

Whether you are building your first AI cluster or scaling to your next one, the DriveNets DIS team helps ensure your AI infrastructure is optimized right from the start.

Key Takeaways

- AI clusters require more than just GPUs—networking, storage, and infrastructure must work in sync to avoid performance bottlenecks.

- Traditional Ethernet networks can slow down AI training due to latency, congestion, and packet loss.

- Power and space limitations are major constraints when scaling large AI clusters in data centers.

- Vendor lock-in limits flexibility and scalability, making open, standards-based solutions more attractive for long-term growth.

- DriveNets Infrastructure Services (DIS) helps organizations design, deploy, and scale AI clusters more efficiently with end-to-end support.

Frequently Asked Questions

- What makes AI cluster deployment so challenging?

It involves complex networking, data bottlenecks, power and space constraints, and the risk of vendor lock-in.

- How can I avoid performance bottlenecks in AI clusters?

Use a lossless, high-throughput network fabric and ensure your data pipeline can keep up with GPU processing speeds.

- What is DriveNets Infrastructure Services (DIS)?

DIS offers end-to-end support (design, sourcing, deployment, and training) for streamlined AI cluster rollouts.
