Embracing Open AI Infrastructure with DriveNets

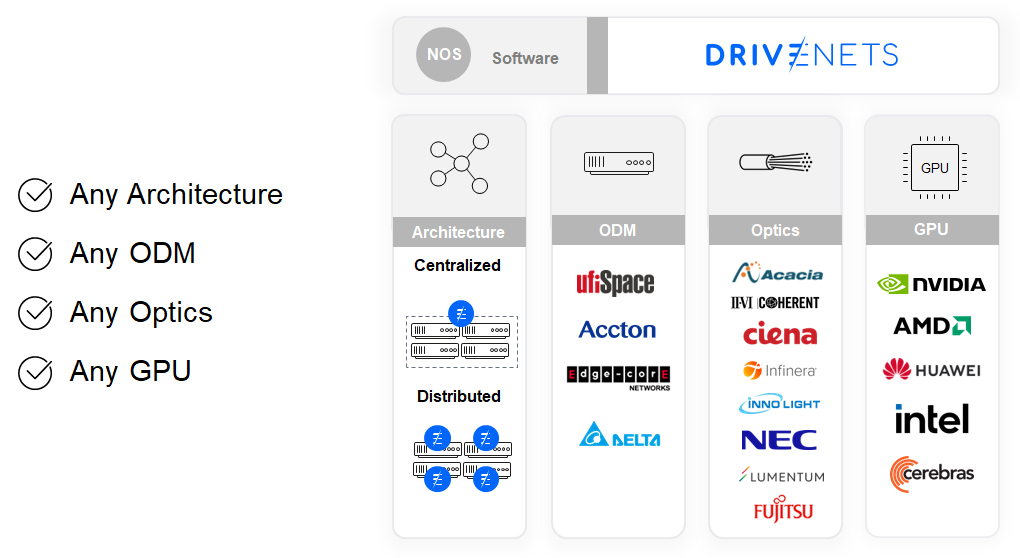

DriveNets Network Cloud-AI offers an alternative with its open standard architecture, enabling organizations to break free from traditional constraints and reduce risk with supply chain diversity. Here’s how DriveNets fosters an open AI infrastructure…

Hardware Flexibility

DriveNets provides unmatched flexibility, allowing hyperscalers and enterprises to customize their infrastructure. DriveNets enables:

- GPU, ASIC, and ODM agnosticism: supporting multiple Ethernet NICs/DPUs, diverse GPUs/accelerators, and varied networking ASICs from multiple white box ODM providers, ensuring cost efficiency and scalability

- Diverse optic choices: compatible with optics from multiple vendors (e.g., Fujitsu, Acacia, Ciena, Infinera and others), mitigating supply chain bottlenecks and ensuring consistent delivery timelines

Supply Chain Diversity

DriveNets’ open ecosystem promotes supply chain diversity, reducing reliance on single vendors and enabling architectural flexibility through multiple hardware sources. By adhering to the Open Compute Project (OCP) Distributed Disaggregated Chassis (DDC) specification, the DriveNets platform ensures true openness and interoperability.

Ethernet: New Standard for AI Networking

As AI and high-performance computing (HPC) workloads continue to evolve, Ethernet is emerging as the dominant AI networking solution. DriveNets is at the forefront of this transformation, as evidenced by its membership in the Ultra Ethernet Consortium (UEC) and commitment to open, multi-vendor Ethernet solutions.

Benefits of this shift include:

- Interoperability: Standardized architectures eliminate vendor lock-in.

- Simplified deployment: Ethernet-based solutions reduce complexity, enabling faster time-to-market (TTM) and time-to-deployment (TTD). They also draw on a widely available skillset, eliminating the need for specialized InfiniBand expertise.

- Improved job completion time (JCT): DriveNets Network Cloud-AI delivers up to a 30% improvement in JCT compared with standard Ethernet, as proven in production with a leading research firm network.
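To make the JCT figure concrete, here is a minimal Python sketch of the arithmetic. It assumes that "improvement" means a proportional reduction in completion time, which is one common reading and not something the claim above spells out. The function name `improved_jct` and the 100-hour baseline are hypothetical, chosen only for illustration.

```python
def improved_jct(baseline_hours: float, improvement: float) -> float:
    """Return the job completion time after a fractional reduction.

    Assumption: a 30% improvement is read as 30% less wall-clock time.
    """
    if not 0.0 <= improvement < 1.0:
        raise ValueError("improvement must be in the range [0, 1)")
    return baseline_hours * (1.0 - improvement)


# Hypothetical training job that takes 100 hours on the baseline fabric.
baseline = 100.0
best_case = improved_jct(baseline, 0.30)  # "up to" 30%, so this is a best case
print(f"Baseline: {baseline:.1f} h, best case: {best_case:.1f} h")
```

Because the figure is quoted as "up to" 30%, the result is best treated as an upper bound on the saving rather than an expected value.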

Simplifying AI Cluster Management

Configuring large AI clusters with thousands of GPUs can be daunting. Other Ethernet-based solutions often require extensive tuning and configuration adjustments (buffer sizes, PFC, ECN, etc.) whenever the workload changes. DriveNets Network Cloud-AI minimizes the need for such reconfiguration: it supports diverse GPU and NIC vendors without complex tuning and allows seamless transitions between workloads.

Additionally, the solution provides unified network management by offering a single network for both backend and storage connectivity. Storage plays a crucial role in AI workloads because it directly impacts performance, so the storage network must be designed to match the rest of the cluster infrastructure.

DriveNets Network Cloud-AI enhances the storage network by carrying both storage and compute traffic on the same fabric without one affecting the other, avoiding the “noisy neighbors” problem. This eliminates the need for special overlay technologies (e.g., VXLAN encapsulation), reduces latency, and simplifies the overall management of AI clusters.

Open AI Infrastructure Is a Must

In the race to deploy AI capabilities, open infrastructures are no longer optional; they are imperative. DriveNets’ open standard platform empowers hyperscalers and large enterprises to break free from Nvidia’s InfiniBand lock-in, streamline hardware and optics procurement, and build a robust supply chain that accelerates their AI journey. By embracing Ethernet as the backbone of AI networking and leveraging a disaggregated, open ecosystem, DriveNets enables faster deployment, improved performance, and greater flexibility.
