
Leading cloud and AI infrastructure providers are increasingly shifting from proprietary InfiniBand to Ethernet – a move driven by cost, simplicity and ecosystem flexibility. Yet traditional Ethernet falls short in one critical area – delivering the deterministic, lossless performance that AI workloads require. That’s where DriveNets comes in.

What is the Problem with Traditional Ethernet for AI Workloads?

Ethernet was never designed with AI in mind. While it’s ubiquitous and cost-effective, its packet-based, best-effort nature leads to significant challenges when applied to AI clusters:

- Latency sensitivity: AI workloads, especially during distributed training, are extremely sensitive to jitter and latency. Standard Ethernet provides no guarantees, often introducing variability that slows down performance.

- Congestion: With multiple AI jobs running concurrently and large-scale parameter updates being exchanged, Ethernet links often face head-of-line blocking, congestion, and unpredictable packet drops.

- Inconsistent performance: Ethernet switches use shared queues, leading to noisy-neighbor effects that can degrade performance on one port when a neighboring port is under load.

To resolve these issues, hyperscalers have traditionally turned to expensive and proprietary InfiniBand solutions. However, DriveNets offers a radically different approach – leveraging standard Ethernet hardware but rearchitecting its behavior using innovative scheduling techniques.

DriveNets Network Cloud-AI – Lossless Ethernet Built for AI

DriveNets Network Cloud-AI is a high-performance, AI-native networking solution that transforms Ethernet into a predictable, lossless, and scalable fabric. The secret lies in combining cell spraying with virtual output queuing (VOQ) to create a scheduled fabric – an architecture that mimics the benefits of circuit-switched networks without sacrificing the openness and economics of Ethernet.

How It Works – Cell Spraying + VOQ = Scheduling

Cell Spraying – Foundation of Load Distribution

Rather than transmitting large, variable-length packets, Network Cloud-AI breaks data into uniform, fixed-size cells, which are then sprayed across multiple parallel paths in the network. This technique ensures that no single link is overwhelmed, even during bursts of data movement, and eliminates “elephant flows” that can clog traditional Ethernet networks.

Spraying cells across multiple network paths helps:

- Smooth out traffic peaks thanks to perfect load balancing

- Ensure deterministic latency behavior

- Reduce the chance of congestion hotspots

It’s a simple yet powerful concept — by treating all traffic uniformly and slicing it into cells, the network behaves predictably even under extreme load.
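The effect of spraying can be illustrated with a small sketch. The Python snippet below is a minimal model, not DriveNets' implementation: the 256-byte cell size, the round-robin link choice, and the `flow_id % num_links` stand-in for a flow hash are all assumptions made for illustration. It compares the per-link byte load of per-flow placement with that of cell spraying.

```python
CELL_SIZE = 256  # bytes per cell; hypothetical value chosen for illustration


def segment(packet, cell_size=CELL_SIZE):
    """Split a packet into fixed-size cells, zero-padding the final cell."""
    return [
        packet[off:off + cell_size].ljust(cell_size, b"\x00")
        for off in range(0, len(packet), cell_size)
    ]


def flow_hashed_load(flows, num_links):
    """Per-flow placement: every packet of a flow uses the same link."""
    load = [0] * num_links
    for flow_id, packets in flows.items():
        link = flow_id % num_links  # stand-in for a real header hash
        load[link] += sum(len(p) for p in packets)
    return load


def sprayed_load(flows, num_links):
    """Cell spraying: cells from all flows go round-robin over the links."""
    load = [0] * num_links
    link = 0
    for packets in flows.values():
        for packet in packets:
            for cell in segment(packet):
                load[link] += len(cell)
                link = (link + 1) % num_links
    return load


# Two large flows that happen to map to the same link, plus one small flow.
flows = {
    0: [bytes(9000)] * 100,
    4: [bytes(9000)] * 100,
    1: [bytes(1500)] * 10,
}
hashed = flow_hashed_load(flows, num_links=4)
sprayed = sprayed_load(flows, num_links=4)
print("flow-hashed:", hashed)
print("sprayed:    ", sprayed)
```

In this model the two large flows collide on one link, which ends up carrying nearly all the traffic, while spraying keeps the difference between any two links within one cell. The cost is padding: the sprayed total is slightly larger than the raw byte count because the last cell of each packet is filled out to the fixed size.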

Virtual Output Queuing (VOQ) – Eliminating Head-of-Line Blocking

In traditional Ethernet switches, a single congested output port can block the entire input queue, leading to wasted bandwidth and poor performance. Network Cloud-AI solves this with multiple virtual output queues, where each ingress port maintains a dedicated queue for every egress port.

VOQ ensures that traffic is queued precisely where needed and allows the scheduler to make intelligent, per-destination forwarding decisions. Combined with cell spraying, VOQ guarantees fairness and prevents traffic from different sources from interfering with each other – a crucial requirement in AI training, where workloads are tightly synchronized.
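A toy model makes the head-of-line effect concrete. The sketch below is illustrative only: the port names, the `ready_outputs` set, and the one-shot drain are assumptions, and a real switch would arbitrate cell by cell rather than empty a queue in one pass.

```python
from collections import defaultdict, deque


def drain_fifo(arrivals, ready_outputs):
    """Single input FIFO: only the head may leave, so a blocked head stalls all."""
    queue = deque(arrivals)
    sent = []
    while queue and queue[0] in ready_outputs:
        sent.append(queue.popleft())
    return sent


def drain_voq(arrivals, ready_outputs):
    """One queue per destination: a busy output only holds back its own queue."""
    voqs = defaultdict(deque)
    for dst in arrivals:
        voqs[dst].append(dst)
    sent = []
    for dst in sorted(voqs):
        if dst in ready_outputs:
            sent.extend(voqs[dst])
    return sent


# Packets are labelled by destination port; output "A" is congested.
arrivals = ["A", "B", "C", "B"]
ready_outputs = {"B", "C"}

print("FIFO sends:", drain_fifo(arrivals, ready_outputs))
print("VOQ sends: ", drain_voq(arrivals, ready_outputs))
```

With the single FIFO, the packet for the congested port sits at the head and nothing moves. With per-destination queues, the traffic for the two free ports is forwarded and only the packet for the congested port waits.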

How End-to-End VOQ Works

An end-to-end VOQ system is a traffic management system that uses virtual output queues to provide a consistent level of service for traffic flows across a data center network. Virtual output queues are logical queues that are assigned to specific traffic flows. Each queue has a fixed size, and a packet is transmitted from a virtual queue only when there is a guarantee that it will be delivered to its destination. This ensures that no traffic flow is starved of resources, even if the network is congested.

DriveNets’ end-to-end VOQ system uses a credit-based flow-control mechanism to ensure that the virtual queues do not overflow. When a packet is transmitted from a virtual output queue, the switch sends a credit to the source of the packet. This credit indicates how many more packets can be transmitted from the virtual queue before it overflows. The source of the packet must wait until it receives a credit before transmitting another packet.
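The credit mechanism can be sketched as a small simulation. This is one common reading of credit-based flow control, in which the receiving side grants a credit only when it has buffer space for the cell that credit authorizes. It is not a description of DriveNets' protocol: the class names, the buffer size, and the drain rate are hypothetical.

```python
from collections import deque


class Egress:
    """Receiver with a small buffer that grants credits only when it has room."""

    def __init__(self, buffer_cells):
        self.capacity = buffer_cells
        self.buffer = deque()
        self.outstanding = 0  # credits granted but not yet used

    def grant(self):
        if len(self.buffer) + self.outstanding < self.capacity:
            self.outstanding += 1
            return True
        return False

    def receive(self, cell):
        assert self.outstanding > 0, "cell arrived without a credit"
        self.outstanding -= 1
        self.buffer.append(cell)

    def transmit(self):
        return self.buffer.popleft() if self.buffer else None


class IngressVOQ:
    """Sender-side queue that transmits a cell only when it holds a credit."""

    def __init__(self, cells):
        self.queue = deque(cells)
        self.credits = 0

    def step(self, egress):
        if self.queue and egress.grant():
            self.credits += 1
        if self.queue and self.credits:
            self.credits -= 1
            egress.receive(self.queue.popleft())


def simulate(num_cells=10, buffer_cells=2, drain_every=3, max_ticks=1000):
    """Run a slow egress against an eager ingress; return cells and peak buffer."""
    egress, voq = Egress(buffer_cells), IngressVOQ(range(num_cells))
    delivered, peak = [], 0
    for tick in range(max_ticks):
        voq.step(egress)
        peak = max(peak, len(egress.buffer))
        if tick % drain_every == 0:  # the egress link is slower than the ingress
            cell = egress.transmit()
            if cell is not None:
                delivered.append(cell)
        if len(delivered) == num_cells:
            break
    return delivered, peak


delivered, peak = simulate()
print("delivered in order:", delivered == list(range(10)))
print("peak egress buffer occupancy:", peak)
```

Even though the ingress always has data to send and the egress drains slowly, the egress buffer never exceeds its capacity and no cell is dropped; the backlog stays in the ingress queue instead.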

Resulting Scheduled Fabric – Deterministic, Lossless Ethernet

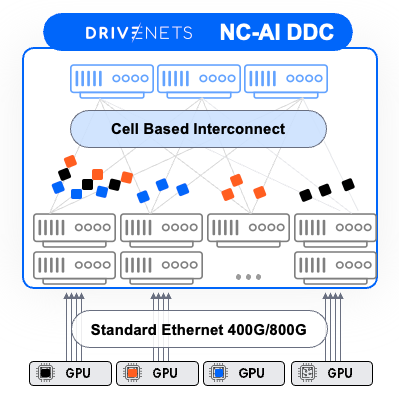

At the heart of Network Cloud-AI is a scheduled fabric built on DriveNets’ Distributed Disaggregated Chassis (DDC) architecture. This architecture enables centralized control and scheduling of data moving through the fabric.

Instead of relying on reactive congestion mechanisms like ECN (explicit congestion notification) and PFC (priority flow control), Network Cloud-AI takes a proactive approach. By calculating transmission schedules in advance, the DriveNets solution ensures that each cell knows exactly when and where to go. This creates deterministic behavior similar to time-division multiplexing (TDM) and provides a predictable, lossless fabric.
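To make the TDM comparison concrete, here is a deliberately simple sketch of a precomputed, conflict-free schedule. It is not how DriveNets computes schedules. It uses a fixed rotating pattern and assumes uniform demand between all ports, and the function name is hypothetical. In each time slot, every ingress is assigned a different egress, so no output ever receives two cells at once.

```python
def rotating_schedule(num_ports):
    """Return schedule[slot][ingress] = egress for one full frame of slots."""
    return [
        [(ingress + slot) % num_ports for ingress in range(num_ports)]
        for slot in range(num_ports)
    ]


schedule = rotating_schedule(4)
for slot, assignment in enumerate(schedule):
    pairs = ", ".join(f"in{i}->out{e}" for i, e in enumerate(assignment))
    print(f"slot {slot}: {pairs}")
```

Because every slot is a permutation of the outputs, contention is ruled out by construction rather than detected after the fact, which is the property that distinguishes a scheduled approach from reactive mechanisms such as ECN and PFC.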

By orchestrating cell movement across multiple paths, the scheduled fabric ensures:

- Zero packet loss

- Ultra-low tail latency

- Consistent throughput at scale

Why Does This Matter for AI?

AI training performance scales linearly only when the network can keep up with the GPUs. With Network Cloud-AI, DriveNets eliminates the typical network-induced delays and inconsistencies that slow down training jobs. This results in:

- Higher GPU utilization

- Reduced training time and costs

- Seamless scaling across thousands of GPUs

Most importantly, the DriveNets solution is built on standard Ethernet hardware — meaning organizations don’t need to lock into proprietary technologies or face escalating costs.

Delivering the Performance Required by High-End HPC/AI Networks

DriveNets Network Cloud-AI represents a fundamental shift in how we think about Ethernet in the age of AI. By transforming Ethernet into a lossless, deterministic transport through a combination of cell spraying, VOQ, and centralized scheduling, Network Cloud-AI delivers the performance required by high-end HPC/AI networks while preserving the openness and flexibility of Ethernet.

As AI models continue to scale, so too must the networks that power them. With Network Cloud-AI, Ethernet is finally ready for the AI era.

Key Takeaways

- Traditional Ethernet, designed for general-purpose networking, struggles with AI workloads due to its best-effort delivery model, leading to issues like latency variability, congestion, and packet loss. These shortcomings can hinder the performance of large-scale AI clusters.

- DriveNets’ Network Cloud-AI transforms Ethernet into a predictable and lossless fabric by implementing a scheduled architecture. This approach mimics the benefits of circuit-switched networks, ensuring deterministic performance without sacrificing the openness and cost-effectiveness of Ethernet.

- The solution employs cell spraying to distribute fixed-size data cells across multiple network paths, preventing congestion and ensuring load balancing. Combined with VOQ, which eliminates head-of-line blocking by maintaining dedicated queues for each output port, the network achieves fairness and prevents traffic interference—a crucial requirement for synchronized AI training.

- By eliminating network-induced delays and inconsistencies, DriveNets’ solution enhances GPU utilization and reduces training times. Built on standard Ethernet hardware, it allows organizations to scale AI clusters seamlessly without the need for proprietary technologies, supporting up to 32,000 GPUs in a single cluster.

Frequently Asked Questions

- Why is traditional Ethernet not ideal for AI workloads?

Traditional Ethernet relies on a best-effort delivery model, which can introduce latency, congestion, and packet loss – issues that severely impact the performance of distributed AI training. AI clusters require high-throughput, low-latency, and lossless communication between GPUs to maintain synchronization and maximize utilization.

- How does DriveNets create a lossless Ethernet fabric for AI clusters?

DriveNets re-engineers Ethernet by introducing a scheduled, cell-based architecture. It breaks traffic into fixed-size cells that are sprayed across multiple paths, preventing congestion. Combined with Virtual Output Queuing (VOQ), this architecture ensures predictable, fair, and lossless data delivery, which suits the demands of large AI workloads.

- What are the benefits of DriveNets’ approach compared to proprietary AI fabrics?

Unlike proprietary solutions that require custom hardware, DriveNets’ AI fabric is built on standard Ethernet infrastructure, enabling scalability up to tens of thousands of GPUs. It provides deterministic performance, eliminates head-of-line blocking, and reduces training time, all while preserving the flexibility, openness, and cost advantages of Ethernet.
