Remote direct memory access (RDMA) has been a very successful technology for allowing a graphics processing unit (GPU), tensor processing unit (TPU), or other accelerator to transfer data directly from the sender’s memory to the receiver’s memory. This zero-copy approach results in low latency and avoids operating system overheads. Because of this, network technology that supports RDMA is a fundamental component of AI training jobs today.

The RDMA over Converged Ethernet version 2 (RoCEv2) protocol was created to run RDMA over routable IP and Ethernet networks by carrying the InfiniBand transport headers inside UDP/IP packets. RoCEv2 has emerged as a leading scale-out transport protocol, promising high throughput and low latency. However, like any evolving technology, RoCEv2 is not without its challenges. While RoCEv2 is undeniably powerful, it relies heavily on specific mechanisms, such as priority flow control (PFC) and retransmission techniques, that introduce complexity and inefficiencies.

Three Key RoCEv2 Issues

Let’s dive into three critical areas that illustrate why RoCEv2 is good – but can be improved upon with a Disaggregated Distributed Chassis (DDC) fabric-scheduled Ethernet solution.

1. Intense Buffering from PFC

One of the cornerstones of RoCEv2 is its reliance on PFC to create a lossless Ethernet environment. PFC allows for per-priority flow control, pausing traffic when congestion is detected on specific priority queues, which sounds ideal for preserving packet integrity in RDMA traffic. However, this approach requires significant buffering to handle paused traffic effectively and can lead to performance bottlenecks:

- Buffer pressure: Switches and network devices need large buffers to absorb bursts of traffic while paused queues clear congestion. In addition, every lossless queue must reserve headroom for the data still in flight after a pause frame is sent, and that headroom grows with link speed and cable length. The buffer requirement therefore grows as the network scales, leading to expensive hardware upgrades.

- Performance variability: Inconsistent buffer allocation between devices can lead to performance bottlenecks, especially in heterogeneous Clos environments.
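To make the buffer-pressure point concrete, here is a rough headroom estimate for a single lossless queue. It is a simplified model, not the exact IEEE 802.1Qbb calculation or any vendor's formula: it assumes about 5 ns/m of propagation delay in fiber, counts the data that keeps arriving during one cable round trip plus the sender's response time, and adds one maximum-size frame at each end. The function names and example values are hypothetical.

```python
# Simplified PFC headroom estimate (hypothetical helpers, not a vendor formula).

FIBER_NS_PER_M = 5.0  # assumption: ~5 ns per metre of fiber (about 2/3 of c)


def pfc_headroom_bytes(link_gbps: float, cable_m: float,
                       mtu_bytes: int, response_ns: float) -> int:
    """Bytes a receiver must still absorb after it decides to send a PAUSE frame."""
    bytes_per_ns = link_gbps / 8.0  # 1 Gbit/s == 1 bit/ns, so divide by 8 for bytes/ns
    # The PAUSE frame needs one cable delay to reach the sender, and data already
    # on the wire needs another cable delay to arrive: one round trip in total.
    round_trip_ns = 2 * cable_m * FIBER_NS_PER_M
    in_flight = (round_trip_ns + response_ns) * bytes_per_ns
    # A maximum-size frame may be mid-transmission at each end and cannot be cut short.
    return int(in_flight + 2 * mtu_bytes)


def switch_headroom_bytes(ports: int, lossless_priorities: int, per_queue: int) -> int:
    """Total headroom reserved on a switch: one reservation per port per lossless priority."""
    return ports * lossless_priorities * per_queue
```

Under these assumptions, a 400 Gb/s link over 100 m of fiber with 9,216-byte frames and a 1,000 ns response time needs `pfc_headroom_bytes(400, 100, 9216, 1000)`, or 118,432 bytes per lossless queue; at 800 Gb/s the same link needs 218,432 bytes. Multiplying by port count and the number of lossless priorities shows how quickly the reservation adds up on a high-radix switch.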

2. PFC Congestion Effects

While PFC enables lossless transmission, it can inadvertently cause multiple congestion effects:

- PFC storms (congestion spreading): Continuous pause frames can lead to PFC storms, where entire sections of the network come to a halt, impacting critical applications. Moving beyond PFC to congestion control driven by explicit congestion notification (ECN), in which switches mark packets and senders adaptively reduce their rate, can reduce reliance on pause frames and avoid PFC storms.

- Victim flows: Flows that share a priority with the congested traffic but are unrelated to the source of congestion are paused as well, because PFC pauses the entire priority queue rather than individual flows.

- Congestion trees: Congestion at one point can cascade, creating congestion trees that affect large parts of the network.

- Deadlocks: In extreme cases, PFC can create circular buffer dependencies, in which a ring of devices continuously send pause frames to one another. The result is a deadlock in which multiple flows are perpetually paused, requiring manual intervention to recover.
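A PFC deadlock requires a cycle in the buffer dependency graph, where an edge from A to B means that A's lossless queue cannot drain until B has buffer space (B can pause A). The sketch below is a hypothetical helper that looks for such a cycle with a depth-first search; the device names are made up. A cycle is a necessary condition rather than a guarantee: a deadlock forms only if every buffer on the cycle fills at the same time.

```python
from typing import Dict, List, Optional


def find_buffer_dependency_cycle(deps: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle in the 'A waits on B' graph (first node repeated at the end), or None."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, fully explored
    color: Dict[str, int] = {node: WHITE for node in deps}
    for targets in deps.values():
        for target in targets:
            color.setdefault(target, WHITE)  # include nodes that only appear as targets
    path: List[str] = []

    def dfs(node: str) -> Optional[List[str]]:
        color[node] = GREY
        path.append(node)
        for nxt in deps.get(node, []):
            if color[nxt] == GREY:
                # Back edge to a node on the current path: that loop is a cycle.
                return path[path.index(nxt):] + [nxt]
            if color[nxt] == WHITE:
                found = dfs(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in list(color):
        if color[node] == WHITE:
            cycle = dfs(node)
            if cycle:
                return cycle
    return None
```

If link failures or rerouting create a dependency chain such as leaf1 → spine1 → leaf2 → spine2 → leaf1, the function returns that loop; for a loop-free set of dependencies it returns `None`.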

3. Go-Back-N Retransmission

RoCEv2 employs a Go-Back-N retransmission strategy, where any lost packet triggers the retransmission of that packet and all subsequent packets in the sequence, even if they were received successfully. This results in lower “goodput” and poor efficiency.

Selective retransmission mechanisms (e.g., selective acknowledgment, or SACK) could improve efficiency, but retransmission still carries significant drawbacks, especially with Go-Back-N:

- Wasted bandwidth: Resending packets that have already been received consumes valuable network resources, reducing overall efficiency.

- Increased latency: The retransmission of multiple packets unnecessarily increases latency, especially in large-scale deployments with high packet rates.
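The cost difference between Go-Back-N and selective retransmission can be worked out with simple arithmetic. The sketch below assumes that a whole window of packets is already in flight when a loss is detected and that retransmitted packets are not lost themselves; the function names are hypothetical.

```python
from typing import Sequence


def packets_resent(window: int, lost: Sequence[int], scheme: str) -> int:
    """Packets retransmitted to repair losses in one window of packets 0..window-1.

    Assumptions: the whole window was already sent when the loss is detected,
    and retransmissions are delivered successfully.
    """
    if not lost:
        return 0
    if scheme == "go-back-n":
        # The receiver discards everything after the first gap, so the sender
        # resends from the first lost packet to the end of the window.
        return window - min(lost)
    if scheme == "selective":
        # Only the missing packets are resent.
        return len(set(lost))
    raise ValueError(f"unknown scheme: {scheme}")


def transmission_efficiency(window: int, lost: Sequence[int], scheme: str) -> float:
    """Fraction of all transmitted packets that were actually needed."""
    return window / (window + packets_resent(window, lost, scheme))
```

For a 1,000-packet window in which only packet 100 is lost, Go-Back-N resends 900 packets (efficiency 1000/1900, about 53%), while selective retransmission resends a single packet (about 99.9%).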

Net-net, RoCEv2’s congestion control and mitigation mechanism relies on a combination of pause frames (via PFC) and retransmission timeouts to handle packet loss and congestion. This reactive approach to congestion mitigation introduces latency and inefficiency. Pausing flows inherently causes delays, which can significantly impact latency-sensitive applications such as AI model training. Additionally, the reliance on timeout mechanisms for detecting unacknowledged packets further worsens delays and can reduce throughput, especially during prolonged congestion scenarios.
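In practice, RoCEv2 deployments usually pair PFC with ECN-based rate control, most commonly DCQCN, and that is reactive as well: a sender slows down only after switches have already marked packets and the receiver has returned a congestion notification packet (CNP). The class below is a simplified, hypothetical sketch of the DCQCN sender's rate-cut and fast-recovery rules. It merges DCQCN's separate alpha-update and rate-increase timers into a single `on_quiet_period` call and omits the additive- and hyper-increase stages; the gain `g = 1/16` is an illustrative value.

```python
class DcqcnLikeSender:
    """Simplified DCQCN-style reaction point (sender). Hypothetical sketch."""

    def __init__(self, line_rate_gbps: float, g: float = 1 / 16) -> None:
        self.rc = line_rate_gbps  # current sending rate
        self.rt = line_rate_gbps  # target rate: the rate before the most recent cut
        self.alpha = 1.0          # running estimate of congestion severity
        self.g = g                # EWMA gain for alpha (illustrative value)

    def on_cnp(self) -> None:
        # A CNP means ECN-marked packets already reached the receiver: cut the rate.
        self.rt = self.rc
        self.rc = self.rc * (1 - self.alpha / 2)
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self) -> None:
        # No CNP for a whole period: decay alpha and recover halfway to the target.
        self.alpha = (1 - self.g) * self.alpha
        self.rc = (self.rt + self.rc) / 2
```

Starting at 100 Gb/s, one CNP halves the rate to 50 Gb/s, and one quiet period brings it back to 75 Gb/s. The rate drops only after congestion has already built up at a switch, which is exactly the reactive behavior described above.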

Where Do We Go from Here?

While large lossless RoCE networks have been successfully deployed, they require careful tuning, operation, and monitoring to perform well without triggering the above-mentioned effects. However, not every hyperscaler or enterprise can afford this level of investment and expertise, and it drives up total cost of ownership (TCO).

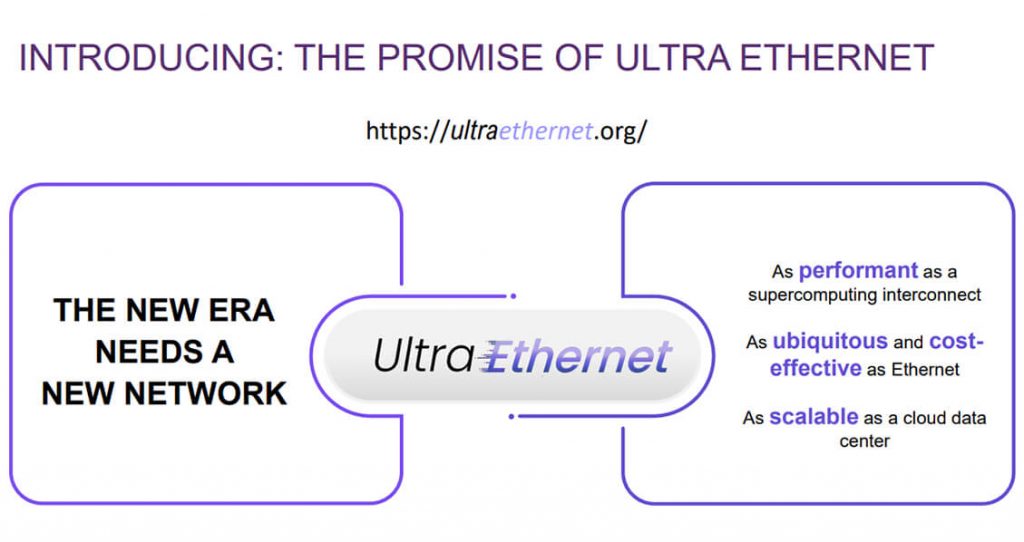

The Ultra Ethernet Consortium (UEC) aims to replace the legacy RoCE protocol with Ultra Ethernet Transport (UET). UEC believe it is time for a fresh start to “deliver an Ethernet-based open, interoperable, high-performance, full-communications stack architecture to meet the growing network demands of AI & HPC at scale.”

Source: SNIA.org


Until UEC develops and releases a mature Ultra Ethernet solution, hyperscalers and enterprises can start leveraging RoCEv2 today over a lossless and predictable fabric-scheduled Ethernet.

Disaggregated architectures, combined with the virtual output queueing (VOQ) technique, enable AI backend networking fabrics to be both lossless and predictable. VOQ plays a critical role in avoiding congestion: packets are held at the ingress in a separate queue for each egress port and are only sent across the fabric when the destination is ready to receive them. Because traffic headed to a congested port waits in its own queue, it does not block traffic headed elsewhere. This proactive mechanism prevents bottlenecks and packet drops that can occur in traditional architectures.
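The sketch below models the VOQ idea with credit-based scheduling. Each ingress keeps one queue per egress, and a cell leaves its queue only when the egress grants a credit backed by free buffer space. The class names are hypothetical, request/grant messaging is collapsed into a single `grant()` call, and credits are never returned because the egress in this model does not drain its buffer, so it illustrates the principle rather than any specific product's scheduler.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class Egress:
    """Egress port that hands out credits only for buffer space it can guarantee."""

    def __init__(self, name: str, buffer_cells: int) -> None:
        self.name = name
        self.free = buffer_cells      # cells this egress can still accept
        self.received: List[str] = []

    def grant(self) -> bool:
        # Reserve space for one cell before allowing the ingress to send it.
        if self.free > 0:
            self.free -= 1
            return True
        return False

    def accept(self, cell: str) -> None:
        self.received.append(cell)


class Ingress:
    """Ingress with one virtual output queue (VOQ) per egress port."""

    def __init__(self, egress_names: List[str]) -> None:
        self.voqs: Dict[str, Deque[str]] = {name: deque() for name in egress_names}

    def enqueue(self, dest: str, cell: str) -> None:
        self.voqs[dest].append(cell)

    def schedule(self, egresses: Dict[str, Egress]) -> List[Tuple[str, str]]:
        """One scheduling round: each non-empty VOQ sends a cell only if granted a credit."""
        sent = []
        for dest, queue in self.voqs.items():
            if queue and egresses[dest].grant():
                cell = queue.popleft()
                egresses[dest].accept(cell)
                sent.append((dest, cell))
        return sent


if __name__ == "__main__":
    egresses = {"A": Egress("A", 8), "B": Egress("B", 0)}  # B is congested
    ingress = Ingress(["A", "B"])
    for i in range(3):
        ingress.enqueue("B", f"b{i}")
        ingress.enqueue("A", f"a{i}")
    for _ in range(3):
        ingress.schedule(egresses)
    print(egresses["A"].received, list(ingress.voqs["B"]))
```

In the example, egress B has no free buffer, so its three cells wait in their VOQ instead of being dropped, while all three cells for egress A are delivered. With a single shared FIFO, the cells for B at the head of the queue would have blocked the cells for A (head-of-line blocking).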

Unlike traditional RoCEv2-over-standard-Ethernet solutions, which rely on congestion mitigation to address issues after they occur, the combination of disaggregation and VOQ in Fabric-Scheduled Ethernet, alongside ECN/PFC mechanisms, applies congestion avoidance, proactively preventing congestion before it happens. This approach ensures smooth and efficient data flow, even in high-scale deployments.

How the Industry Can Push RoCEv2 to New Heights

RoCEv2 has proven to be a reliable transport protocol for modern data centers, but its reliance on PFC, timeout-based congestion control, and Go-Back-N retransmission reveals areas for improvement. By addressing these issues, the industry can push RoCEv2 to new heights, delivering even better performance, scalability, and efficiency for the most demanding workloads.

At DriveNets, we are redefining network architecture with a focus on congestion avoidance rather than congestion mitigation. By leveraging DDC architectures with Fabric-Scheduled Ethernet, we proactively prevent congestion before it arises, delivering predictable, lossless networking for RoCEv2. This approach eliminates the need for imperfect reactive solutions that attempt to address congestion after it occurs, ensuring superior performance and reliability at scale.

As we continue to innovate and push the boundaries of networking, we are eager to hear your perspectives. How do you envision the future evolution of RoCEv2 and AI-driven networking? Let’s drive the conversation forward together…

Key Takeaways:

- RoCEv2’s Role in Data Centers: RoCEv2 (RDMA over Converged Ethernet version 2) is widely adopted in modern data centers for its high throughput and low latency, essential for applications like AI training and large-scale analytics.

- Challenges with PFC Dependency: RoCEv2 relies on Priority Flow Control (PFC) to ensure lossless Ethernet communication. However, this dependence can lead to issues such as:

  - Increased Buffer Requirements: Significant buffering is needed to manage paused traffic, potentially causing performance bottlenecks.

  - PFC Storms and Congestion Spreading: Continuous pause frames can halt large network sections, affecting critical applications.

  - Deadlocks: Circular dependencies from excessive PFC usage can lead to network deadlocks requiring manual intervention.

- Inefficiencies in Go-Back-N Retransmission: RoCEv2 employs a Go-Back-N retransmission strategy, where the loss of a single packet triggers the retransmission of that packet and all subsequent ones, even if they were received successfully. This approach leads to wasted bandwidth and increased latency.

- Advocating for Fabric-Scheduled Ethernet: To overcome RoCEv2’s limitations, adopting fabric-scheduled Ethernet with Virtual Output Queuing (VOQ) is recommended. This architecture proactively prevents congestion, ensuring smooth and efficient data flow in high-scale deployments.

- DriveNets’ Innovative Approach: DriveNets is redefining network architecture by focusing on congestion avoidance rather than mitigation. Utilizing Disaggregated Distributed Chassis (DDC) architectures with Fabric-Scheduled Ethernet, DriveNets delivers predictable, lossless networking for RoCEv2, enhancing performance and reliability at scale.
