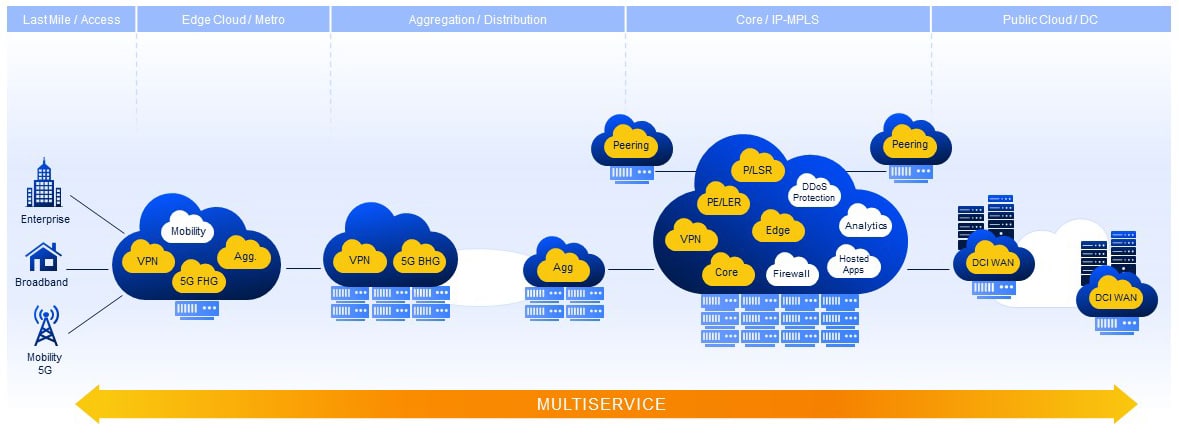

In my session, I presented DriveNets’ extremely broad portfolio of networking use cases, illustrated here:

A single architecture serving a wide range of networking use cases

The key point here is that all of these use cases and network functions are implemented with the same product (or solution): DriveNets Network Cloud.

Using cloud-native software running on top of networking-optimized white boxes, with an abstraction layer that allows any hardware resource to be used for any networking function, this architecture serves a wide range of networking use cases: from your MPLS-only P router, PE router functionality, peering points, and aggregation and distribution layers, through layer-2 and layer-3 VPNs, to your edge cloud, 5G gateways, and DCI WAN connectivity.

Such a wide scope of use cases typically requires an equally wide range of solutions and products, each optimized for a specific use case. In Network Cloud, however, network functions are mounted on the shared infrastructure as service instances, allocated the optimal set of resources, and easily scaled, or even ported, across the network as traffic patterns or capacity demands change.

These service instances are not necessarily developed by DriveNets; like a cloud, this architecture is built to host any third-party, networking-intensive application. Such applications could be a firewall, DPI, DDoS mitigation, or even 5G network functions, all sharing the same infrastructure and benefiting from an optimal allocation of resources.
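To make the idea of service instances sharing one resource pool more concrete, here is a minimal Python sketch. It is not DriveNets' implementation or API: the `SharedCluster` and `ServiceInstance` classes, the four resource dimensions, and all numbers are hypothetical. An instance (in-house or third-party) is admitted only if every resource dimension still has headroom, and scaling an instance re-checks the pool and rolls back if the new size does not fit.

```python
from dataclasses import dataclass, field

# Resource dimensions taken from the article; units here are arbitrary.
RESOURCES = ("x86", "bandwidth", "tcam", "qos")


@dataclass
class ServiceInstance:
    """A hypothetical network function (DriveNets or third-party) and its demand."""
    name: str
    demand: dict[str, float]


@dataclass
class SharedCluster:
    """A hypothetical resource pool shared by every mounted service instance."""
    capacity: dict[str, float]
    instances: list[ServiceInstance] = field(default_factory=list)

    def used(self) -> dict[str, float]:
        # Sum each resource dimension over all mounted instances.
        return {r: sum(i.demand.get(r, 0.0) for i in self.instances) for r in RESOURCES}

    def fits(self, demand: dict[str, float]) -> bool:
        # Every dimension must stay within the pool's capacity.
        used = self.used()
        return all(used[r] + demand.get(r, 0.0) <= self.capacity.get(r, 0.0)
                   for r in RESOURCES)

    def mount(self, instance: ServiceInstance) -> bool:
        """Admit the instance only if the shared pool can accommodate it."""
        if not self.fits(instance.demand):
            return False
        self.instances.append(instance)
        return True

    def scale(self, name: str, factor: float) -> bool:
        """Resize an instance in place; roll back if the pool cannot absorb it."""
        for idx, inst in enumerate(self.instances):
            if inst.name == name:
                scaled = ServiceInstance(name, {r: v * factor for r, v in inst.demand.items()})
                del self.instances[idx]  # free the old allocation first
                if self.fits(scaled.demand):
                    self.instances.insert(idx, scaled)
                    return True
                self.instances.insert(idx, inst)  # restore the original allocation
                return False
        raise KeyError(name)


cluster = SharedCluster({"x86": 64, "bandwidth": 100, "tcam": 10, "qos": 10})
cluster.mount(ServiceInstance("p-router", {"bandwidth": 60, "x86": 4, "tcam": 1, "qos": 1}))
cluster.mount(ServiceInstance("firewall", {"x86": 32, "bandwidth": 20, "tcam": 4, "qos": 2}))
print(cluster.mount(ServiceInstance("ddos", {"x86": 40})))  # False: x86 would reach 76 > 64
print(cluster.scale("firewall", 1.5))  # True: x86 52, bandwidth 90, tcam 7, qos 4
```

In this toy run, a bandwidth-heavy P router and an x86-heavy firewall share one pool. A DDoS instance asking for 40 more x86 units is rejected, while the firewall can still grow by 1.5x because the pool has headroom in every dimension.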

Optimal scaling of any network site

While the architecture is identical in all network sites, the size of each site is derived from its capacity requirements. Still, the hardware and software building blocks, planning methodology, and management practices are identical across all use cases and sizes. This allows optimal scaling of any network site, from a 1.8 Tbps single-box solution to an extra-large cluster serving up to 691 Tbps.
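The idea that only the size changes from site to site can be illustrated with a short Python sketch. It assumes, purely for illustration, that every white box contributes the same forwarding capacity and that a site's box count is its required capacity divided by the per-box capacity, rounded up. It ignores fabric boxes, redundancy, and oversubscription, so it is not DriveNets' planning method. Only the 691 Tbps upper bound comes from the text; `boxes_needed` and the per-box capacity are hypothetical.

```python
import math

# Upper bound quoted in the article for an extra-large cluster.
MAX_CLUSTER_TBPS = 691.0


def boxes_needed(site_tbps: float, box_tbps: float) -> int:
    """Hypothetical sizing rule: identical boxes, capacity adds up linearly."""
    if site_tbps <= 0 or box_tbps <= 0:
        raise ValueError("capacities must be positive")
    if site_tbps > MAX_CLUSTER_TBPS:
        raise ValueError(f"{site_tbps} Tbps exceeds the {MAX_CLUSTER_TBPS} Tbps cluster limit")
    # Round up: a partially used box still has to be deployed.
    return math.ceil(site_tbps / box_tbps)


print(boxes_needed(1.8, 1.8))   # 1: the single-box case
print(boxes_needed(10.0, 4.0))  # 3 boxes of a hypothetical 4 Tbps model
```

Because the building block is the same everywhere, the sizing logic is identical for every site; only its input, the required capacity, changes.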

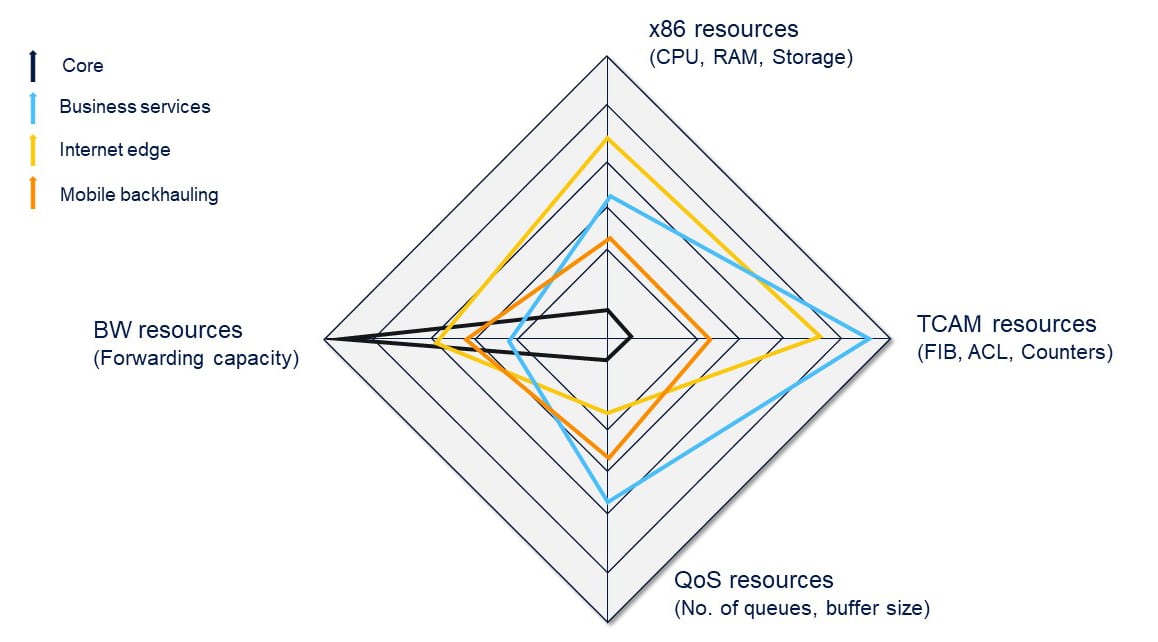

Shared resources for different networking functions

The fact that resources are shared between different networking functions co-located on a shared infrastructure creates huge savings and optimization compared to a monolithic chassis. The classic chassis we are all familiar with has a fixed set of resources, such as compute (x86, RAM, storage), bandwidth (forwarding capacity), TCAM (for FIB and ACL lookups), and QoS (buffers, queues, etc.). The thing is, most networking functions require a very specific mix of resources. For instance, a core function (e.g., a P router) requires intensive bandwidth resources but little x86, TCAM, or QoS. An edge router, on the other hand, requires more x86 and TCAM resources but comparatively little bandwidth.

This means that almost every monolithic router deployed in your network has wasted resources: resources you pay for but cannot use to their full potential, because other resources are exhausted at an early stage.

In the Network Cloud architecture, those resources can be shared across multiple networking functions co-located in the same cluster, making optimal use of resources and leading to improved performance and a significant reduction in cost.
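The stranded-resources argument can be checked with a small Python sketch. The model is a deliberate, hypothetical simplification. Every deployable unit (a monolithic chassis, or its shared-cluster equivalent) offers the same fixed resource vector, and a function needs enough units that no single resource dimension runs out. The resource names echo the article; the function names and all numbers are made up.

```python
import math

Resources = dict[str, float]


def units_for(demand: Resources, unit: Resources) -> int:
    """Units needed so that no resource dimension of `demand` is exhausted."""
    return max(math.ceil(demand.get(r, 0.0) / cap) for r, cap in unit.items())


def dedicated_units(functions: dict[str, Resources], unit: Resources) -> int:
    """One dedicated set of units per function, like a chassis per role."""
    return sum(units_for(d, unit) for d in functions.values())


def pooled_units(functions: dict[str, Resources], unit: Resources) -> int:
    """All co-located functions draw from a single shared pool."""
    total = {r: sum(d.get(r, 0.0) for d in functions.values()) for r in unit}
    return units_for(total, unit)


unit = {"bandwidth": 10, "x86": 10, "tcam": 10}
functions = {
    "core (P router)": {"bandwidth": 18, "x86": 2, "tcam": 2},  # bandwidth-heavy
    "edge router": {"bandwidth": 4, "x86": 9, "tcam": 17},      # x86/TCAM-heavy
}
print(dedicated_units(functions, unit))  # 4: 2 for core + 2 for edge
print(pooled_units(functions, unit))     # 3: driven by 22 units of total bandwidth
```

In this model, pooling never needs more units than dedicated deployment, since the ceiling of a sum never exceeds the sum of ceilings and the maximum of a sum never exceeds the sum of maxima. The difference corresponds to the stranded capacity described above.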