What Is an AI Workload?

Since the introduction of ChatGPT – the “Eureka” moment of artificial intelligence (AI) – tech circles have been using AI as a buzzword more than ever. As a result, multiple related terms have been used more frequently, and “AI workload” is one of them.

AI workloads are the “engine rooms” behind artificial intelligence algorithms. The term usually describes the services and processes carried out with underlying AI techniques, such as deep learning or machine learning.

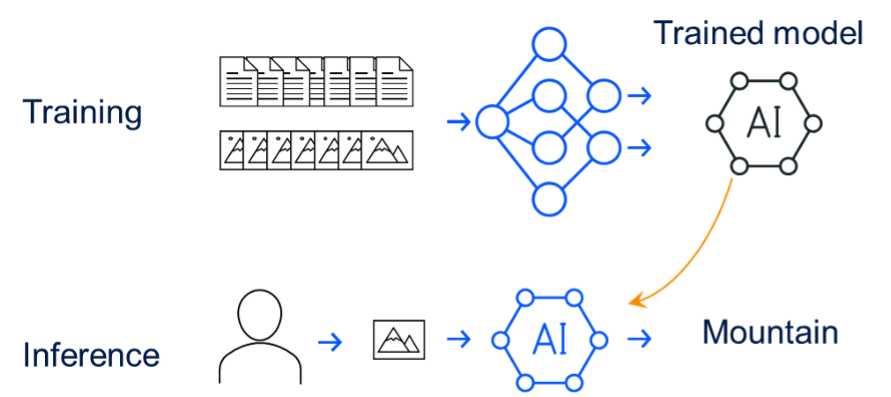

These processes include training AI models by feeding them large amounts of data so that they learn to identify patterns or make predictions; running a trained model on new data, known as inference (this is what ChatGPT does when it answers a prompt); and optimizing models by adjusting them to improve performance.
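The three kinds of work described above can be illustrated with a deliberately tiny sketch. It is not how production models such as ChatGPT are built; it assumes a one-variable linear model, a made-up five-point dataset, and hypothetical helper names (`train`, `infer`, `mean_squared_error`) chosen only for illustration.

```python
# Toy illustration of training, inference, and optimization.
# Assumptions: a linear model y = w*x + b and a made-up dataset.

def train(samples, epochs, lr):
    """Training: fit w and b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference: apply the trained model to an input it has not seen."""
    w, b = model
    return w * x + b


def mean_squared_error(model, samples):
    """Average squared prediction error of the model on the samples."""
    return sum((infer(model, x) - y) ** 2 for x, y in samples) / len(samples)


# Hypothetical training data generated from y = 2x + 1.
data = [(x, 2 * x + 1) for x in range(5)]

# Training with a small budget, then "optimizing" by adjusting the
# number of epochs (one simple form of tuning) and retraining.
quick_model = train(data, epochs=10, lr=0.05)
tuned_model = train(data, epochs=2000, lr=0.05)

# Inference on new data.
prediction = infer(tuned_model, 10)
print(mean_squared_error(quick_model, data), mean_squared_error(tuned_model, data))
print(prediction)
```

Real AI workloads follow the same pattern at a vastly larger scale, with many more parameters and far more data, which is where the infrastructure demands discussed later come from.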

Computing tasks are not new, of course, but AI workloads are distinguished by their complexity and by the type of data they process. A workload is typically called an AI workload when it deals with unstructured data such as images, free-form text, and other untagged data that does not fit a structured format. Analyzing unstructured data often requires deep learning or machine learning algorithms.

What are the benefits of AI workloads?

The need for AI workloads arises from our demand for efficiency, insight, and innovation. These specialized computational tasks, powered by machine learning and trained on vast datasets, offer significant advantages:

- Automation: Repetitive, time-consuming tasks can be offloaded to AI, freeing human talent for strategic thinking and creative endeavors.

- Better decision-making: AI analyzes vast amounts of data, uncovering hidden patterns and generating predictions that guide better choices, be it in business, healthcare, or scientific research.

- Innovation: AI tackles challenges intractable for traditional methods, pushing the boundaries of what’s possible in fields like personalized medicine, environmental modeling, and autonomous vehicles.

What are the challenges of AI workloads?

Architects operating AI workloads need to tackle a number of key challenges:

- Data: AI models are only as good as the data they are trained on. Too little data, or data that is inaccurate or biased, can lead to low-quality outcomes.

- Infrastructure: Training AI models requires large numbers of high-performance GPUs, which can be extremely expensive. For example, well-known platforms like ChatGPT and Bard use models trained on thousands of GPUs. Furthermore, these workloads require elasticity and often have dynamic resource demands that are challenging to meet with most infrastructures.

- Ethics, security, and privacy: AI workloads that handle sensitive data raise concerns about privacy breaches and misuse. Moreover, AI can lead to significant shifts in the job market, raising ethical concerns. Potential bias is a further concern, and it is hard to detect because many AI systems lack transparency and are often seen as “black boxes.”

- Performance: Traditional networking solutions can struggle with the high-bandwidth and low-latency requirements of AI workloads. Transferring large datasets from GPU to GPU under strict latency and jitter requirements is not simple. Failing to meet these requirements lengthens job completion time (JCT) and wastes valuable computing power.

- Scalability: Scaling is a further challenge, especially when training large language models (LLMs) on clusters of thousands of GPUs. Existing networks, designed for static workloads, struggle to adapt to the dynamic resource demands of AI, so performance degrades at scale as multiple hops, jitter, and bottlenecks add latency.
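As a rough illustration of the performance point above, the following sketch models JCT as the number of training steps multiplied by the time each step spends computing plus the time GPUs wait on the network. The model, the function names, and all numbers are assumptions picked for the example, not measurements of any real cluster.

```python
# Simplified JCT model (an assumption for illustration only):
#   JCT = steps * (compute time per step + network wait per step)

def job_completion_time(steps, compute_s, network_wait_s):
    """Total job time in seconds under the simplified model."""
    return steps * (compute_s + network_wait_s)


def idle_fraction(compute_s, network_wait_s):
    """Share of each step that GPUs spend waiting on the network."""
    return network_wait_s / (compute_s + network_wait_s)


STEPS = 1000       # hypothetical number of training steps
COMPUTE_S = 0.8    # hypothetical GPU compute time per step, in seconds

# Same job on a well-behaved network and on a congested one.
baseline = job_completion_time(STEPS, COMPUTE_S, network_wait_s=0.2)
congested = job_completion_time(STEPS, COMPUTE_S, network_wait_s=0.4)

print(f"baseline JCT:  {baseline:.0f} s, idle {idle_fraction(COMPUTE_S, 0.2):.0%}")
print(f"congested JCT: {congested:.0f} s, idle {idle_fraction(COMPUTE_S, 0.4):.0%}")
```

In this toy model, doubling the network wait from 0.2 s to 0.4 s per step lengthens the job by 20 percent and raises the share of time GPUs sit idle from 20 percent to about 33 percent.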

DriveNets and AI workloads

AI workloads require networking solutions that are scalable, flexible, and able to adapt quickly to changing demands while remaining simple to operate. Currently, hyperscalers and other workload architects use separate networking solutions for their AI workloads and front-end networks. DriveNets Network Cloud-AI allows hyperscalers to utilize a single, versatile, and standardized networking solution for both ends while preserving their independence.

The DriveNets Network Cloud-AI solution offers the highest performance for AI networking. It improves job completion time, even at high scale, by improving fabric performance and optimizing hardware resources. Additionally, it enables interconnectivity through Ethernet technology, streamlining operations and reducing costs. Lastly, it removes vendor lock-in and preserves the flexibility of vendor choice.

Additional AI Workload Resources

White Paper

- Utilizing Distributed Disaggregated Chassis (DDC) for Back-End AI Networking Fabric

- Resolving the AI Back-End Network Bottleneck with Network Cloud-AI

- Meeting the Challenges of the AI-Enabled Data Center: Reduce Job Completion Time in AI Clusters

- Analysis of Data Traffic Distribution Solutions
