
Advantages of Distributed Disaggregated Chassis (DDC) for AI networking

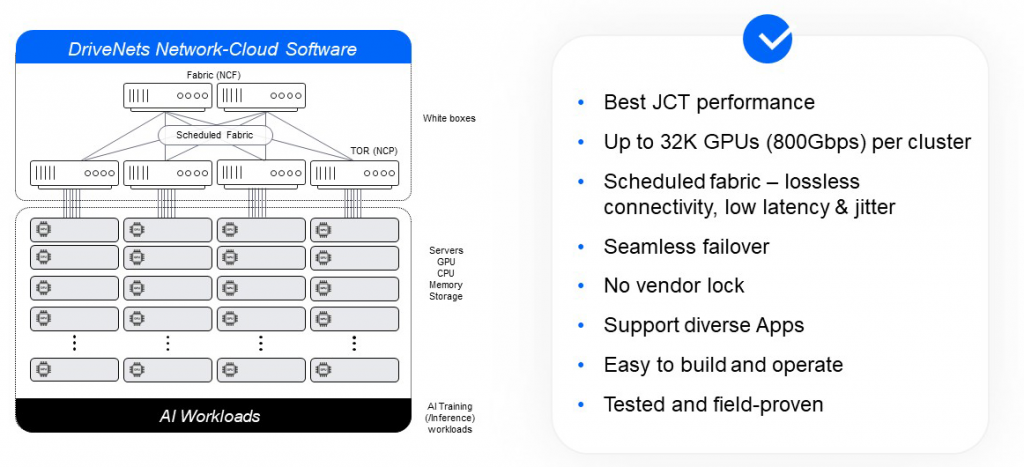

DDC offers the best option for AI back-end networking. It creates a single-Ethernet-hop architecture that is non-proprietary, flexible and scalable (up to 32,000 ports of 800Gbps). It delivers lossless network performance while keeping the easy-to-build Clos physical architecture, and this improves the efficiency of AI workload job completion time (JCT).

Scalability

DDC offers unparalleled scalability for AI clusters. Traditional networking approaches often run into scaling limits because they rely on metal chassis enclosures, with all their inherent constraints. In contrast, DDC decouples the control and data planes, so networking resources can be scaled in a distributed and independent way. This flexibility lets AI clusters expand seamlessly as demand for processing power and data throughput grows. A single-stage DDC Clos based on Broadcom’s Jericho2c+ and Ramon supports up to 7,000 200GE ports. With the new Jericho3-AI and Ramon-3 fabric, connectivity can scale to 32,000 GPUs, each at 800Gbps, in a single cluster.
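The two scale points above can be compared by their aggregate port capacity. The snippet below is simple arithmetic on the figures quoted in the paragraph. It counts raw front-panel port bandwidth only and ignores fabric overhead such as cell headers, so treat it as a rough sizing aid rather than a throughput guarantee.

```python
def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Total raw port capacity in Tbps (1 Tbps = 1,000 Gbps)."""
    return ports * gbps_per_port / 1_000


# Jericho2c+ / Ramon single-stage Clos: up to 7,000 ports at 200GE
j2c_plus = aggregate_tbps(7_000, 200)
# Jericho3-AI / Ramon-3 fabric: up to 32,000 GPUs at 800 Gbps each
j3_ai = aggregate_tbps(32_000, 800)

print(f"{j2c_plus:,.0f} Tbps vs {j3_ai:,.0f} Tbps ({j3_ai / j2c_plus:.1f}x)")
# 1,400 Tbps vs 25,600 Tbps (18.3x)
```

In other words, the Jericho3-AI generation raises aggregate cluster capacity from roughly 1.4 Pbps to roughly 25.6 Pbps.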

Performance at scale

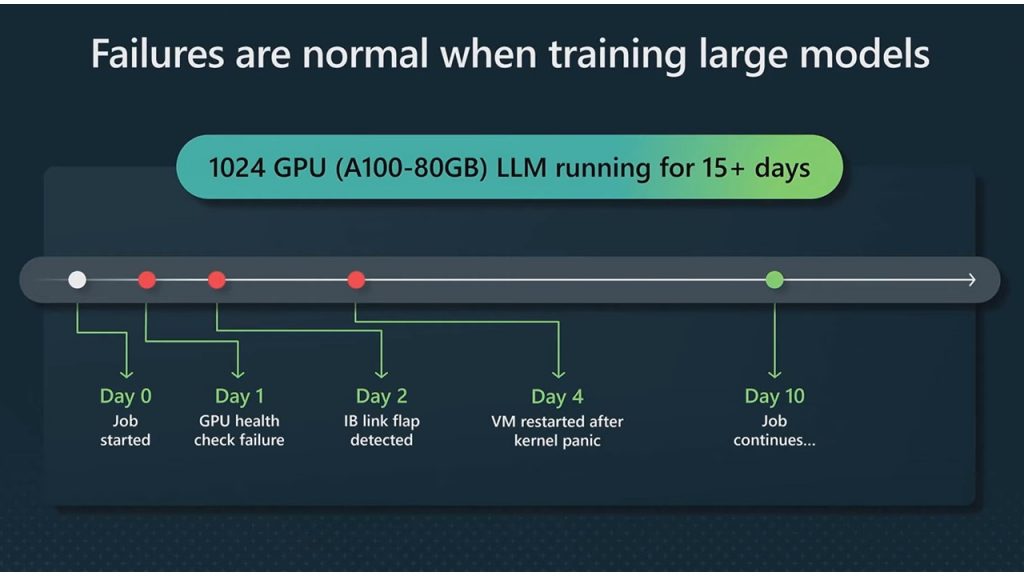

AI workloads demand high-speed data transfers, low-latency communication, and efficient resource utilization. In AI networks, a single failure or delayed flow can hold back the progress of every node. Mark Russinovich, CTO of Microsoft Azure, noted that in Azure’s Project Forge AI cluster, “failures are normal when training large models,” which degrades GPU utilization and leaves resources idle.

Source: Microsoft Build 2023 Inside Azure Innovations – AI infrastructure

To reduce the AI cluster’s network failure rate, fully unleash its potential, significantly increase GPU utilization, and shorten AI workload job completion time (JCT), the AI back-end network should behave as a single logical Ethernet switch. Such a back-end network needs fully scheduled, non-blocking load balancing and a high port radix, which is exactly what DDC is all about.

Optimized network performance for AI workloads

DDC mitigates network failures and optimizes network performance by distributing traffic across multiple switches that act as a single Ethernet node. Its pull mechanism guarantees that all traffic is fully scheduled and evenly load-balanced across the fabric, which makes DDC an ideal topology for carrying predictable, lossless traffic. DDC balances load at sub-packet granularity: when a packet enters the system, it is segmented into fabric cells, and these cells are distributed evenly across all links of the fabric white boxes. As a result, load balancing is independent of the number and size of flows and of their entropy. By using an end-to-end virtual output queuing (VOQ) mechanism, which eliminates head-of-line blocking, DDC significantly reduces congestion and latency. The outcome is faster job completion and better overall performance.
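The two mechanisms in this paragraph, per-cell spraying and VOQ with a pull (credit) scheduler, can be illustrated with a toy Python model. This is a sketch under stated assumptions, not Broadcom’s or DriveNets’ implementation: the 256-byte cell size is hypothetical, links are served in strict round-robin, and credits are counted in bytes. `segment` and `spray` show that per-cell load balancing spreads work evenly regardless of flow sizes, and the hypothetical `IngressVOQ` class shows how per-egress queues plus egress-issued credits keep a congested port from blocking traffic to other ports.

```python
from collections import deque

CELL_BYTES = 256  # hypothetical cell payload size; real fabric cell sizes differ


def segment(packet_len: int, cell_bytes: int = CELL_BYTES) -> int:
    """Number of fabric cells needed to carry one packet (ceiling division)."""
    return -(-packet_len // cell_bytes)


def spray(packets: list[int], num_links: int) -> list[int]:
    """Spread the cells of every packet round-robin across all fabric links.

    Returns the number of cells placed on each link. Assignment is per cell
    and ignores flow identity, so per-link counts differ by at most one.
    """
    per_link = [0] * num_links
    next_link = 0
    for pkt_len in packets:
        for _ in range(segment(pkt_len)):
            per_link[next_link] += 1
            next_link = (next_link + 1) % num_links
    return per_link


class IngressVOQ:
    """Toy ingress with one queue per egress port (virtual output queuing)."""

    def __init__(self, num_egress: int):
        self.queues = [deque() for _ in range(num_egress)]
        self.credits = [0] * num_egress  # bytes each egress has granted

    def enqueue(self, egress: int, packet_len: int) -> None:
        self.queues[egress].append(packet_len)

    def grant(self, egress: int, credit_bytes: int) -> None:
        # The egress "pulls" traffic by granting credit only when it has room.
        self.credits[egress] += credit_bytes

    def transmit(self) -> list[tuple[int, int]]:
        """Send every queued packet whose egress has granted enough credit."""
        sent = []
        for egress, queue in enumerate(self.queues):
            while queue and self.credits[egress] >= queue[0]:
                pkt = queue.popleft()
                self.credits[egress] -= pkt
                sent.append((egress, pkt))
        return sent


print(spray([1500, 64, 9000], num_links=8))  # [6, 6, 6, 5, 5, 5, 5, 5]

voq = IngressVOQ(num_egress=2)
voq.enqueue(0, 1500)  # destined to a congested egress that grants no credit
voq.enqueue(1, 1500)
voq.enqueue(1, 1500)
voq.grant(1, 3000)    # only egress 1 has room
print(voq.transmit())  # [(1, 1500), (1, 1500)]
```

The packet for egress 0 waits in its own queue instead of sitting at the head of a shared FIFO, so traffic to egress 1 is not held back. That separation is what removes head-of-line blocking in this model.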

Beyond preventing congestion, DDC also provides seamless failover. As a software-based solution, DDC offers nanosecond-level failover, real-time propagation of failure alerts, and hybrid centralized-distributed management. Failover keeps connectivity uninterrupted when a component fails, while the hybrid management model combines centralized control with the flexibility and robustness of distributed operation.
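A simple way to picture link failover on a cell-sprayed fabric is to remove a failed link from the set of links that cells are sprayed over. The sketch below assumes exactly that: the `failed` set stands in for links reported down by failure alerts, and the link names are hypothetical. It does not model detection time or cells already in flight when the failure occurs.

```python
def spray_with_failover(num_cells: int, links: list[str], failed: set[str]) -> dict[str, int]:
    """Round-robin cells over the fabric links that are still healthy.

    `failed` stands in for the links reported down by failure alerts;
    no cells are assigned to a link once it appears there.
    """
    healthy = [link for link in links if link not in failed]
    if not healthy:
        raise RuntimeError("no healthy fabric link left to reach the destination")
    counts = {link: 0 for link in healthy}
    for i in range(num_cells):
        counts[healthy[i % len(healthy)]] += 1
    return counts


links = ["link-0", "link-1", "link-2", "link-3"]  # hypothetical link names
print(spray_with_failover(100, links, failed=set()))
# {'link-0': 25, 'link-1': 25, 'link-2': 25, 'link-3': 25}
print(spray_with_failover(100, links, failed={"link-1"}))
# {'link-0': 34, 'link-2': 33, 'link-3': 33}
```

Because every cell is independently placed, losing a link only shrinks the spray set; the remaining links absorb the load evenly without any per-flow rehashing.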

DriveNets Network Cloud-AI and the Distributed Disaggregated Chassis (DDC)

By leveraging DDC, DriveNets has revolutionized the way AI clusters are built and managed. DriveNets Network Cloud-AI is an innovative AI networking solution designed to maximize the utilization of AI infrastructure and improve the performance of large-scale AI workloads, enabling organizations to use DDC to build highly scalable and efficient AI clusters. Built on DriveNets Network Cloud, which is deployed in the world’s largest networks, DriveNets Network Cloud-AI has been validated by leading hyperscalers in recent trials as the most cost-effective Ethernet solution for AI networking.

DDC is a game changer for AI networking

The advantages of the DDC networking architecture for AI are undeniable. With its scalability, performance at scale, and cost efficiency, DDC has emerged as a game changer in networking for AI clusters. The DriveNets Network Cloud-AI solution takes full advantage of DDC, enabling organizations to build and manage highly efficient AI clusters that meet the demands of today’s data-intensive workloads.

Download White Paper

Utilizing Distributed Disaggregated Chassis (DDC) for Back-End AI Networking Fabric