
In (very) simplified terms, you could say that the Clos topology is a crossbar of devices with predefined external and internal ports. One quite common implementation of the Clos topology is the one you find inside a chassis.

Well, accuracy tossed aside, when it comes to connecting AI clusters, these two options of Clos and chassis are clearly different. This blog post will run an interesting comparison between these two architectures when running AI applications.

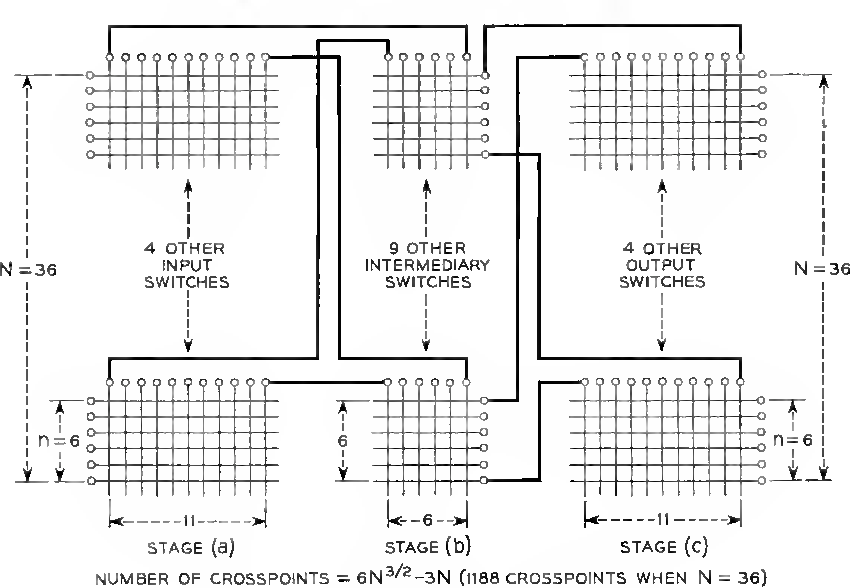

Figure #1: THE Clos Topology

(Source: “A Study of Non-Blocking Switching Networks,” Charles Clos)

What is Clos?

As mentioned in the intro, Clos describes a crossbar where you have an entry point into the crossbar, a crossing point, and an exit point after passing the crossbar.

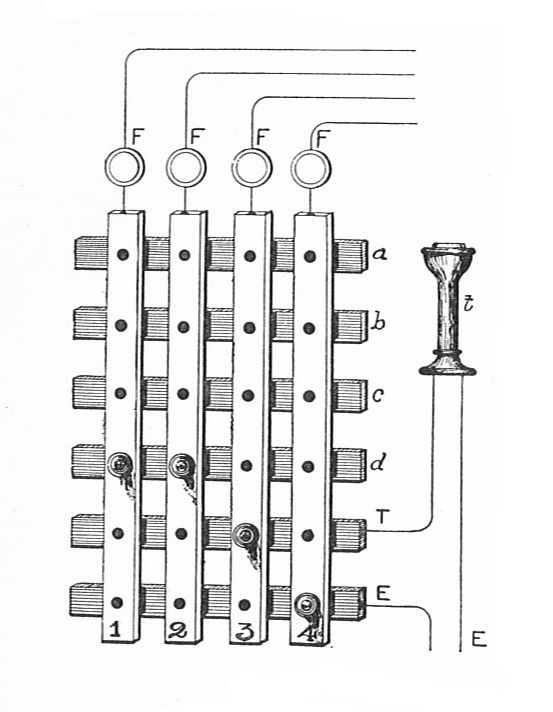

Figure #2: Crossbar Telephone Exchange

The pins mark the connection between ingress and egress

(Source: Wikipedia)

In AI terminology, a Clos is built to connect endpoint devices in a data center (built to run AI applications) where these endpoints are servers (network interface cards, or NICs, installed onto servers). The entry point into the Clos crossbar is the switch to which these servers are physically connected. Typically positioned at the top of the rack in which the servers are installed, it is (surprisingly) called a top-of-rack (ToR) switch. This ToR is then connected to several aggregation switches called spines or fabrics or tier-2 switches. It’s important to note that these spines connect to ToR switches only and to all the ToR switches in the network.

A flow of traffic entering the Clos crossbar will traverse a ToR, one of the spines, and another ToR before it reaches the destination server. This topology is considered non-blocking because the bisection bandwidth of the crossbar (the aggregate BW of all spines) is equal to the aggregate BW of all endpoint-facing ToR ports.
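The non-blocking condition can be checked with simple arithmetic. Below is a minimal sketch, assuming every ToR has the same number of endpoint-facing ports and spine-facing uplinks; the function names and the port counts and speeds in the usage note are hypothetical, chosen only for illustration.

```python
def oversubscription_ratio(tor_count, downlinks_per_tor, downlink_gbps,
                           uplinks_per_tor, uplink_gbps):
    """Ratio of endpoint-facing bandwidth to spine-facing bandwidth.

    A ratio of 1.0 or less means the fabric has enough capacity to be
    non-blocking; anything above 1.0 is oversubscribed.
    """
    endpoint_bw = tor_count * downlinks_per_tor * downlink_gbps
    spine_bw = tor_count * uplinks_per_tor * uplink_gbps
    return endpoint_bw / spine_bw


def is_non_blocking(tor_count, downlinks_per_tor, downlink_gbps,
                    uplinks_per_tor, uplink_gbps):
    """True when spine capacity covers all endpoint-facing capacity."""
    ratio = oversubscription_ratio(tor_count, downlinks_per_tor, downlink_gbps,
                                   uplinks_per_tor, uplink_gbps)
    return ratio <= 1.0
```

For example, 32 ToRs with 16 x 400G ports toward servers and 16 x 400G uplinks give a ratio of 1.0 (non-blocking), while doubling the server-facing ports without adding uplinks gives 2.0.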

In theory, if all endpoints transmit full BW (for simplicity, let’s assume a traffic pattern that allows this) for a period of time, the crossbar has enough capacity so that all endpoints will receive full BW at that same time. The assumption here is that the selected spine for each flow is the “right” spine, so that when the crossbar is fully loaded all spines are at exactly 100% utilization. Unfortunately, this is impossible… even in theory. When building a Clos topology for data center connectivity, it is never the case that the entire data center is running maximal bandwidth in an any-to-any traffic pattern.

One item I didn’t mention in the above example is that it refers to Ethernet technology. In Ethernet, the entry ToR needs to decide, for every flow, which spine will be used. This flow management is based on hashing algorithms that are supposed to be as close to random as possible.
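To see why hash-based spine selection rarely yields a perfect spread, here is a toy sketch. It assumes the ToR hashes a flow's 5-tuple with CRC32 and takes the result modulo the number of spines; real switches use vendor-specific hash functions and fields, and the addresses and ports below are made up.

```python
import zlib


def pick_spine(flow, num_spines):
    """Map a flow's 5-tuple to a spine index (illustrative hash only)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_spines


def spine_load(flows, num_spines):
    """Count how many flows land on each spine."""
    load = [0] * num_spines
    for flow in flows:
        load[pick_spine(flow, num_spines)] += 1
    return load


# Eight equal-size flows over eight spines: the ideal is one flow per spine.
flows = [("10.0.0.%d" % i, "10.0.1.%d" % i, 49152 + i, 4791, "UDP")
         for i in range(8)]
print(spine_load(flows, num_spines=8))
```

With as many flows as spines, a hash that behaves randomly will usually place two or more flows on one spine and leave another idle, which is why the crossbar rarely reaches its theoretical capacity.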

Another technology implementing the same topology is InfiniBand. In this case, the ToR’s decision for spine selection is based on additional information provided by an external brain with full visibility of the entire crossbar. This makes full utilization of the crossbar’s BW more achievable but also requires know-how regarding the types of flows running throughout the server cluster. Think about this as getting through a maze with a hovering camera giving you directions. While it gets you through the maze, you need a hovering camera giving you directions…
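The value of that global view can be illustrated with a small sketch. This is not how an InfiniBand fabric actually computes routes; it is a hypothetical greedy placement that assumes the central brain knows every flow's bandwidth demand in advance and always picks the least-loaded spine.

```python
def assign_flows(flow_gbps, num_spines):
    """Place flows on spines using full knowledge of demands and current load.

    flow_gbps maps a flow id to its expected bandwidth in Gbps.
    Returns (placement, load): the spine chosen per flow and per-spine load.
    """
    load = [0.0] * num_spines
    placement = {}
    # Largest flows first, each onto the spine that is currently least loaded.
    for flow_id, gbps in sorted(flow_gbps.items(), key=lambda kv: -kv[1]):
        spine = min(range(num_spines), key=lambda s: load[s])
        placement[flow_id] = spine
        load[spine] += gbps
    return placement, load
```

Given two 400G flows and two 200G flows over two spines, this placement ends with 600G on each spine. The catch is the one noted above: it only works if something knows the flows ahead of time.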

So when asked whether you use Clos or InfiniBand, the answer should be “yes.”

When asked whether you use Clos or a smart NIC-enabled network, the answer should be “yes.”

And when asked whether you use Clos or chassis, the answer should be, well, “yes.”

How are chassis built?

Here is a recipe:

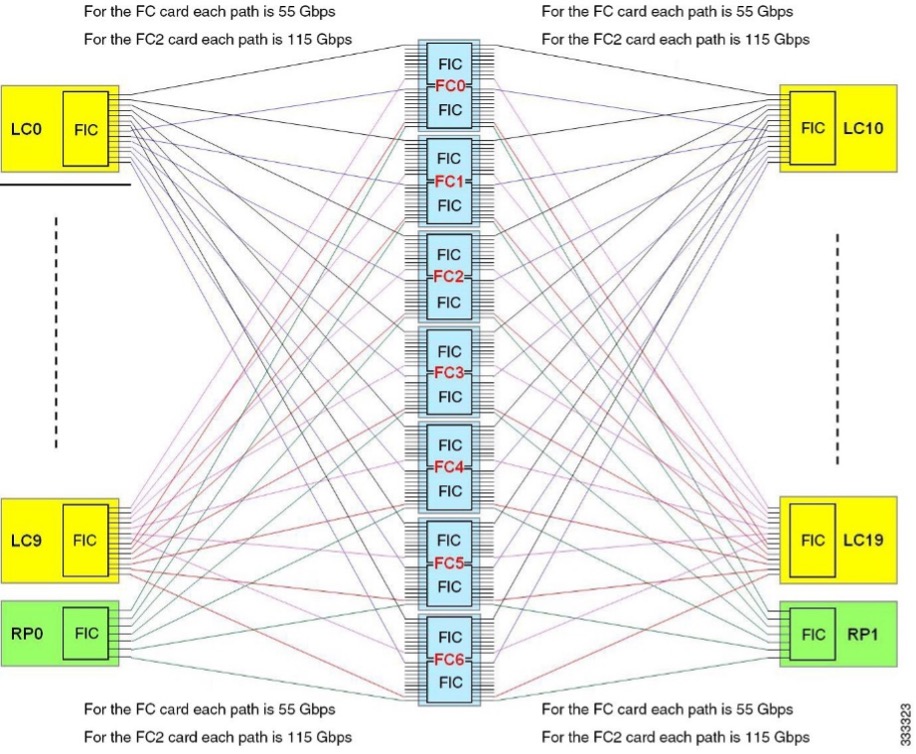

- One and usually two (for redundancy) routing engines that comprise the system’s brain

- Several power supplies and fans

- Line cards (as many as the chassis can hold) that connect to endpoints and spine cards

- Spine cards (or fabric cards) that only connect to line cards

- A physical crossbar that connects all line cards to all spine cards

- A metal enclosure to physically host all of these components

In four words, “chassis” is “Clos in a box.”

Figure #3: Internal Chassis Topology

(Source: 53bits)

Networking topologies are as good as their operational ease

So we’ve established that we are not really comparing the (similar) topologies of Clos and chassis. Now let’s look at the real attributes that make each model a preferred choice… starting with chassis.

Chassis is easy to handle, and it is very efficient when operating at its optimal working point. Single control plane. Single IP address. You can choose the size that fits your deployment and just fill it with line cards in a hot-insertion mode. There is only one vendor to point a finger at when anything goes wrong. And when run at full speed on all line cards and ports, its power consumption per gigabit of traffic is the best you will find.

The only problem is that this optimal working point is never reached. When a chassis hits ~70% utilization, a replacement is introduced because a traffic spike might get you above 100%. The rigid nature of the chassis doesn’t enable it to grow beyond its metal enclosure. You can start with the largest chassis possible, but this would mean that most of the time the chassis would be poorly utilized; when it reaches high utilization rates, you will be looking at a clutter of cabling spread across the entire data center all connected to this single device.

Chassis, however, grant the most predictable behavior due to built-in internal mechanisms, which can’t be implemented in generic networks. This is crucial for AI workloads.

Clos (the well-known Ethernet Clos in this case) shines exactly where chassis does not. It is composed of small (and typically lower cost) switches. It is very easy to grow and make changes in the network. Scaling is based on scale-out rather than scale-up. And you can work with multiple vendors when building your network, so you have better control of the supply chain (and eventually, price).

Performance, however, leaves something to be desired. A chassis takes flows and fragments them so they can be “sprayed” through all fabric elements (and reassembled on the receiving end); flow handling in a Clos is nowhere near as efficient as the internal mechanisms built into a chassis.
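The fragment, spray, and reassemble idea can be sketched in a few lines. This is a toy model only: it assumes fixed-size cells, round-robin spraying, and a sequence number per cell, whereas real chassis fabrics use vendor-specific cell formats and scheduling. The 64-byte cell size is an arbitrary choice.

```python
def spray(payload, num_fabrics, cell_size=64):
    """Cut a payload into cells and spread them round-robin over fabrics.

    Returns one list per fabric element, each holding (sequence, data) cells.
    """
    lanes = [[] for _ in range(num_fabrics)]
    for seq, offset in enumerate(range(0, len(payload), cell_size)):
        cell = (seq, payload[offset:offset + cell_size])
        lanes[seq % num_fabrics].append(cell)
    return lanes


def reassemble(lanes):
    """Merge cells from all fabrics back into the original byte order."""
    cells = [cell for lane in lanes for cell in lane]
    cells.sort(key=lambda cell: cell[0])
    return b"".join(data for _, data in cells)
```

Because every flow is spread over every fabric element, load is even regardless of how many flows there are or how large each one is, which is the property that per-flow hashing in a Clos cannot guarantee.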

In addition, every element in the Clos is also an element in the network. So in large deployments, you could end up with hundreds of devices to manage and a network between them that also needs to be managed, monitored, protected, troubleshot, and fixed, all of which should be done ASAP.

There is always a compromise when forced to choose. Service providers are more tilted toward the performance of a chassis, while data centers crave the scale of a Clos.

AI workloads running in a data center need both.

What is the Distributed Disaggregated Chassis (DDC) operational model?

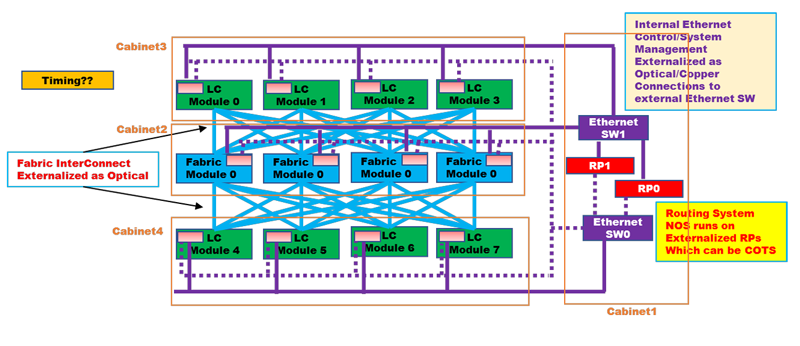

The Distributed Disaggregated Chassis (DDC) is in fact a chassis without a metal enclosure.

Figure #4: DDC Architecture

(Source: OCP Version 1 of DDC Spec)

DDC is built using the same components found in a chassis. In this case, it is not built into a single enclosure but distributed into several stand-alone devices that act as “line cards” and “spine (fabric) cards.” This disaggregation attribute enables these stand-alone devices to be purchased as standard white boxes, separate from the software that runs them.

So far this seems like the typical Clos, but DDC offers another element. This is the brain of the system (+1 for redundancy), which is where control over these white boxes happens and their mutual “knowledge” of the network is kept and shared. This means that the DDC behaves as a unified element in two key ways.

- First, it can be managed as a single network device.

- Second, it carries a single control plane and a very predictable behavior with traffic flows thanks to its segmentation and reassembly actions, same as in a chassis.

So DDC offers the best of both worlds. DDC has the Clos attributes of flexibility and scale, multi-vendor, and better customer control. It also offers the performance and behavior of a chassis, with a single point of management and a single control plane that promotes improved performance of the entire interconnect solution.

The best of Clos and chassis: The Distributed Disaggregated Chassis

We looked at the performance and management benefits of the chassis and found that if it weren’t so rigid, and could scale better and flex to tolerate changing demands, it would be a great fit for AI networking. A distributed model such as Clos is perfect for enabling these missing attributes, and vendor (un)lock (or customer control) is granted by using standard white box designs.

That would mean that an optimal solution would be a distributed and disaggregated form of a chassis. I guess the folks at OCP knew what they were doing when they named it DDC.
