
Here are my three main takeaways from the event:

The rise of AI inference

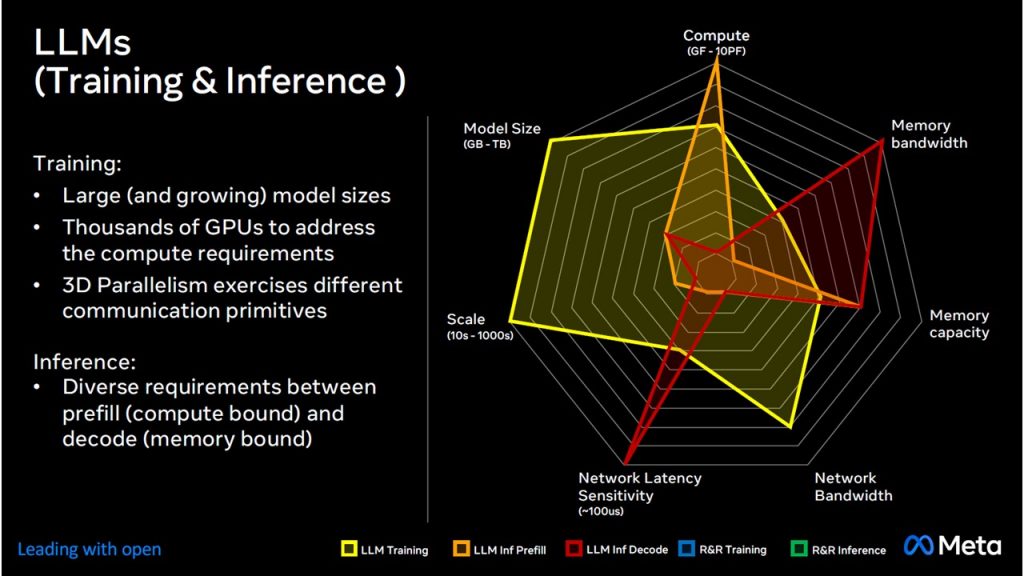

From an infrastructure perspective, most of the attention goes to AI training. While training is considered more demanding and complicated, inference is projected to grow faster. As Alexis Black Bjorlin, VP of Infrastructure at Meta, mentioned in her keynote, training volume rises with the number of researchers, while inference volume rises with the number of users.

Training and the different phases of inference each require a different mix of resources, as illustrated in this slide from Meta:

Source: Meta

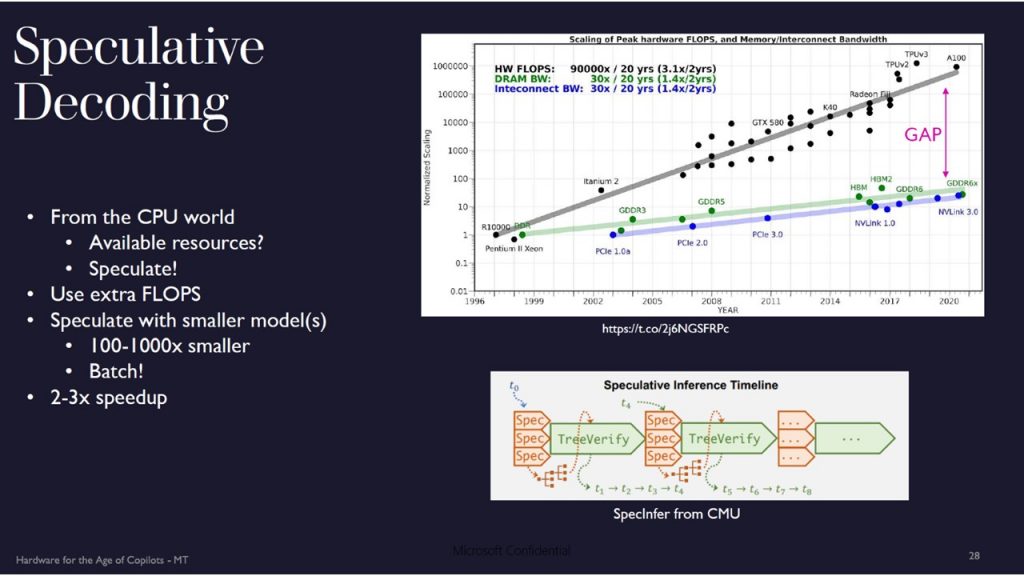

There is also more flexibility when it comes to inference. For instance, speculative decoding can be used to mitigate the well-known gap between compute requirements and infrastructure capabilities, as illustrated by Marc Tremblay, VP at the CTO Office of Microsoft:

Source: Microsoft
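To make the idea of speculative decoding more concrete, here is a minimal sketch in Python. It is not taken from the Microsoft talk; it assumes the simplest greedy-verification variant, and both `target_model` and `draft_model` are hypothetical toy functions standing in for a large and a small language model. A cheap draft model proposes several tokens, the expensive target model checks them, and the matching prefix is accepted in one step.

```python
from typing import List, Tuple


def target_model(prefix: List[int]) -> int:
    # Hypothetical "large" model: the next token is a simple function of the prefix.
    return sum(prefix) % 7


def draft_model(prefix: List[int]) -> int:
    # Hypothetical "small" model: agrees with the target except at every 4th position.
    guess = sum(prefix) % 7
    return (guess + 1) % 7 if len(prefix) % 4 == 0 else guess


def greedy_decode(prompt: List[int], n_new: int) -> List[int]:
    # Baseline: one target-model call per generated token.
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(target_model(tokens))
    return tokens


def speculative_decode(prompt: List[int], n_new: int, k: int = 4) -> Tuple[List[int], int]:
    tokens = list(prompt)
    verify_passes = 0
    while len(tokens) - len(prompt) < n_new:
        # 1. The draft model proposes k tokens autoregressively (cheap).
        draft: List[int] = []
        for _ in range(k):
            draft.append(draft_model(tokens + draft))

        # 2. The target model verifies the proposals. In a real system all
        #    positions are scored in a single batched forward pass; here we
        #    count that as one pass and loop only for clarity.
        verify_passes += 1
        accepted: List[int] = []
        for i in range(k):
            expected = target_model(tokens + draft[:i])
            if draft[i] == expected:
                accepted.append(draft[i])
            else:
                # First mismatch: keep the target's token and discard the rest.
                accepted.append(expected)
                break
        else:
            # All k proposals matched, so the same pass yields one bonus token.
            accepted.append(target_model(tokens + draft))
        tokens.extend(accepted)
    return tokens[: len(prompt) + n_new], verify_passes


if __name__ == "__main__":
    out, passes = speculative_decode([1, 2, 3], n_new=12)
    print(out == greedy_decode([1, 2, 3], 12), passes)
```

With greedy verification the output is identical to what the target model would have produced on its own; the gain is that the target model runs fewer sequential passes than the number of tokens generated. Production systems typically use a probabilistic acceptance rule instead of exact matching.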

Inference is also the leading function when it comes to AI at the edge.

Getting to the network edge

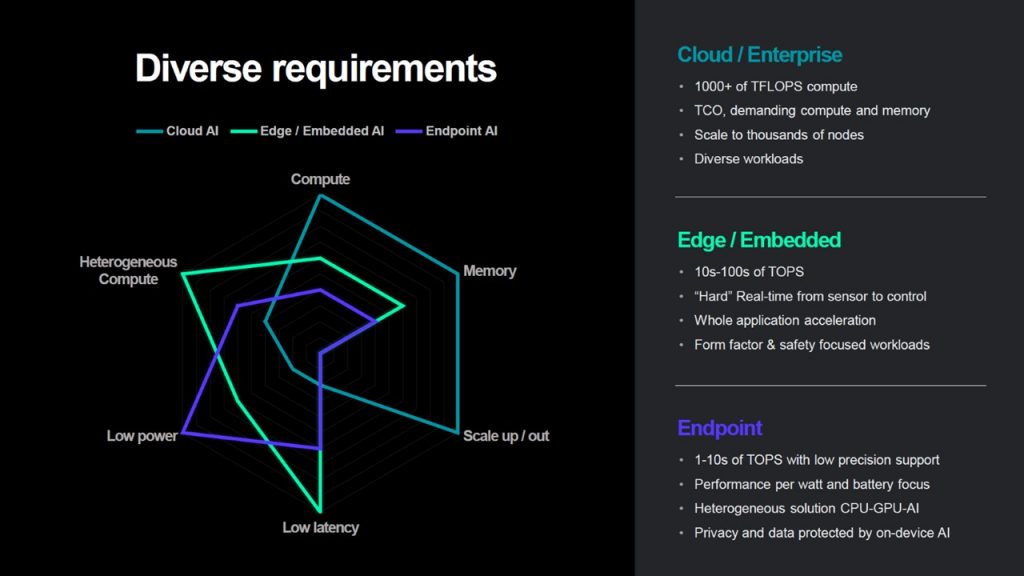

Speaking of edge, it was a very “busy” word during the event. It is widely recognized that AI and ML cannot stay in the data center; they are making their way to the edge and to endpoints. This is important for a faster and better inference experience, but also for real-time training “in the field.”

Vamsi Boppana, SVP of AI at AMD, captured the main differences – in terms of applications, methodologies, required resources and scale – between running AI/ML on the cloud or enterprise level, vs. similar implementations at the edge, or on endpoints.

Source: AMD

The underlying bottleneck for AI infrastructure

There is an underlying bottleneck for AI infrastructure that is gaining more and more attention. It has to do with anything that “feeds the beast.”

As mentioned in my previous post, Next Generation System Design for Maximizing ML Performance, this topic received massive attention. When thinking of AI infrastructure bottlenecks, you typically consider the ability to acquire and manufacture GPUs. But even if you, as someone who builds AI clusters, have those GPUs at hand, an underlying bottleneck may prevent you from fully utilizing those costly resources. Such bottlenecks may include an insufficient power supply, poorly designed cooling, or an underperforming networking fabric.

There’s an assertion that a high-performance AI back-end networking fabric (such as DriveNets Network Cloud-AI) can “pay for itself.” This is indeed the case, as it improves job completion time (JCT) by more than 10%, a gain that more than covers its cost. While cost is important, it is not the only consideration. As mentioned above, power and cooling are often the limiting factors when building AI infrastructure. As such, you must implement the best infrastructure mix to use fewer GPUs for the required task while taking power into consideration.
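The “pay for itself” reasoning can be sketched as a simple break-even check. The function below is only an illustration: the name `fabric_pays_for_itself` is hypothetical, the cost figures in the usage example are arbitrary placeholder units rather than real pricing, and the model assumes that a JCT reduction of r frees roughly the same fraction r of GPU time.

```python
from typing import Tuple


def fabric_pays_for_itself(
    gpu_cost: float, fabric_premium: float, jct_reduction: float
) -> Tuple[float, bool]:
    """Compare the GPU value recovered by a faster fabric with its extra cost.

    gpu_cost:       total cost of the GPUs in the cluster (any currency unit)
    fabric_premium: extra cost of the higher-performance fabric (same unit)
    jct_reduction:  fractional JCT improvement, e.g. 0.10 for 10%
    """
    if not 0.0 <= jct_reduction < 1.0:
        raise ValueError("jct_reduction must be in [0, 1)")
    # Assumption: finishing jobs r faster is worth about r of the GPU spend.
    recovered_value = gpu_cost * jct_reduction
    return recovered_value, recovered_value >= fabric_premium


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not real pricing.
    value, pays_off = fabric_pays_for_itself(
        gpu_cost=100.0, fabric_premium=8.0, jct_reduction=0.10
    )
    print(value, pays_off)
```

The same check can be extended with power and cooling budgets, since fewer GPU-hours for the same task also mean lower energy consumption.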
