So how did we solve the challenges we faced in creating large-scale clusters?

- x86 chip: runs the logical functions in both the server and the white box

- Switching ASIC in the white box: its services are exposed by the Data-plane Docker container

- Platform and board control mechanisms: services are exposed by the Platform Docker container, which manages the platform life cycle and peripherals (e.g., firmware updates and control of LEDs, fans, power supply units, etc.)

- Base network operating system: Linux, with an orchestration agent that allows us to control and orchestrate services on the platform
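The post does not describe how the orchestration agent works internally. A common pattern for this kind of agent is a reconciliation loop: compare the containers that should run on an element with the containers actually running, then compute the actions needed to converge. The sketch below assumes that pattern. The element roles come from this post, but the `Element` and `DESIRED` structures, the container names, and the version strings are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    name: str
    role: str                                     # e.g. "ncp" or "ncf"
    running: dict = field(default_factory=dict)   # container -> version


# Hypothetical desired state: which containers each element role should run.
DESIRED = {
    "ncp": {"dataplane": "1.0", "platform": "1.0"},
    "ncf": {"fabric": "1.0", "platform": "1.0"},
}


def reconcile(element: Element, desired: dict) -> list:
    """Return (action, container) pairs that move `element` to its desired state."""
    want = desired.get(element.role, {})
    actions = []
    for name, version in sorted(want.items()):
        current = element.running.get(name)
        if current is None:
            actions.append(("start", name))
        elif current != version:
            actions.append(("upgrade", name))
    for name in sorted(element.running):
        if name not in want:
            actions.append(("stop", name))
    return actions


def apply(element: Element, actions: list, desired: dict) -> None:
    """Simulate executing the actions by updating the running set."""
    want = desired.get(element.role, {})
    for action, name in actions:
        if action == "stop":
            del element.running[name]
        else:  # start or upgrade
            element.running[name] = want[name]
```

For a box running an outdated `platform` container and a stray `legacy` one, `reconcile` returns a start for `dataplane`, an upgrade for `platform`, and a stop for `legacy`. After `apply`, a second `reconcile` returns an empty list, which is the convergence property such an agent relies on.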

Handling all Control and Management Mechanisms

The controller function, the NCC, can run on a white box (in a standalone router) or on an x86 server (in a cluster router). It typically runs in a redundancy scheme, much like the route processor (RP) in a chassis-based system. The NCC handles all the control and management mechanisms of the router and, on top of the hardware abstraction layer, the base OS, and the element orchestration layer, includes the following components:

- Northbound interfaces: let the NCC interact with external systems through a single CLI (regardless of the number of white boxes), NETCONF for configuring the device using OpenConfig YANG models, SNMP, syslog, AAA mechanisms (TACACS, RADIUS), RESTful APIs for automation, and gRPC (OpenConfig gRPC Network Management Interface, gNMI) for performance management at scale.

- Configuration engine: where the router’s configuration is stored, supporting separate candidate and running configurations.

- Cluster manager: controls all the router elements (e.g. the health check mechanisms, activation of the failsafe mechanism on the white box, resetting it, etc.)

- Operations manager: handles high availability, interfaces, and statistics coming from each NCP independently.

- Routing engine: handles all the routing protocols (BGP, OSPF, IS-IS); the traffic engineering protocols (RSVP, segment routing); BGP link state, which exposes the topology to SDN controllers; PCEP, which enables tunnel control; the RIB, which stores all the routing information before routes are installed on the NCPs; and the FIB distribution mechanism, which handles the logic for installing the routing information on the NCPs.

- Data-path manager: centralizes management of the data plane. For example, it manages bundle interfaces that span multiple NCPs and decides when to bring down a bundle interface if a bundle member goes down.
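The bundle example shows why this decision has to be centralized: no single NCP sees every member of a bundle that spans several NCPs, so the data-path manager must combine the member states each NCP reports. Below is a minimal sketch, assuming a configurable minimum-links threshold (a common bundle/LACP option, not something this post specifies). `Member`, `bundle_state`, `forwarding_ncps`, and the interface names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Member:
    interface: str   # hypothetical member port name
    ncp: int         # NCP that hosts this port
    up: bool         # link/LACP state as reported by that NCP


def bundle_state(members: list, min_links: int = 1) -> str:
    """Combine per-NCP member reports into one bundle state."""
    active = sum(1 for m in members if m.up)
    return "up" if active >= min_links else "down"


def forwarding_ncps(members: list) -> list:
    """NCPs that still have at least one active member of the bundle."""
    return sorted({m.ncp for m in members if m.up})
```

With one member up on NCP 0 and one down on NCP 1, the bundle stays up under `min_links=1` but goes down under `min_links=2`. Neither NCP could reach that second conclusion from its own ports alone.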

NCP and NCF

- The chip SDK: the only place where the software interfaces with the Broadcom SDK. This allows easy integration with other chips, as well as with the board support packages (BSP) supplied with the hardware for controlling fan speed, optics, power supply units, IPMI, firmware upgrades, etc.

- NCP: the data-path manager, where services such as BFD, LACP, and access lists are handled locally at the NCP level. This keeps the load off the controller, avoids waiting on control-plane convergence, and optimizes the use of compute and ASIC resources at scale.

- NCF: The fabric manager provisions the RAMON chip.
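To illustrate what handling BFD locally buys, here is a minimal sketch of a liveness timer running on an NCP. In BFD asynchronous mode, a session is declared down when no packet arrives within the detection time, which is the detection multiplier times the agreed interval. The NCP only needs to send the controller the single down transition, not every keepalive. The class and field names are hypothetical, and the full RFC 5880 state machine is deliberately omitted.

```python
from dataclasses import dataclass


@dataclass
class BfdSession:
    """Simplified BFD-style liveness tracking, run locally on an NCP.

    Only the asynchronous-mode detection timer is modeled; the RFC 5880
    three-way handshake, poll sequences, and authentication are omitted.
    """
    peer: str
    interval_ms: int      # agreed packet interval with the peer
    detect_mult: int      # detection multiplier
    last_rx_ms: int = 0
    state: str = "up"

    @property
    def detection_time_ms(self) -> int:
        # Detection time = detection multiplier x agreed interval.
        return self.detect_mult * self.interval_ms

    def on_packet(self, now_ms: int) -> None:
        # Simplification: any received packet marks the session up.
        self.last_rx_ms = now_ms
        self.state = "up"

    def poll(self, now_ms: int) -> bool:
        """Return True only on the transition to down, i.e. the one event the
        NCP would need to report upstream."""
        if self.state == "up" and now_ms - self.last_rx_ms > self.detection_time_ms:
            self.state = "down"
            return True
        return False
```

With a 300 ms interval and a multiplier of 3, a session whose last packet arrived at t = 0 is declared down at the first poll after 900 ms. Later polls return False, so the controller sees exactly one event.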

Creating a Cluster

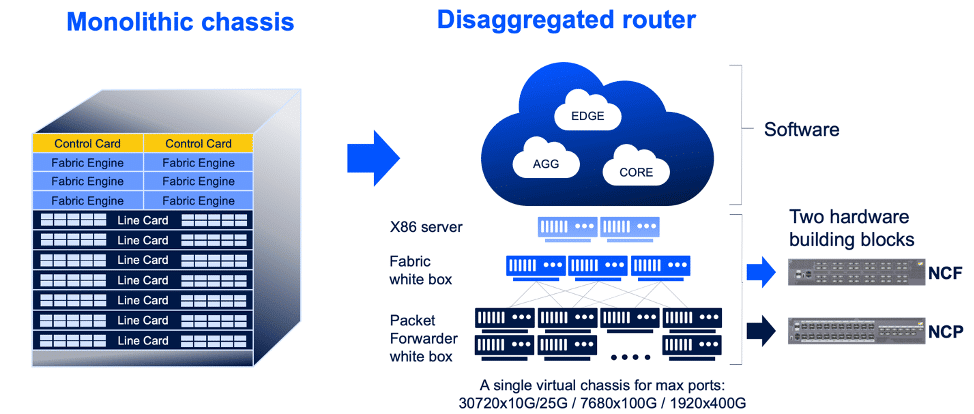

By creating a cluster, the distributed software architecture provides an end-to-end VoQ (virtual output queue) networking solution. The Network Cloud Packet Forwarder (NCP) is the equivalent of a line card. The Network Cloud Fabric (NCF) is the equivalent of the fabric, interconnecting all the NCPs. The data backplane is based on 400GbE links (active optical cables, active electrical cables, or standard optics and fiber, depending on the installation environment and the desired cost).
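The post does not detail how VoQ scheduling is implemented in the cluster. The idea behind it can be sketched as follows: each ingress NCP keeps a separate queue per egress destination and releases a packet into the fabric only after the egress side grants credit. Traffic for a congested port therefore waits at ingress instead of blocking traffic to other ports. This is a simplified illustration of credit-based VoQ in general, not the Broadcom or DNOS implementation; `IngressVoq` and its methods are hypothetical.

```python
from collections import defaultdict, deque


class IngressVoq:
    """Simplified virtual output queuing on an ingress NCP.

    One queue per (egress NCP, egress port). A packet is released toward the
    fabric only when the egress side has granted enough credit, so traffic for
    a congested output waits at ingress instead of blocking other outputs.
    """

    def __init__(self) -> None:
        self.queues = defaultdict(deque)   # (egress_ncp, port) -> queued packets
        self.credits = defaultdict(int)    # (egress_ncp, port) -> granted bytes

    def enqueue(self, egress_ncp: int, port: int, packet: bytes) -> None:
        self.queues[(egress_ncp, port)].append(packet)

    def grant(self, egress_ncp: int, port: int, nbytes: int) -> None:
        # Credit issued by the egress scheduler when the output can accept data.
        self.credits[(egress_ncp, port)] += nbytes

    def transmit(self) -> list:
        """Send every head-of-queue packet that is covered by credit."""
        sent = []
        for key, queue in self.queues.items():
            while queue and self.credits[key] >= len(queue[0]):
                packet = queue.popleft()
                self.credits[key] -= len(packet)
                sent.append((key, packet))
        return sent
```

If only the port on NCP 2 has granted credit, its packet is sent while the packet for NCP 1 stays queued at ingress. That packet leaves only once its own credit reaches the packet size.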

The Network Cloud Controller (NCC) is based on x86 servers and connects to each cluster device through its 100GbE interface connected to the 10GbE interfaces on the white boxes, creating the management and control backplane.

Once these boxes are installed and powered up, the cluster is orchestrated internally: each white box calls home to the controller, which triggers zero-touch provisioning to download the software packages to the boxes. This preserves the simplicity of the chassis-based router, where the software on the RP trickles down to all the line cards. Once provisioning is complete, the cluster acts as a single network element with a single CLI. From that CLI you can control and view every cluster element with the same simplicity as a chassis-based router (e.g., a virtual chassis view, an interfaces view, and a backplane view showing the interconnections between devices compared to the connection template).
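The two controller-side steps described here, admitting boxes that call home and comparing the actual cabling against the connection template, can be sketched as follows. This is an illustration under assumptions, not the DNOS provisioning protocol. The call-home message fields, the serial-number allow-list, the `"install <version>"` reply, and the endpoint naming (`ncp0:f0`) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CallHome:
    serial: str                 # hypothetical box identifier
    role: str                   # "ncp" or "ncf"
    sw_version: Optional[str]   # None if the box has no software installed yet


class Controller:
    def __init__(self, target_version: str, expected_serials: set) -> None:
        self.target_version = target_version
        self.expected_serials = expected_serials
        self.members: dict = {}  # serial -> role of admitted boxes

    def handle_call_home(self, msg: CallHome) -> str:
        """Admit a known box and tell it whether to install software."""
        if msg.serial not in self.expected_serials:
            return "reject"
        self.members[msg.serial] = msg.role
        if msg.sw_version != self.target_version:
            return f"install {self.target_version}"
        return "ok"


def link(a: str, b: str) -> frozenset:
    """An undirected cable between two endpoints, e.g. 'ncp0:f0'."""
    return frozenset((a, b))


def compare_to_template(expected: set, observed: set) -> dict:
    """Report cables missing from, or not present in, the connection template."""
    return {
        "missing": sorted(sorted(cable) for cable in expected - observed),
        "unexpected": sorted(sorted(cable) for cable in observed - expected),
    }
```

Modeling each cable as a `frozenset` makes the comparison independent of which end was discovered first. A fabric cable plugged into the wrong NCF port then appears once as missing and once as unexpected, which is the kind of discrepancy a backplane view would flag.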

It’s All About the Cluster!

The DNOS best-in-class architecture was built for scale, service growth, and orchestration. It completely reinvents the router architecture by basing it on the web-scale architecture of the cloud. DNOS is distributed software that enables resource optimization and simplification while still behaving like the familiar monolithic router.

Watch the full video of my presentation at Networking Field Day on February 12, 2020.
