
AI cluster performance and networking fabric

Connectivity plays a major role in helping AI clusters, whether used for training or inference, achieve their highest performance. The connectivity fabric can affect overall application job completion time (JCT), for better or for worse. Two main networking issues can significantly degrade performance (i.e., increase JCT):

- Inconsistent/unpredictable performance

- Slow network failure recovery

In this blog, we will discuss the issue of inconsistent/unpredictable performance.

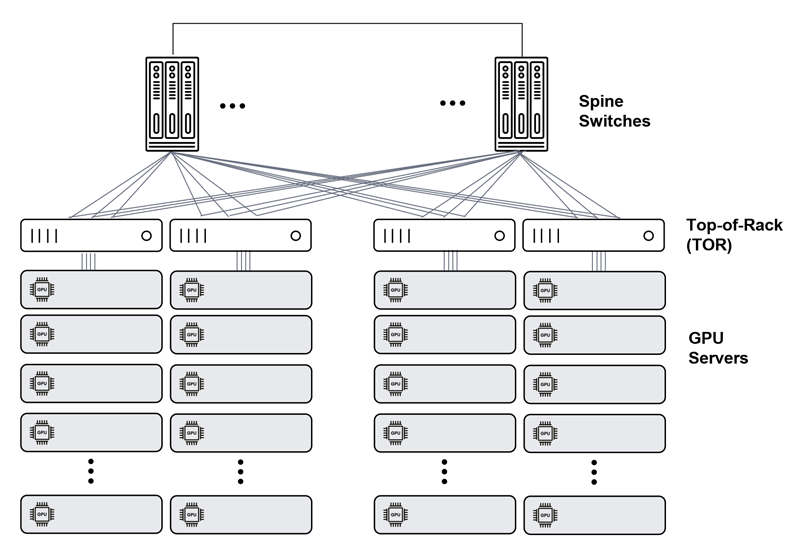

The Ethernet network’s inherent flaw at scale

When an AI fabric is built as an Ethernet network in a classic Clos-3 or Clos-5 architecture, the resulting fabric is inherently lossy. That is, as network utilization increases, the network starts to experience unpredictable and inconsistent performance, with increased latency, jitter (latency variation), and packet loss. This is due to congestion, head-of-line (HoL) blocking at egress ports, uneven distribution of traffic across the network, incast scenarios, and other architectural bottlenecks. The result is GPU idle cycles, as GPUs wait for data from the network before they can continue computing, and ultimately a higher JCT.
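Why does jitter, and not just average latency, drive up JCT? Here is a toy model, assuming a synchronous step in which communication does not overlap with compute and every GPU must wait for the slowest transfer before the next compute phase. The function names and numbers are made up for illustration.

```python
# Toy model (assumption): each synchronous step = compute phase + the SLOWEST
# transfer, because no GPU can proceed until all of its inputs have arrived.

def step_time_us(compute_us: float, transfer_us: list[float]) -> float:
    """Step time is compute time plus the longest (tail) transfer."""
    return compute_us + max(transfer_us)


def gpu_idle_fraction(compute_us: float, transfer_us: list[float]) -> float:
    """Fraction of the step during which GPUs sit idle waiting on the network."""
    step = step_time_us(compute_us, transfer_us)
    return (step - compute_us) / step


# Both transfer sets have the same mean (100 us); only the jitter differs.
steady = [100.0, 100.0, 100.0, 100.0]
jittery = [70.0, 80.0, 90.0, 160.0]

for name, transfers in (("steady", steady), ("jittery", jittery)):
    print(name, step_time_us(400.0, transfers),
          round(gpu_idle_fraction(400.0, transfers), 3))
# steady 500.0 0.2
# jittery 560.0 0.286
```

With the same average transfer time, the jittery case makes every step 12% longer, and over thousands of steps that difference accumulates directly into JCT.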

Clos AI fabric

This is, as mentioned, an inherent quality of an Ethernet network. It can be mitigated to some extent with techniques such as advanced telemetry, but these only shift the load level at which severe performance issues appear; they do not remove them. In any case, in a high-utilization environment such as the AI cluster back-end fabric, this type of network is doomed to underperform.
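As a rough intuition for why high utilization hurts, the textbook M/M/1 queue gives a mean time in system of W = 1/(μ − λ), or 1/(1 − ρ) in units of the service time, where ρ = λ/μ is utilization. This is an analogy only, assuming Poisson arrivals and a single queue; it is not a model of any real Ethernet fabric.

```python
# Textbook M/M/1 queue, used here purely as an analogy (assumption):
# normalized mean delay = 1 / (1 - rho), where rho is utilization.

def mm1_normalized_delay(rho: float) -> float:
    """Mean time in system, in units of the per-packet service time."""
    if not 0.0 <= rho < 1.0:
        raise ValueError("utilization must be in [0, 1) for a stable queue")
    return 1.0 / (1.0 - rho)


for rho in (0.5, 0.8, 0.9, 0.95, 0.99):
    print(f"utilization {rho:.2f}: delay x{mm1_normalized_delay(rho):.1f}")
```

Delay doubles at 50% utilization and grows tenfold at 90%. In this analogy, mitigation techniques move where the steep part of the curve begins rather than flattening it.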

The need for predictable, lossless Ethernet fabric

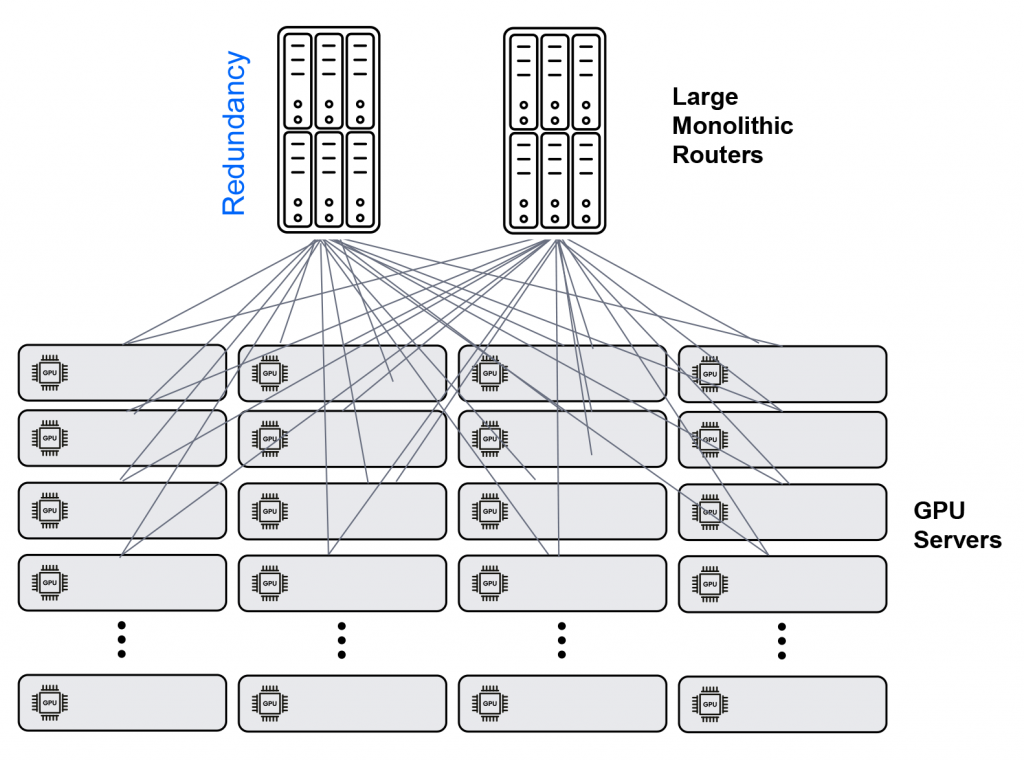

Now that we have established that an Ethernet network is doomed to underperform, and that AI cluster computation performance suffers as a result, what about a single Ethernet node?

Here the issue is not performance. A single Ethernet node (be it a pizza box or a chassis) is typically non-blocking and performs well, even under high utilization. The issue is scale. AI clusters need thousands of network ports, and recent buildouts need tens of thousands (take Google's 26K-GPU A3 AI supercomputer, for instance), which makes it impossible to build this fabric from a single chassis. No such chassis exists today, and even if one did, it would be practically impossible to attach hundreds of racks directly to it without intermediate ToR switches.

Large chassis

A predictable, lossless connectivity fabric for the AI cluster

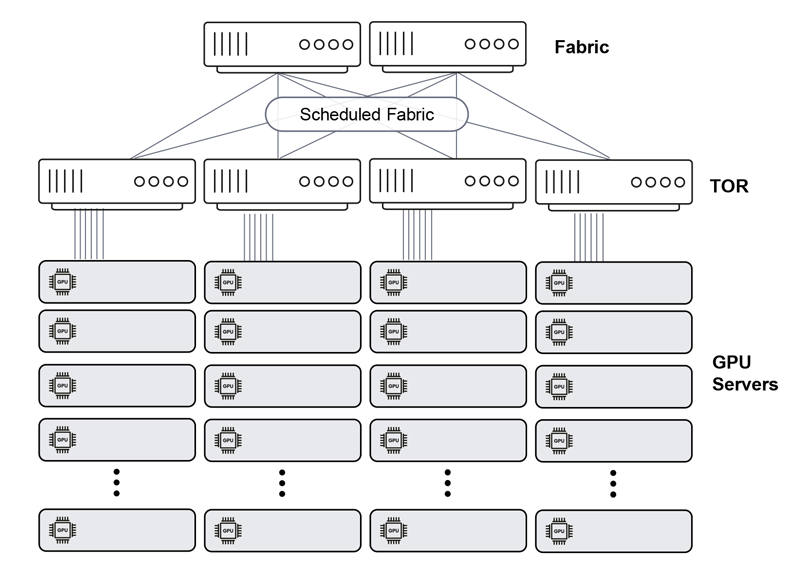

With Distributed Disaggregated Chassis (DDC), we distribute the chassis elements, specifically the line card and fabric functions, across standalone boxes that are connected in a Clos-3 architecture.
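The port arithmetic of such a distributed chassis can be sketched as follows, assuming each line-card box runs exactly one fabric link to every fabric box, so a fabric box's port count caps how many line-card boxes can join. The class name and all parameter values are hypothetical; the post gives no box specifications.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DdcSpec:
    """Hypothetical box parameters for illustration only."""
    host_ports_per_line_card: int    # Ethernet ports facing servers/GPUs
    fabric_links_per_line_card: int  # one link to each fabric box
    fabric_box_radix: int            # fabric ports per fabric box


def max_host_ports(spec: DdcSpec) -> int:
    # Each fabric box dedicates one port to each line-card box, so its radix
    # limits the number of line-card boxes in the distributed chassis.
    return spec.fabric_box_radix * spec.host_ports_per_line_card


def boxes_needed(spec: DdcSpec, ports_required: int) -> tuple[int, int]:
    """Return (line-card boxes, fabric boxes) for a target host-port count."""
    if ports_required > max_host_ports(spec):
        raise ValueError("exceeds what one layer of fabric boxes can connect")
    line_cards = math.ceil(ports_required / spec.host_ports_per_line_card)
    fabric_boxes = spec.fabric_links_per_line_card  # one fabric box per link
    return line_cards, fabric_boxes


spec = DdcSpec(host_ports_per_line_card=32,
               fabric_links_per_line_card=32,
               fabric_box_radix=64)
print(max_host_ports(spec))      # 2048
print(boxes_needed(spec, 1000))  # (32, 32)
```

The point is that capacity grows by adding boxes rather than by building an ever-larger single chassis.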

But now we're back to the problematic Clos architecture. So, do we have a lossy network again? Does ATM ring a bell? Not the one with the cash; the one with the cells. (If so, you are probably as old as I am.)

Anyhow, asynchronous transfer mode (ATM) was (and still is?) a networking technology that utilized cells (instead of packets or frames) at a constant size (53 bytes, if you’re nostalgic in that way) to create a scheduled, lossless high capacity/high performance fabric.
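To make the fixed-size idea concrete, here is a small sketch of segmenting a frame into ATM cells, where each 53-byte cell carries a 5-byte header and 48 bytes of payload. It deliberately ignores the AAL5 trailer and padding rules, so it is a simplification, and the post does not say what cell size DDC uses.

```python
import math

ATM_CELL_BYTES = 53
ATM_HEADER_BYTES = 5
ATM_PAYLOAD_BYTES = ATM_CELL_BYTES - ATM_HEADER_BYTES  # 48 payload bytes per cell


def cells_for(frame_bytes: int) -> int:
    """Number of fixed-size cells needed to carry a frame (last cell is padded)."""
    return math.ceil(frame_bytes / ATM_PAYLOAD_BYTES)


def wire_efficiency(frame_bytes: int) -> float:
    """Share of transmitted bytes that is actual frame data."""
    return frame_bytes / (cells_for(frame_bytes) * ATM_CELL_BYTES)


for size in (64, 1500):
    print(size, cells_for(size), f"{wire_efficiency(size):.1%}")
```

Because every cell occupies a link for the same amount of time regardless of which frame it came from, scheduling becomes a matter of counting cells; the cost is header and padding overhead.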

In DDC, cells are back. The interconnect between the various white boxes is based on a cell-based protocol (a modern version of ATM, if you will) that creates a scheduled fabric that provides a lossless, predictable connectivity fabric for the AI cluster.

DDC fabric

How does the Distributed Disaggregated Chassis (DDC) work?

There are two main mechanisms that enable the fabric to provide the required QoS for an AI cluster. It’s true that these mechanisms are used in chassis-based switches, but the big news here is that they are utilized outside of a single box.

- Equal, granular traffic distribution: "Cutting" the ingress frames into equal-size cells enables a "spraying" mechanism that distributes the cells across the entire fabric. This yields a perfectly balanced fabric that does not suffer from the uneven load distribution caused by a mix of mice and elephant flows.

- Virtual output queues (VoQ) on ingress ports: Normally, ingress traffic is forwarded, according to the switching information, to the egress port, where it is queued. However, this causes HoL blocking, which results in latency, jitter, and possibly packet drops. With VoQ, each ingress port holds a separate (virtual output) queue for each relevant egress port, containing all the packets destined for that port. A packet is sent through the fabric only after the egress port signals that it has the resources to handle it. As a result, no HoL blocking or buffer overflow occurs at the egress ports.
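Both mechanisms can be sketched in a few lines. This is a minimal illustration, not DriveNets' implementation: the cell size (`CELL_BYTES`), the flow sizes, and the credit-grant interface (`transmit(grants)`) are hypothetical, and per-flow CRC32 hashing stands in for a generic ECMP baseline. Grants here model the egress port's signal that it has resources; the post does not describe the actual protocol.

```python
import zlib
from collections import deque

CELL_BYTES = 256  # hypothetical cell payload size; not specified in the post


def cells_in(frame_bytes: int) -> int:
    """Number of equal-size cells needed to carry a frame (ceiling division)."""
    return -(-frame_bytes // CELL_BYTES)


def per_flow_hashing(flows: dict[str, int], n_links: int) -> list[int]:
    """Baseline: each flow is pinned to ONE link by a hash (ECMP-style)."""
    load = [0] * n_links
    for flow_id, frame_bytes in flows.items():
        link = zlib.crc32(flow_id.encode()) % n_links
        load[link] += cells_in(frame_bytes)
    return load


def spray(flows: dict[str, int], n_links: int) -> list[int]:
    """Cell spraying: cells from all flows are dealt round-robin over all links."""
    load = [0] * n_links
    next_link = 0
    for frame_bytes in flows.values():
        for _ in range(cells_in(frame_bytes)):
            load[next_link] += 1
            next_link = (next_link + 1) % n_links
    return load


class IngressPort:
    """Ingress port holding one virtual output queue (VoQ) per egress port."""

    def __init__(self, egress_ports: list[str]) -> None:
        self.voqs = {port: deque() for port in egress_ports}

    def enqueue(self, egress: str, packet: str) -> None:
        self.voqs[egress].append(packet)

    def transmit(self, grants: dict[str, int]) -> list[tuple[str, str]]:
        """Send packets only to egress ports that granted credit.

        Packets for a congested egress port wait in their own VoQ, so they
        never block packets headed to other, uncongested egress ports.
        """
        sent = []
        for egress, credits in grants.items():
            queue = self.voqs[egress]
            while credits > 0 and queue:
                sent.append((egress, queue.popleft()))
                credits -= 1
        return sent


flows = {"elephant": 1_000_000, "mouse-1": 2_000, "mouse-2": 2_000, "mouse-3": 2_000}
print(per_flow_hashing(flows, 4))  # one link carries all 3907 elephant cells
print(spray(flows, 4))             # [983, 983, 983, 982]

port = IngressPort(["A", "B"])
port.enqueue("B", "p1")  # arrives first, but egress B is congested
port.enqueue("A", "p2")
print(port.transmit({"A": 1, "B": 0}))  # [('A', 'p2')]: p2 is not stuck behind p1
```

With per-flow hashing, whichever link the elephant flow lands on carries nearly the entire load, while spraying keeps every link within one cell of the others. In the VoQ part, a single shared FIFO would have left `p2` waiting behind `p1`.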

Predictable, lossless interconnect fabric: perfect for large AI clusters

AI has stirred up a battle between Ethernet and InfiniBand. Although Ethernet is the world's most popular networking technology, as explained above, it has a few performance issues that must be resolved before it can be a good fit for an AI cluster interconnect fabric. While some vendors address these issues by mitigating the lossy nature of Ethernet, DriveNets Network Cloud-AI does so by practically eliminating it. A predictable, lossless interconnect fabric is ultimately the perfect fit for large AI clusters.

White paper: Utilizing Distributed Disaggregated Chassis (DDC) for Back-End AI Networking Fabric