
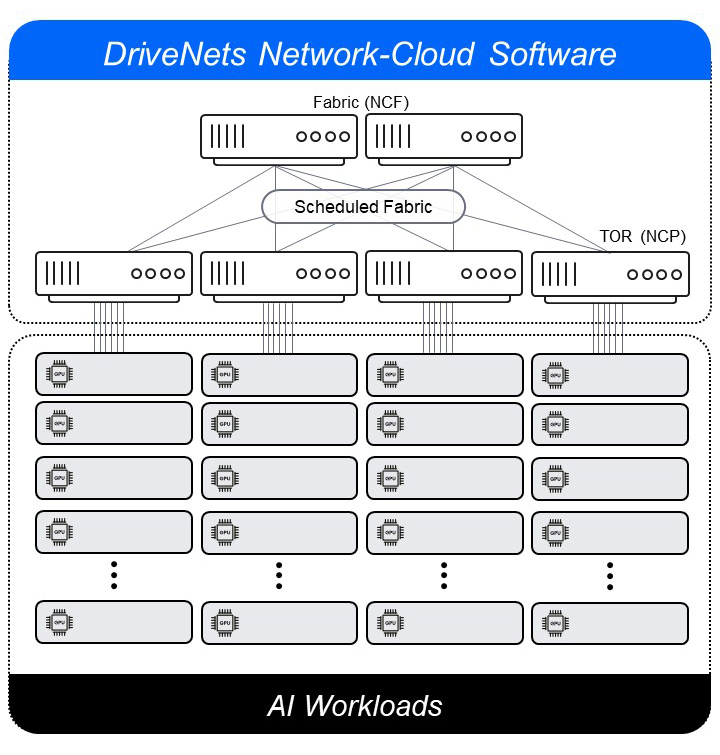

At DriveNets, we remain committed to delivering precisely that through our Ethernet-based solution. Our Network Cloud-AI, introduced in May, is well equipped to handle the ever-growing demands of AI workloads and deliver high performance at scale. We achieved this through our Distributed Disaggregated Chassis (DDC) architecture and white boxes based on Broadcom’s Jericho2C+.

Today, we are strengthening our commitment to high performance at scale: white boxes based on Broadcom’s previously introduced Jericho3AI and Ramon 3 are now available for order.

Jericho3AI-based white boxes meet the unique demands of AI networking

DriveNets’ newly available white boxes, based on Broadcom’s latest silicon, raise the standard for AI clusters. AI workloads, and especially generative AI workloads, have several networking requirements that differ from those of other workloads. These differences stem from the nature of AI workloads, which are often highly compute-intensive and require the rapid exchange of large, long-lived flows between nodes.

The new white boxes are purpose-built to meet these requirements, enabling AI infrastructure builders to harness top-notch performance and exclusive features tailored for AI workloads.

The main features of network cloud fabric (NCF) for AI:

- Based on Accton’s Ramon3 System

- 51.2 Tbps with high-radix 128 x 800G OSFP fabric ports

- Open Ethernet ecosystem

- Efficient cell-based switching

- Low power, low latency

The main features of network cloud packet forwarder (NCP) for AI:

- Based on Accton’s Jericho3 System

- 14.4 Tbps: 18 x 800G OSFP network interface ports and 20 x 800G OSFP fabric interface ports

- Supports 400G GPU clusters, and future 800G GPU clusters via software upgrade

- Open Ethernet ecosystem

- Efficient cell-based switching

- Low power, low latency
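The NCP port counts above can be sanity-checked with a little arithmetic. The sketch below is only an illustration: the constant and function names are made up for this example, and the fabric-to-network ratio it prints is a derived figure, not a number published by DriveNets, Accton, or Broadcom.

```python
# Port counts and speed are taken from the NCP feature list above.
PORT_SPEED_GBPS = 800   # each OSFP port
NETWORK_PORTS = 18      # GPU-facing (network interface) ports per NCP
FABRIC_PORTS = 20       # fabric-facing ports per NCP


def aggregate_tbps(ports: int, speed_gbps: int = PORT_SPEED_GBPS) -> float:
    """Total bandwidth of a group of identical ports, in Tbps."""
    return ports * speed_gbps / 1000


network_tbps = aggregate_tbps(NETWORK_PORTS)
fabric_tbps = aggregate_tbps(FABRIC_PORTS)

# Derived ratio (an assumption of this sketch, not a vendor specification):
# how much fabric-side capacity exists per unit of network-side capacity.
fabric_headroom = fabric_tbps / network_tbps

print(f"network side: {network_tbps:.1f} Tbps")
print(f"fabric side:  {fabric_tbps:.1f} Tbps")
print(f"fabric/network ratio: {fabric_headroom:.2f}")
```

The network-side total matches the 14.4 Tbps quoted in the list, and the two extra fabric ports give the fabric side slightly more capacity than the network side.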

Accton’s new Jericho3 and Ramon3 VoQ Fabric Systems, presented at the DriveNets booth at the 2023 OCP Summit

DriveNets’ Network Cloud-AI software can harness the cutting-edge white boxes’ power, enabling hyperscale companies and other AI infrastructure builders to establish a more open and versatile AI infrastructure.

This innovative solution offers:

- Scalability: The Jericho3AI fabric can scale up to 32K GPUs (800Gbps) per cluster.

- Optimal Network Utilization: Distributes traffic across all fabric links, ensuring efficient use at all times.

- Fully Scheduled Fabric: This guarantees congestion-free operation with end-to-end traffic scheduling, eliminating flow collisions and jitter.

- Zero-Impact Failover: Achieves sub-10ns automatic path convergence, which helps shorten Job Completion Time (JCT).
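To put the 32K-GPU figure in perspective, here is a rough sizing sketch. It rests on assumptions that are not stated in this post: "32K" is read as 32,768, every GPU gets one dedicated 800G network port, and each NCP contributes the 18 network interface ports listed earlier. The function name is hypothetical, and real deployments may use different port layouts.

```python
import math


def ncps_needed(gpus: int, network_ports_per_ncp: int = 18,
                gpus_per_port: int = 1) -> int:
    """Minimum number of NCPs whose network ports can attach `gpus` GPUs."""
    return math.ceil(gpus / (network_ports_per_ncp * gpus_per_port))


CLUSTER_GPUS = 32 * 1024     # assumption: "32K" means 32,768
GPU_PORT_SPEED_GBPS = 800    # per-GPU speed quoted in the Scalability bullet

ncp_count = ncps_needed(CLUSTER_GPUS)
total_network_tbps = CLUSTER_GPUS * GPU_PORT_SPEED_GBPS / 1000

print(f"NCPs needed: {ncp_count}")
print(f"aggregate GPU-facing bandwidth: {total_network_tbps:.1f} Tbps")
```

Under these assumptions the cluster needs on the order of 1,800 NCPs, which gives a feel for the size of fabric that the scheduling and failover features above have to cover.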

Leverage the benefits of DDC in AI networking with Jericho3AI

At DriveNets, we position ourselves as a leader in innovative networking, and as such we are committed to delivering the best networking solutions. We already provide DDC networking infrastructure solutions for all network use cases, from core to edge, and we power the world’s largest software-based network. Leveraging our extensive DDC experience, we’ve introduced a new, open, multi-vendor AI infrastructure that effectively addresses the primary challenges and trade-offs associated with AI workloads.

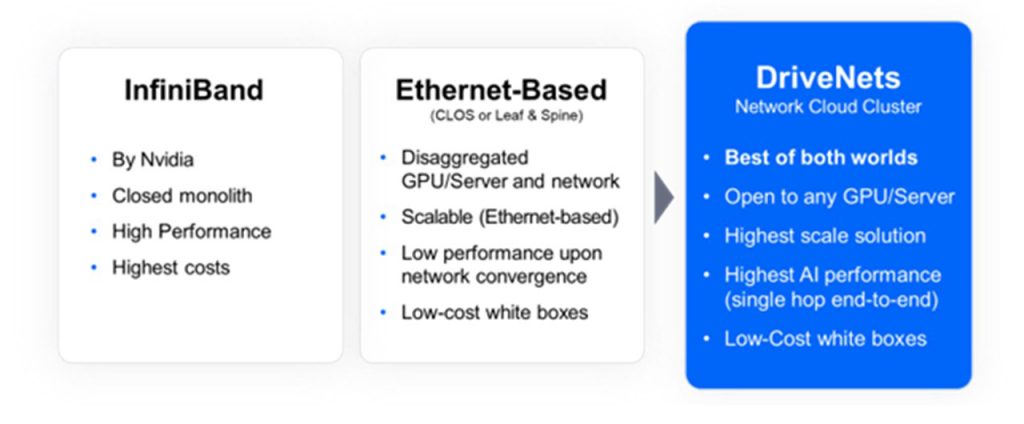

Proprietary options like Nvidia’s InfiniBand lack interoperability and result in vendor lock-in, while traditional Ethernet architectures struggle to scale for high-performance AI workloads. DriveNets Network Cloud-AI presents the optimal solution. It improves JCT, enhances resource utilization, enables interoperability via standard Ethernet, and offers vendor choice.
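One commonly cited reason traditional Ethernet struggles with a small number of large, long-lived flows is per-flow load balancing: each flow is pinned to one link, so flows can collide while other links sit idle. The toy model below contrasts that with spraying fixed-size cells across all links, which is the idea behind the cell-based fabric described earlier. It is a simplified sketch, not DriveNets’ or Broadcom’s implementation; the function names, the CRC32 hash, and the flow sizes are all illustrative assumptions.

```python
import zlib


def per_flow_hashing(flow_sizes, n_links):
    """Pin every flow to a single link chosen by a hash of its ID."""
    load = [0] * n_links
    for flow_id, size in enumerate(flow_sizes):
        link = zlib.crc32(str(flow_id).encode()) % n_links
        load[link] += size
    return load


def cell_spraying(flow_sizes, n_links):
    """Cut all flows into unit cells and deal them round-robin over the links."""
    load = [0] * n_links
    next_link = 0
    for size in flow_sizes:
        for _ in range(size):
            load[next_link] += 1
            next_link = (next_link + 1) % n_links
    return load


flows = [1000] * 6   # six equal, long-lived flows (arbitrary units)
links = 4

hashed = per_flow_hashing(flows, links)
sprayed = cell_spraying(flows, links)

print("per-flow hashing:", hashed, "busiest link:", max(hashed))
print("cell spraying:   ", sprayed, "busiest link:", max(sprayed))
```

With six equal flows on four links, per-flow placement must put at least two flows on some link however the hash falls, so its busiest link carries at least 2000 units. Spraying spreads the same traffic as 1500 units per link. The busiest link is what gates completion in this model, which is why even distribution matters for JCT.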

DriveNets Network Cloud-AI – The best of both worlds

With the new hardware, AI infrastructure builders can utilize DriveNets Network Cloud-AI to fully leverage the benefits of the DDC architecture in AI networking and the capabilities of Jericho3AI.

DriveNets Network Cloud-AI

Here are the key advantages of DriveNets Network Cloud-AI for hyperscale companies and other AI infrastructure builders:

- Superior performance and efficiency:

DriveNets Network Cloud-AI software can now scale up to 32K GPUs in a single cluster, providing reliable connectivity, low latency, and minimal jitter.

- Open and flexible AI networking solution:

DriveNets’ Network Cloud-AI, based on the Distributed Disaggregated Chassis architecture, is hardware-agnostic, enabling AI infrastructure builders to use diverse GPUs, ODMs and ASICs.

- Reduced cost and simpler operations:

DriveNets Network Cloud cuts AI networking costs, improving operations and reducing cluster expenses by up to 10%. Moreover, DriveNets provides a globally recognized Ethernet-based solution that, unlike InfiniBand, demands no specialized skills or expensive resources.

- Proven AI networking solution:

High-scale live network deployments and successful PoCs with hyperscalers demonstrate the reliability and performance of DriveNets’ solution. Network Cloud-AI can provide a risk-free path for adopting a high-performance, standardized Ethernet AI networking solution.

AI Networking with Broadcom Jericho3AI and Ramon 3 based white boxes

The availability of Accton white boxes powered by Broadcom’s Jericho3AI and Ramon 3 is the result of a recent joint effort and represents another significant milestone in DriveNets’ ongoing commitment to providing hyperscalers and other AI infrastructure builders with a networking solution that eliminates trade-offs.

DriveNets Network Cloud-AI software leverages the cutting-edge capabilities of the new hardware to provide the ultimate AI performance at scale. It improves JCT at scales of up to 32K GPUs in one cluster, enhances resource utilization, uses standard Ethernet for seamless interoperability, and provides freedom of choice among vendors.
