In a way, building a compute array has the same logic. Just pick the strongest compute building block and connect it to a network, and then just add more of them until you get the strongest compute engine in the world. If you get the joke, you can read on till the end of this blog post; if you don’t get the joke, you must read on till the end of this post.

Why do we have congestion in AI workloads?

A compute array runs a distributed application. The workload processed by that application is wisely divided among multiple machines. This is typically done by a job scheduler, usually an automated tool; the job scheduler should be aware of the array’s state at any given moment, as well as the capabilities of each machine and its availability to take part in the next dispatched job. That’s a complicated sentence – but hey, it’s a complicated task.

A naive implementation will be one that uses a trivial scheduler, which either is not fully automated or lacks the ability to allocate a fine portion of the compute engine. A great implementation will have all the attributes mentioned in the previous paragraph.

Once a job starts, data flows within the array. The traffic pattern, data size and duration all depend on the running job. It’s up to the network (the thing that connects those hundreds of compute devices) to provide the adequate resources that will enable the shortest job completion time (JCT).

You alert readers may have noticed that the scheduler doesn’t do anything about the network besides assume that it’s perfect. Anything below “perfect” will result in network nodes getting more traffic than they can handle – a phenomenon known as network congestion.
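To make that definition concrete, here is a minimal Python sketch that treats congestion as offered load exceeding link capacity. The flow rates, link speed and function name are illustrative assumptions, not measurements from a real array.

```python
def link_overload_gbps(flows_gbps, capacity_gbps):
    """Return how much offered traffic exceeds the link capacity (0 if none)."""
    offered = sum(flows_gbps)
    return max(0.0, offered - capacity_gbps)


# Hypothetical incast: four senders at 200 Gbps each converge on one 400 Gbps link.
incast = [200.0, 200.0, 200.0, 200.0]
excess = link_overload_gbps(incast, capacity_gbps=400.0)
print(f"offered={sum(incast)} Gbps, excess={excess} Gbps")
```

In this made-up case, half of the offered traffic has to be queued or dropped, which is exactly the situation the scheduler never accounted for.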

AI networking options

Traditional data centers are connected to a naive Ethernet network. The utilization of links in such data centers and the impact of a packet being delayed or lost are minimal. Such networks are built with simple (and low-cost) Ethernet hardware and run free open-source software, which makes sense in a use case where failure impact is minute.

Taking this naive Ethernet approach for use as the interconnect in a large compute array – where traffic volumes are much larger and the impact of flaws is huge – is very expensive. Compute devices are costly. A flawed network causes these compute elements to sit idle. In an array worth $100M, 20% idle time is $20M of wasted CapEx and OpEx. If the network cost is $1M (for simple boxes and free software), its actual cost is $21M. Conversely, a $10M network that cuts down the idle compute cycles to 5% has an actual cost of $15M, which is in fact $6M cheaper than the naive Ethernet option.
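The arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the reasoning in the paragraph; the function name is hypothetical, and the model deliberately ignores everything except network price and idle compute.

```python
def effective_network_cost(array_cost, network_cost, idle_fraction):
    """Network price plus the value of compute left idle because of the network."""
    return network_cost + array_cost * idle_fraction


ARRAY_COST = 100_000_000  # $100M compute array, as in the text

naive = effective_network_cost(ARRAY_COST, 1_000_000, 0.20)    # $1M network, 20% idle
better = effective_network_cost(ARRAY_COST, 10_000_000, 0.05)  # $10M network, 5% idle

print(f"naive: ${naive / 1e6:.0f}M")
print(f"better: ${better / 1e6:.0f}M")
print(f"saving: ${(naive - better) / 1e6:.0f}M")
```

Plugging in your own array cost and measured idle percentages gives a quick first-order comparison between network options.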

Several technologies exist to replace naive Ethernet. Some are proprietary, like InfiniBand, Fibre Channel and emulated Ethernet, and some are attempts to improve Ethernet to address network needs.

Two types of solutions stand out as the leading options from an adoption point of view. One is a smart network interface card (NIC), which holds network data and applies decisions on the link between servers and top-of-rack (ToR) switches in order to improve throughput. The other is a scheduled fabric, which provides a “blind” bandwidth pipe between all ToR devices regardless of traffic patterns.

Congestion is real

Congestion is real and there are multiple solutions trying to solve it. With AI models growing larger and larger, scaling the compute engines (typically GPUs) alone is insufficient, and the network between GPUs is becoming a crucial factor. The two leading options are smart NICs and scheduled fabric. Let’s take a closer look at these two solutions.

Smart NICs

One option that’s becoming predominant is the use of smart, network-aware network interface cards (NICs). They receive telemetry data sent to them from network elements either directly or via third-party agents. They then translate these inputs into insights that trigger flow management decisions, which can impact how traffic is spread throughout the network. In this way, many naive cases of congestion can be avoided. So the network becomes better utilized and compute utilization percentages increase.
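As a rough illustration of telemetry-driven flow management, the Python sketch below steers a new flow onto the least-utilized path. The telemetry format, path names and `pick_path` function are hypothetical; real smart NICs rely on vendor-specific telemetry and far more elaborate algorithms.

```python
def pick_path(utilization_by_path):
    """Return the path with the lowest reported utilization (0.0 to 1.0)."""
    return min(utilization_by_path, key=utilization_by_path.get)


# Hypothetical telemetry snapshot reported by network elements.
telemetry = {"spine-1": 0.92, "spine-2": 0.35, "spine-3": 0.60}
print(pick_path(telemetry))
```

Note that the decision is only as good as the telemetry is fresh: by the time a snapshot arrives, the traffic pattern may already have changed.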

Yet there are also cons to this solution. These NICs are pricey, and they significantly increase power consumption. They are offered by only a few vendors, each with a different implementation and unique telemetry, which hinders interoperability. The generated insights are also vendor-specific.

Above all, this solution is relevant for specific well-known AI workloads, while future workloads will require recalibration. If your workloads evolve, you will need to pour in more engineering resources; besides the money, this also means longer time to market. If your workloads do not evolve, then you’d better wake up before you find yourself lagging behind the industry.

AI workloads and models always evolve. Unlike the field of high-performance computing (HPC), which is similar in its demands from the network, the world of AI is rampaging with innovation and evolving use cases. Evolution is the name of the game and smart NICs are likely to pull you back.

Scheduled fabric

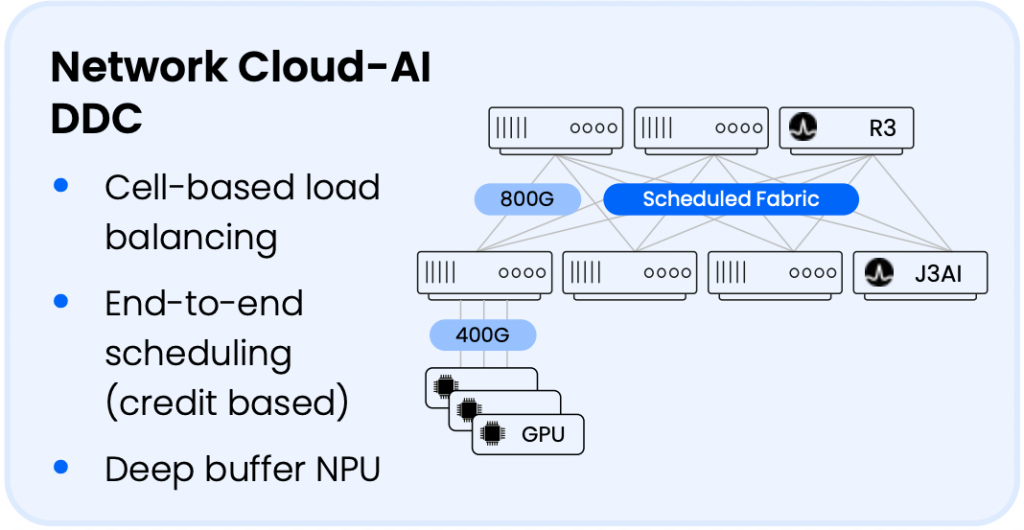

A scheduled fabric interfaces to the compute device via standard Ethernet. Between all the elements of the fabric, connectivity is based on hardware signaling rather than the software-based implementation of network protocols. This means that the entire fabric behaves like a single network element with congestion prevention mechanisms such as virtual output queues (VOQs) and credit-based scheduling.
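For readers unfamiliar with VOQs and credits, here is a toy Python model of the idea: traffic waits at the ingress in one queue per destination, and a packet crosses the fabric only when the egress grants a credit for it. The class, method names and per-slot capacities are hypothetical, and this is a simplified sketch rather than any vendor's implementation.

```python
from collections import deque


class ScheduledFabric:
    """Toy model: one VOQ per (ingress, egress) pair, credits granted per egress."""

    def __init__(self, egress_capacity):
        # egress_capacity: packets each egress port can accept per time slot
        self.egress_capacity = egress_capacity
        self.voqs = {}  # (ingress, egress) -> deque of waiting packets

    def enqueue(self, ingress, egress, packet):
        self.voqs.setdefault((ingress, egress), deque()).append(packet)

    def run_slot(self):
        """Grant credits for one time slot; return delivered packets per egress."""
        delivered = {egress: [] for egress in self.egress_capacity}
        for egress, credits in self.egress_capacity.items():
            # Non-empty VOQs holding traffic for this egress, in a fixed order
            queues = [self.voqs[k] for k in sorted(self.voqs)
                      if k[1] == egress and self.voqs[k]]
            # Round-robin: one credit per non-empty VOQ until credits run out
            while credits > 0 and any(queues):
                for q in queues:
                    if credits == 0:
                        break
                    if q:
                        delivered[egress].append(q.popleft())
                        credits -= 1
        return delivered


fabric = ScheduledFabric({"gpu-a": 2, "gpu-b": 2})
for n in range(3):
    fabric.enqueue("tor-1", "gpu-a", f"t1-a{n}")
    fabric.enqueue("tor-2", "gpu-a", f"t2-a{n}")
fabric.enqueue("tor-1", "gpu-b", "t1-b0")
print(fabric.run_slot())
```

In the first slot, `gpu-a` receives exactly two packets (one from each ToR) even though six are waiting, and the packet for `gpu-b` is delivered in the same slot because it sits in its own queue rather than behind the `gpu-a` backlog. No egress is ever sent more than it can accept, which is the sense in which congestion is prevented rather than detected.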

Preventing congestion will always outperform detecting congestion and carrying out remediation. This is simply because detection takes some time and remediation also takes some time, leading to halted traffic during this accumulated time.

Since we eliminated congestion from inside the fabric, congestion can only occur on the final link connecting the compute endpoint to the fabric, from the NIC to the top-of-rack (ToR) device. Since this last link is fixed and attributed to a single compute element, it is a resource that is inherently controlled from a bandwidth perspective by either the GPU or the scheduler; congestion on that link reflects a configuration error.

Clearly, compute utilization is maximized in such a manner, yet there are still some drawbacks to this solution. The network devices constructing the fabric are more advanced (and hence more costly) than naive Ethernet switches, and the software running on these devices adds further cost. The scale of such solutions currently peaks at 32K endpoints of 800G, which is very high, but there are cases where this limit could be reached. Lastly, while this solution uses standard Ethernet towards other devices, it also involves a non-standard fabric between fabric elements.

Had this been a chassis with 32K ports, you’d probably not care about the fact that the insides of the chassis are proprietary. However, since it is a distributed solution of stand-alone elements, this becomes a burden on your conscience.

Since a distributed fabric solution is implemented with standard Ethernet towards the servers, any Ethernet NIC can be used with such deployments. This can include smart NICs as well for an extra performance boost, although we haven’t really seen meaningful improvements with this combination. Perhaps that will change in the future, when smart NICs evolve further.

Questions to ask for choosing an AI networking solution

Assuming you’ve narrowed it down to the two options described here, there are some questions you need to ask before choosing your solution:

- What is the overall power consumption of the interconnect solution from NIC to NIC?

- What kind of variety of workloads is expected on your compute array?

- Are you expecting additional workloads during the life expectancy of this infrastructure?

- Do you have the know-how to run a full-blown open-source network operating system (NOS)?

- Do you have the required skillset for calibrating a smart NIC to specific needs?

- Are you willing to wait for a solution to evolve while the industry is rampaging ahead?

Choosing an AI networking solution can be incremental

There are different needs for which compute arrays are built. Choosing a proprietary solution that leads to vendor lock-in and/or betting on a solution that is known to degrade compute performance are clear nonstarters. Enhanced Ethernet solutions come in different flavors, and there are several criteria by which you can assess which is the best for your needs.

One last point – you don’t have to make a hard and final decision. We see cases where multiple solutions reach deployment with the same customer: alongside the DriveNets Network Cloud-AI solution, we also see InfiniBand and even the last instances of naive Ethernet in deployment, while smart NIC-based solutions are being evaluated as they mature towards production readiness.

More about DriveNets Network Cloud-AI, which is based on the largest-scale DDC (Distributed Disaggregated Chassis) architectures and implemented with scheduled fabric, can be found here.

FAQs for AI Networking

- What are two types of AI networking options?

One is a smart network interface card (NIC), which holds network data and applies decisions on the link between servers and top-of-rack (ToR) switches in order to improve throughput. The other is a scheduled fabric, which provides a “blind” bandwidth pipe between all ToR devices regardless of traffic patterns.

- What provides the shortest job completion time possible?

It’s up to the network (the thing that connects those hundreds of compute devices) to provide the adequate resources that will enable the shortest job completion time (JCT).

- What are the cons to Smart NICs?

Smart NICs are pricey, and they significantly increase power consumption. They are offered by only a few vendors, each with a different implementation and unique telemetry, which hinders interoperability. The generated insights are also vendor-specific.

Additional Resources for AI Networking

Utilizing Distributed Disaggregated Chassis (DDC) for Back-End AI Networking Fabric