I had the opportunity to dive deep into the latest advancements in networking, power, data center design, and, of course, the future of AI infrastructure. Interestingly, the most pervasive buzz throughout the conference halls was “networking is cool again!”

I don’t know about you, but I always thought networking was cool. In my upcoming posts, I’ll dissect my key takeaways from the event in each of these areas.

Here are some of the most impactful insights I gained during NVIDIA GTC 2024!

Know Your AI Models

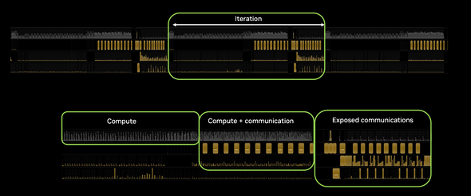

The key to a high-performance AI network lies in understanding communication patterns, not just the network itself. This means analyzing your GPU workloads (training vs. inference), their collective communication patterns (all-reduce, all-to-all, etc.), and their data flows. You’ll need to balance these requirements across the available network options while anticipating potential bottlenecks.
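To make "communication patterns" concrete, the sketch below estimates how many bytes each GPU sends for two common collectives. It assumes the textbook ring all-reduce (a reduce-scatter followed by an all-gather, 2(N-1)/N of the message per GPU) and an evenly split all-to-all. The 10 GB gradient size is a hypothetical example, and real libraries may choose other algorithms (trees, hierarchical schemes) with different traffic profiles.

```python
def ring_allreduce_bytes_per_gpu(message_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce.

    Reduce-scatter and all-gather each move (N - 1)/N of the message per GPU,
    so the total is 2 * (N - 1) / N * S.
    """
    if num_gpus < 2:
        return 0.0
    return 2 * (num_gpus - 1) / num_gpus * message_bytes


def alltoall_bytes_per_gpu(message_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in an all-to-all where its S bytes are split evenly
    across N GPUs (the GPU keeps its own 1/N share locally)."""
    if num_gpus < 2:
        return 0.0
    return (num_gpus - 1) / num_gpus * message_bytes


if __name__ == "__main__":
    grads = 10e9  # hypothetical: 10 GB of gradients exchanged per training step
    for n in (8, 64, 1024):
        ar = ring_allreduce_bytes_per_gpu(grads, n) / 1e9
        a2a = alltoall_bytes_per_gpu(grads, n) / 1e9
        print(f"N={n:5d}: all-reduce {ar:6.2f} GB/GPU, all-to-all {a2a:6.2f} GB/GPU")
```

Per-GPU volume quickly approaches 2S for all-reduce regardless of cluster size, so the question that changes with scale is how much of that traffic crosses the slower inter-node links rather than staying inside a node.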

Critical factors include the communication behavior of each training iteration of your language model, bandwidth usage across intra-node and inter-node networks, traffic bursts, how failures affect model performance, and the level of redundancy and resiliency required. Without a deep grasp of network traffic patterns, you risk building an infrastructure that throttles your AI model’s performance.

This level of expertise is typically found among network engineers at major cloud providers, who have the resources to fine-tune models and infrastructure for peak performance. However, many other companies building or planning AI networks may lack the resources or the deep understanding this complexity demands.

Fortunately, there are options beyond expensive vendor professional services or standard Ethernet CLOS networks with their potential operational shortcomings. High-performance Ethernet solutions, like DriveNets’ Network Cloud-AI, offer built-in congestion control mechanisms, providing lossless, Ethernet-based performance on standard white box hardware. Network Cloud-AI boasts ultra-fast failover (under 1 ms, faster than both InfiniBand and standard CLOS) and scales to support massive deployments of 32,000 GPUs at 800 Gbps. Additionally, scheduled fabric solutions eliminate the need to readjust network parameters for each new model, because traffic is scheduled predictably through Virtual Output Queues (VOQs).
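Virtual Output Queues keep a separate queue per egress port at each ingress, so congestion toward one port does not stall traffic headed elsewhere (head-of-line blocking). The toy model below, assuming one ingress port, one packet sent per cycle, and one permanently congested egress port, compares a single FIFO with VOQs served round-robin. It is a hypothetical illustration of the queuing idea only, not DriveNets’ scheduler, and it does not model the credit-based scheduling that makes a fabric lossless.

```python
from collections import deque


def simulate_fifo(packets, blocked, cycles):
    """Single FIFO ingress queue: only the head-of-line packet is eligible."""
    queue = deque(packets)
    delivered = []
    for _ in range(cycles):
        # If the head packet targets a congested port, everything behind it waits.
        if queue and queue[0] not in blocked:
            delivered.append(queue.popleft())
    return delivered


def simulate_voq(packets, blocked, cycles):
    """One virtual output queue per egress port, served round-robin."""
    voqs = {}
    for dst in packets:
        voqs.setdefault(dst, deque()).append(dst)
    ports = list(voqs)  # egress ports in first-seen order
    delivered = []
    start = 0
    for _ in range(cycles):
        # Send at most one packet per cycle, skipping congested or empty VOQs.
        for i in range(len(ports)):
            idx = (start + i) % len(ports)
            port = ports[idx]
            if voqs[port] and port not in blocked:
                delivered.append(voqs[port].popleft())
                start = (idx + 1) % len(ports)
                break
    return delivered


if __name__ == "__main__":
    arrivals = [2, 1, 3, 1, 3]  # destination egress port of each packet
    congested = {2}             # egress port 2 cannot accept traffic
    print("FIFO:", simulate_fifo(arrivals, congested, cycles=5))
    print("VOQ: ", simulate_voq(arrivals, congested, cycles=5))
```

With these inputs the FIFO delivers nothing, because the packet for port 2 sits at the head of the queue, while the VOQ version delivers all four packets bound for ports 1 and 3.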

In essence, by prioritizing a thorough understanding of your AI workloads and network traffic patterns, you can choose the most suitable and efficient network solution for your specific needs.

Image 1 – NVIDIA – LLM compute and communication profiling (NVIDIA GTC)

The Network is the Super Computer

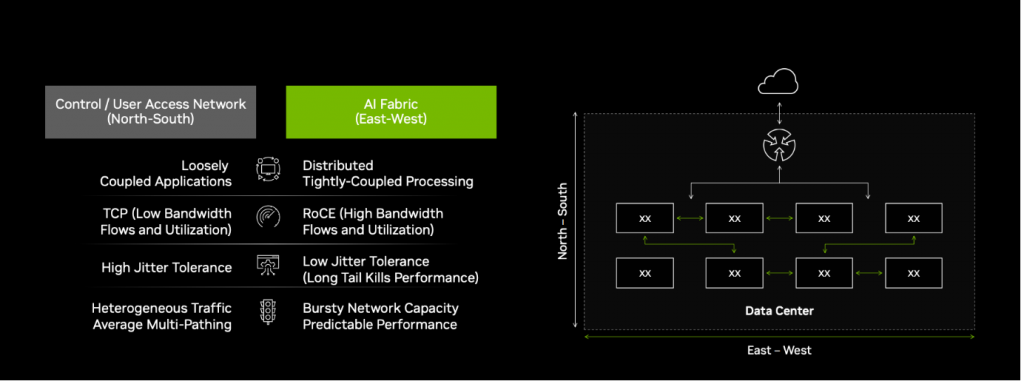

An AI network goes beyond a traditional network; it’s a multi-layered supercomputer with intricate connectivity. Unlike a simple “back-end” with high-bandwidth connections, an AI network utilizes a complex fabric of interconnected layers.

The first layer focuses on internal communication within a node (scale-up), using specialized technologies like NVLink, Infinity Fabric, or PCIe to connect GPUs, CPUs, storage, and NICs. The next layer handles communication between nodes in a cluster (scale-out), leveraging solutions like NVLink Switches, InfiniBand, or Ethernet to connect GPUs, DPUs, and storage. Critically, each layer requires dedicated management that accounts for latency, jitter, bandwidth, and resiliency. These layers must also coexist seamlessly and support each other within this intricate network architecture.
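A quick way to see why the scale-up and scale-out layers must be managed differently is to compare ideal transfer times for the same payload. The bandwidths below are assumptions chosen for illustration (450 GB/s per direction for an NVLink-class scale-up link, one 400 Gb/s NIC per GPU for scale-out), and the 1 GB shard is hypothetical; latency and protocol overhead are ignored.

```python
# Assumed per-GPU, one-direction bandwidths in gigabytes per second (illustrative only).
LINK_BW_GBYTES_PER_S = {
    "scale-up (NVLink-class)": 450.0,
    "scale-out (400 Gb/s NIC)": 400.0 / 8,  # 400 gigabits/s -> 50 gigabytes/s
}


def transfer_ms(size_gb: float, bandwidth_gbytes_per_s: float) -> float:
    """Ideal serialization time in milliseconds, ignoring latency and overhead."""
    return size_gb / bandwidth_gbytes_per_s * 1000.0


if __name__ == "__main__":
    size_gb = 1.0  # hypothetical 1 GB activation or gradient shard
    for name, bw in LINK_BW_GBYTES_PER_S.items():
        print(f"{name:26s}: {transfer_ms(size_gb, bw):7.2f} ms")
```

Under these assumptions the scale-out hop is roughly 9x slower, which is why placing the heaviest traffic inside the scale-up domain matters.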

Beyond these core layers, additional networks exist for out-of-band management and BMC (Baseboard Management Controller) connectivity. These networks support operational tasks like life cycle management, monitoring, telemetry, and provisioning.

Designing an AI network is a far cry from deploying a pure CLOS or Fat-Tree topology commonly used in cloud computing. Network architects building AI networks must possess a deep understanding of the detailed design of every component, from the GPU to the CPU, storage, interconnect fabrics, and beyond. This comprehensive knowledge is crucial to optimize communication patterns, minimize latency, and ensure efficient data flow throughout the entire multi-layered supercomputer.

Image 2 – NVIDIA – Entering A New Frontier of AI Networking Innovation (NVIDIA GTC)

Power is the Name of the ‘Networking’ Game

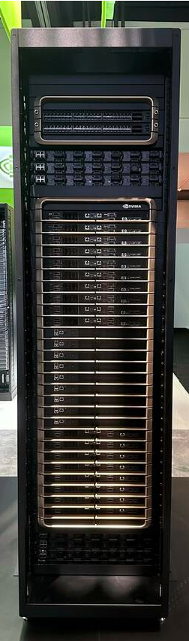

The surge in power demands from new GPUs like the B100 (exceeding 1000W in some configurations and requiring liquid cooling) is pushing data center providers to build massive facilities capable of supporting such needs. The NVIDIA DGX GB200 NVL72 solution requires over 120kW in a single rack! My immediate thought was, “Who can really support this requirement other than the top hyperscalers?”

Image 3 – NVIDIA DGX GB200 NVL72 (NVIDIA GTC)

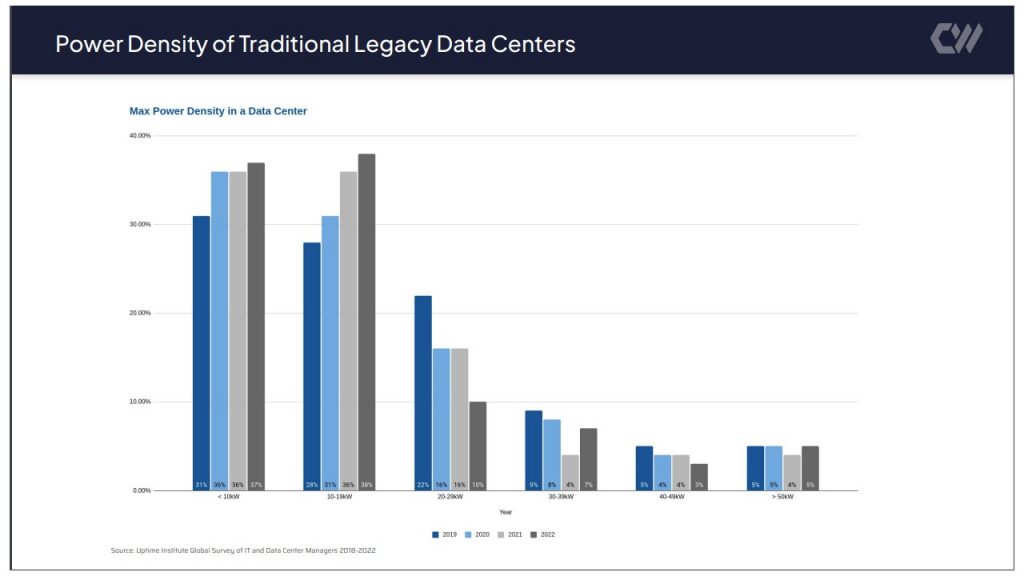

To my surprise, CoreWeave, a specialized cloud provider focused on delivering GPUs at large scale, mentioned plans to build 100kW air-cooled and 300kW liquid-cooled racks, with supporting data center capacity of 300MW. For reference, CoreWeave shared that just two years ago, only 5% of data centers supported 50kW per rack. Per-rack power requirements have more than doubled over the past 24 months.

Image 4 – CoreWeave – The Redesign of the Data Center Has Already Started (NVIDIA GTC)

There is a tight correlation between the AI network architecture and the power capacity of the rack. Higher capacity per rack allows for stacking more GPU nodes, enabling the use of copper cabling within the rack to connect to a Top-of-Rack (TOR) or leaf switch, instead of power-hungry optical transceivers. Copper offers a significant power reduction compared to optics, especially in very large GPU training systems that require tens of thousands of networking ports, where optics would consume a massive amount of power.
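The scale of the savings is easy to estimate. The sketch below assumes about 15 W per 800G optical transceiver and treats a passive DAC as drawing no module power; both figures are illustrative assumptions, since real values vary by module and vendor. It counts only one GPU-to-leaf link per GPU for the 32,000-GPU scale mentioned earlier, with a module at each end of every link.

```python
OPTIC_W_PER_END = 15.0  # assumed power of one 800G pluggable optical module (W)
DAC_W_PER_END = 0.0     # passive copper DAC assumed to draw no module power (W)


def link_power_kw(num_links: int, watts_per_end: float) -> float:
    """Module power in kW for point-to-point links with a module at both ends."""
    return num_links * 2 * watts_per_end / 1000.0


if __name__ == "__main__":
    gpu_links = 32_000  # hypothetical: one GPU-to-leaf link per GPU
    optics = link_power_kw(gpu_links, OPTIC_W_PER_END)
    copper = link_power_kw(gpu_links, DAC_W_PER_END)
    print(f"optics: {optics:.0f} kW, copper: {copper:.0f} kW, saved: {optics - copper:.0f} kW")
```

Under these assumptions the GPU-to-leaf tier alone would spend close to a megawatt on optics; leaf-to-spine links, which usually need optics for reach, come on top of that.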

This new requirement for significant power per rack presents challenges, as only a few providers can currently handle the high demands of large GPU clusters (thousands of units) within a data center.

For example, racks limited to 15kW or 30kW can accommodate only 1-2 NVIDIA HGX/DGX servers. Connecting hundreds of such servers requires a design with a Top-of-Rack (TOR) switch for every 1-2 racks. This allows Direct Attach Copper (DAC) cabling over short distances, but longer runs between racks may require optics, as in the NVIDIA Rail-optimized design.
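The arithmetic behind the "1-2 servers per rack" figure can be sketched as follows. It assumes about 10.2 kW maximum draw for one 8-GPU DGX H100-class server and treats power as the only constraint (space, cooling, and cabling are ignored); the 256-server cluster size is hypothetical.

```python
import math

# Assumption: ~10.2 kW maximum draw for one 8-GPU DGX H100-class server.
SERVER_KW = 10.2


def servers_per_rack(rack_kw: float, server_kw: float = SERVER_KW) -> int:
    """Whole servers that fit within a rack's power budget (power is the only constraint modeled)."""
    return math.floor(rack_kw / server_kw)


def racks_needed(num_servers: int, rack_kw: float, server_kw: float = SERVER_KW) -> int:
    """Racks required to host num_servers at the given per-rack power budget."""
    per_rack = servers_per_rack(rack_kw, server_kw)
    if per_rack == 0:
        raise ValueError("rack power budget is below a single server")
    return math.ceil(num_servers / per_rack)


if __name__ == "__main__":
    cluster = 256  # hypothetical cluster size in servers
    for rack_kw in (15, 30, 120):
        print(f"{rack_kw:>3} kW rack: {servers_per_rack(rack_kw)} servers/rack, "
              f"{racks_needed(cluster, rack_kw)} racks for {cluster} servers")
```

With these assumptions, 15kW and 30kW budgets yield 1 and 2 servers per rack, so 256 servers spread over 256 or 128 racks, while a 120kW budget fits them in 24 racks, shortening cable runs enough to keep far more links on copper.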

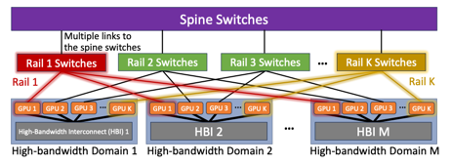

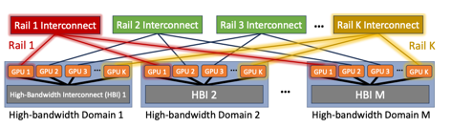

There are several reference architectures deployed today, such as the non-blocking Fat-Tree topology commonly used in most hyperscalers’ data centers. Unique architectures also exist, like the NVIDIA Rail-optimized design, which prioritizes communication between specific groups of GPUs (ranks) to reduce overall network traffic. Additionally, a recent paper by Meta (“HOW TO BUILD LOW-COST NETWORKS FOR LARGE LANGUAGE MODELS”) explores bisection bandwidth demands and suggests using High Bandwidth (HB) domains based on interconnect solutions like NVIDIA NVLink and NVSwitch instead of building large Ethernet networks with full any-to-any connectivity.
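The difference between the rail-optimized and rail-only designs comes down to how a GPU reaches a peer on another rail. The sketch below is a simplified, hypothetical path model: it assumes GPU i of every server attaches to rail switch i (one switch per rail), that the rail-optimized design sends cross-rail traffic through a spine, and that the rail-only design (as in the Meta paper) drops the spine and first forwards over NVLink inside the HB domain. Real deployments add multiple leaves per rail and library-level routing choices not modeled here.

```python
from typing import NamedTuple


class Gpu(NamedTuple):
    server: int  # server (HB domain) index
    local: int   # GPU index within the server, which also selects its rail


def rail_switch(gpu: Gpu) -> int:
    """Assumed wiring: GPU i of every server attaches to rail switch i."""
    return gpu.local


def path(src: Gpu, dst: Gpu, rail_only: bool) -> list[str]:
    """Hypothetical hop list between two GPUs in a rail-based fabric."""
    if src.server == dst.server:
        return ["nvlink"]  # same server: traffic stays in the scale-up domain
    if rail_switch(src) == rail_switch(dst):
        return [f"rail{rail_switch(src)}"]  # same rail: a single switch hop
    if rail_only:
        # No spine layer: hop over NVLink to the local GPU on the destination's rail.
        return ["nvlink", f"rail{rail_switch(dst)}"]
    # Rail-optimized: cross-rail traffic climbs to the spine layer.
    return [f"rail{rail_switch(src)}", "spine", f"rail{rail_switch(dst)}"]


if __name__ == "__main__":
    a, b, c = Gpu(0, 3), Gpu(5, 3), Gpu(5, 6)
    print(path(a, b, rail_only=False))  # same rail
    print(path(a, c, rail_only=False))  # cross rail via spine
    print(path(a, c, rail_only=True))   # cross rail via NVLink, no spine
```

The rail-only variant trades the spine layer (and its switches and optics) for extra NVLink hops, which works when most cross-server traffic already flows between GPUs of the same rank.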

In conclusion, AI network design must account for the unique demands of the GPU system, including rack power capacity, distance between racks, and communication patterns. Future articles will delve deeper into these network architectures and their impact on data center costs and power consumption.

Image 5 – Rail-Optimized Architecture (HOW TO BUILD LOW-COST NETWORKS FOR LARGE LANGUAGE MODELS)

Image 6 – Rail-only Architecture (HOW TO BUILD LOW-COST NETWORKS FOR LARGE LANGUAGE MODELS)

The 800G Race is On!

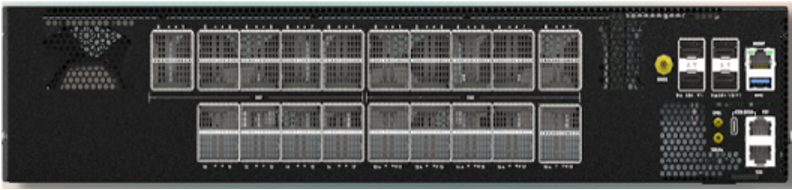

NVIDIA has entered the 800 Gbps networking race with its Quantum-X800 InfiniBand (IB) switches and Spectrum-X800 Ethernet platforms. Previously, competitors offered a maximum throughput of 51.2 Tbps per switch, but the Quantum-X800 makes a significant leap with 144 × 800 Gbps ports (115.2 Tbps) and the potential to connect 10,368 GPUs at 800G. Notably, these switches use 224 Gbps SerDes technology (also under development by others), but keep in mind the gap between announcement and actual product availability.
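The 10,368 figure is exactly 144²/2, the number of endpoints a non-blocking two-tier leaf-spine built from 144-port switches can connect. That suggests (our reading, not an NVIDIA statement) the number refers to such a topology. The sketch below compares it with a 51.2 Tbps switch run as 64 × 800G ports, assuming every leaf splits its ports evenly between hosts and spines.

```python
def switch_capacity_tbps(ports: int, port_gbps: int) -> float:
    """Aggregate switch capacity in Tbps (ports times per-port speed)."""
    return ports * port_gbps / 1000


def two_tier_endpoints(radix: int) -> int:
    """Max hosts on a non-blocking two-tier leaf-spine of radix-k switches.

    Each leaf splits its k ports: k/2 down to hosts, k/2 up to spines.
    Each spine has k ports, one per leaf, so there can be k leaves,
    giving k * k/2 = k**2 / 2 host ports.
    """
    return radix * (radix // 2)


if __name__ == "__main__":
    # 51.2 Tbps switch as 64 x 800G vs. a 144 x 800G switch.
    for ports in (64, 144):
        print(f"{ports} x 800G: {switch_capacity_tbps(ports, 800):.1f} Tbps, "
              f"up to {two_tier_endpoints(ports):,} endpoints in two tiers")
```

Because endpoint count grows with the square of the radix, the jump from 64 to 144 ports at 800G raises two-tier reach from 2,048 to 10,368 GPUs, avoiding a third switching tier and the extra optics it would need.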

NVIDIA further expands its offerings with the ConnectX-8 NIC adapters supporting 800G speeds. This highlights its focus on a fully integrated solution, but potential customers should be aware that ConnectX-8 NICs require compatible optical connections to work with any vendor’s network switches. As with the transition from CX-7 to CX-8, NVIDIA may favor its LinkX brand of optics, although alternatives exist, potentially with limited compatibility.

For those seeking immediate access to 800G networking, DriveNets Network Cloud-AI, based on Broadcom Jericho3-AI (J3AI) and Ramon3 (R3) chipsets, is available TODAY. This solution offers 800G connectivity across various networking platforms and supports an open ecosystem of optics from multiple vendors.

Image 7 – DriveNets Network Cloud-AI Leaf – NCP5-AI

Image 8 – DriveNets Network Cloud-AI Spine – NCF2-AI
